I am trying to implement the Deep Image Analogy https://arxiv.org/pdf/1705.01088.pdf paper.

The part that I am unable to get to work is the Deconvolution operation that they used (not the same as deconv layer). The logic is as follows:

- Initialise noise

- Pass through a subset of layers from VGG

- Calculate MSE with an existing activations of the same feature map (of another image)

- Backpropagate, and update noise

For a forward pass through a subset of VGG , this is what I am using:

def forward_subnet(self, input_tensor, start_layer, end_layer):

for i, layer in enumerate(list(self.model)):

if i >= start_layer and i <= end_layer:

input_tensor = layer(input_tensor)

return input_tensor

My ‘deconv’ function looks like this :

def get_deconvoluted_feat(feat,feat_layer_num,iters=13):

feat = cn_first(feat)

feat = torch.from_numpy(feat)

if feat_layer_num == 5:

start_layer,end_layer = 20,29

noise = np.random.normal(size=(1,512,28*1,28*1),scale=3)

elif feat_layer_num == 4:

start_layer,end_layer = 11,20

noise = np.random.normal(size=(1,256,56*1,56*1),scale=3)

elif feat_layer_num == 3:

start_layer,end_layer = 6,11

noise = np.random.normal(size=(1,128,112*1,112*1),scale=3)

elif feat_layer_num == 2:

start_layer,end_layer = 1,6

noise = np.random.normal(size=(1,64,224*1,224*1),scale=3)

else:

print("Invalid layer number")

noise = Variable(torch.from_numpy(noise).float(),requires_grad=True)

noise = noise.cuda()

noise = nn.Parameter(noise.data.clone(),requires_grad=True)

optimizer = optim.LBFGS([noise],max_iter=1350,lr=1)

if not next(model.model.parameters()).is_cuda:

model.model = model.model.cuda()

feat = Variable(feat).cuda()

loss = nn.MSELoss(size_average=False)

def closure():

optimizer.zero_grad()

output = model.forward_subnet(input_tensor=noise,start_layer=start_layer,end_layer=end_layer)

loss_valu = loss(output,feat)

loss_valu.backward()

loss_hist.append(loss_valu.data.cpu().numpy())

return loss_valu

loss_hist = []

for i in range(iters):

optimizer.zero_grad()

optimizer.step(closure)

pylab.rcParams['figure.figsize'] = (10, 10)

plt.plot(loss_hist)

plt.show()

noise_cpu = noise.cpu().data.squeeze().numpy()

del feat

del noise

return cn_last(noise_cpu)

The optimised noise is then passed via Patchmatch (which i am fairly confident works)

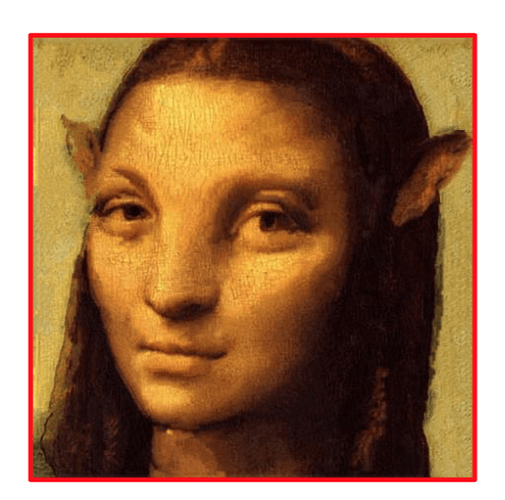

The output of my whole framework is(This was done on ADAM optimiser, not LBFGS,using LBFGS gives garbage-ish results):

as compared to :

This is a tensorflow version that works identical to the paper :

If anybody needs more information , ill be more than happy to provide that.

This is a great project, and I want to port it to pytorch real bad. thanks