Autograd doesn’t work for torch.linalg.lstsq when dealing with complex tensors. Example:

import torch

A = torch.complex(torch.tensor([[1.,0.],[0.,1.]]), torch.tensor([[1.,0.],[0.,1.]]))

b = torch.complex(torch.tensor([2.,3.]), torch.tensor([1.,0.]))

A.requires_grad = True

b.requires_grad = True

x = torch.linalg.lstsq(A,b).solution

print(x) # gives tensor([1.5000-0.5000j, 1.5000-1.5000j], grad_fn=<LinalgLstsqBackward0>)

x[0].backward()

This results in `IndexError: Dimension out of range (expected to be in range of [-1, 0], but got -2)`.

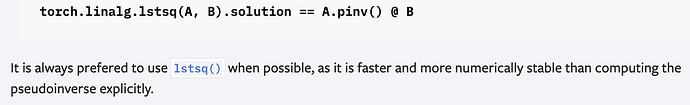
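For comparison, here is a minimal sketch of the same computation done through torch.linalg.pinv instead of lstsq. It uses the same A and b as above. Calling backward on the real part of x[0] is my own choice for this sketch, so that the output being differentiated is a real scalar; it is not part of the failing example.

```python
import torch

# Same complex A and b as in the failing example.
A = torch.complex(torch.eye(2), torch.eye(2)).requires_grad_()
b = torch.complex(torch.tensor([2., 3.]), torch.tensor([1., 0.])).requires_grad_()

# Least-squares solution via the pseudoinverse instead of lstsq.
x = torch.linalg.pinv(A) @ b

# Differentiate a real scalar (assumption of this sketch: the real part of x[0]).
x[0].real.backward()

print(x)       # same values as the lstsq solution above
print(A.grad)  # populated, no IndexError
print(b.grad)
```

This only shows that the pinv route backpropagates for these inputs; it does not explain why the lstsq backward fails.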

Note: I can get the same x via torch.linalg.pinv(A) @ b, and autograd does work for pinv. However, I am curious why autograd doesn’t work for lstsq, especially since, according to the documentation: