Hello, PyTorch community.

For the past few weeks I have been struggling to get a piece of code to work, something I had assumed would be a trivial task. Here it is:

I. The task.

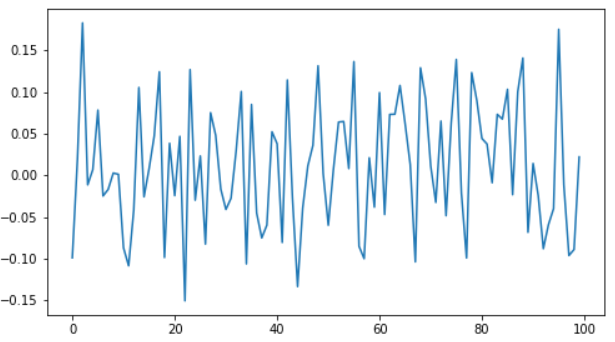

- Download some Bitcoin data and pre-process it (transform closing prices into returns).

- Slice the BTC data set into windows of length 100.

- Define a VAE architecture, so I can use the decoder to simulate time series.
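
The first two steps above can be sketched roughly as follows. This is only a minimal sketch, not the notebook's code: the synthetic `close` series stands in for the downloaded BTC closing prices, simple (not log) returns are assumed, and the helper names `to_returns` and `make_windows` are hypothetical.

```python
import torch


def to_returns(close: torch.Tensor) -> torch.Tensor:
    """Turn a 1-D series of closing prices into simple returns."""
    return close[1:] / close[:-1] - 1.0


def make_windows(series: torch.Tensor, length: int = 100, step: int = 1) -> torch.Tensor:
    """Slice a 1-D series into overlapping windows of shape (n_windows, length)."""
    return series.unfold(0, length, step)


# Synthetic stand-in for the downloaded BTC closing prices (assumption).
torch.manual_seed(0)
close = 20000.0 * torch.exp(torch.cumsum(0.01 * torch.randn(1000), dim=0))

returns = to_returns(close)      # one value fewer than the price series
windows = make_windows(returns)  # each row is one window of 100 returns
print(returns.shape, windows.shape)
```

With `step=1` consecutive windows overlap in all but one value; a larger `step` gives fewer, less correlated windows.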

II. The problem.

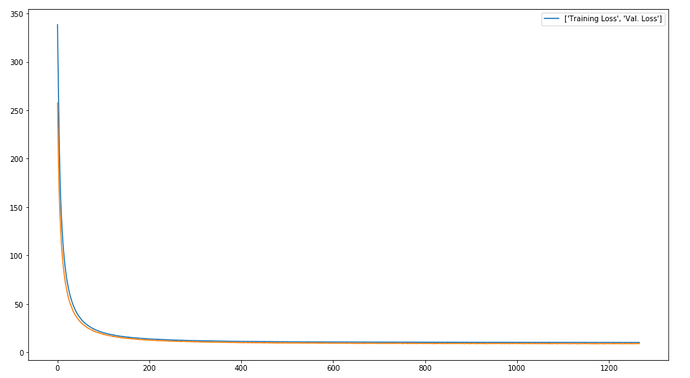

First I started with LSTM and GRU layers. I wasn't sure whether my model was learning anything at all, since the outputs kept flattening out no matter how much I tweaked the hyperparameters. So, before moving on to more sophisticated layers (Conv1d), I decided to use only Linear layers (the code in the notebook linked below), just to test whether the approach can work at all.
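
For readers who do not want to open the notebook, a Linear-only VAE of this kind might look roughly like the sketch below. It is an illustration under assumptions, not the notebook's actual model: the layer sizes, the latent dimension, the class name `LinearVAE`, and the `beta` weight on the KL term are all hypothetical choices.

```python
import torch
from torch import nn
import torch.nn.functional as F


class LinearVAE(nn.Module):
    """Minimal fully connected VAE for fixed-length windows (illustrative sizes)."""

    def __init__(self, window: int = 100, hidden: int = 64, latent: int = 8):
        super().__init__()
        self.enc = nn.Sequential(nn.Linear(window, hidden), nn.ReLU())
        self.mu = nn.Linear(hidden, latent)
        self.logvar = nn.Linear(hidden, latent)
        self.dec = nn.Sequential(
            nn.Linear(latent, hidden), nn.ReLU(), nn.Linear(hidden, window)
        )

    def encode(self, x):
        h = self.enc(x)
        return self.mu(h), self.logvar(h)

    def reparameterize(self, mu, logvar):
        # Sample z = mu + sigma * eps so gradients flow through mu and logvar.
        std = torch.exp(0.5 * logvar)
        return mu + std * torch.randn_like(std)

    def decode(self, z):
        return self.dec(z)

    def forward(self, x):
        mu, logvar = self.encode(x)
        z = self.reparameterize(mu, logvar)
        return self.decode(z), mu, logvar


def vae_loss(recon, x, mu, logvar, beta: float = 1.0):
    """Per-sample reconstruction error plus beta-weighted KL divergence to N(0, I)."""
    recon_term = F.mse_loss(recon, x, reduction="sum") / x.size(0)
    kl = -0.5 * torch.sum(1 + logvar - mu.pow(2) - logvar.exp()) / x.size(0)
    return recon_term + beta * kl


torch.manual_seed(0)
model = LinearVAE()
x = 0.02 * torch.randn(32, 100)               # stand-in batch of return windows
recon, mu, logvar = model(x)
loss = vae_loss(recon, x, mu, logvar)
simulated = model.decode(torch.randn(5, 8))   # simulate 5 series from the prior
```

The last line is the simulation step from the task list: latent vectors are drawn from a standard normal and passed through the decoder only.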

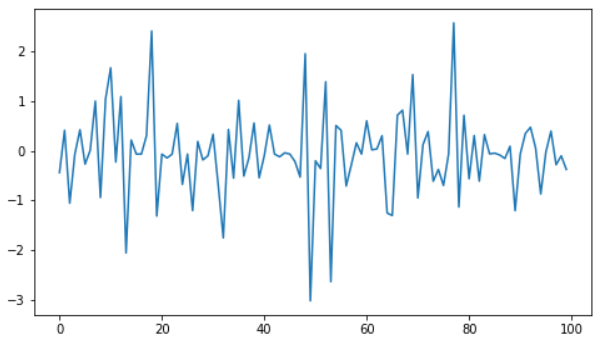

The output of the decoder does indeed look somewhat like a time series. However, its scale is different from that of the input (similar to: The network output matches the target curve but with a different scale - #15 by vmirly1).

I tried increasing the number of epochs, adding more layers (linear transformations), changing the learning rate, and so on. The results are the same: the output looks like a time series, but on a very different scale.
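
To put a number on "a very different scale", something like the sketch below could be used. It is a diagnostic under assumptions, not a confirmed fix: `scale_ratio` compares the spread of the decoder output with the spread of the input, and `standardize` / `unstandardize` (hypothetical helper names) rescale the windows to unit variance before training and map outputs back afterwards. Whether standardizing changes the behaviour of this particular model is untested here.

```python
import torch


def scale_ratio(output: torch.Tensor, target: torch.Tensor) -> float:
    """Ratio of standard deviations; a value near 1.0 means matching scale."""
    return (output.std() / target.std()).item()


def standardize(windows: torch.Tensor):
    """Rescale to zero mean and unit variance using global statistics."""
    mean, std = windows.mean(), windows.std()
    return (windows - mean) / std, mean, std


def unstandardize(x: torch.Tensor, mean: torch.Tensor, std: torch.Tensor) -> torch.Tensor:
    """Invert standardize() so outputs are back on the scale of the returns."""
    return x * std + mean


torch.manual_seed(0)
windows = 0.02 * torch.randn(900, 100)   # stand-in for the real return windows
scaled, mean, std = standardize(windows)
restored = unstandardize(scaled, mean, std)

# Stand-in for a decoder output that has the right shape but the wrong scale.
fake_output = 0.1 * windows
print(scale_ratio(fake_output, windows))
```

In practice `fake_output` would be replaced by the model's reconstruction of the same batch, so the ratio reflects the actual mismatch.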

I am new to PyTorch, and I was wondering if someone could please shed some light on this matter, because I honestly don't know what else to try. I am uploading my notebook. I would really appreciate it if someone could take a look!

Colab Link: Google Colab

I thank you for your time!