Hi, I trained my model on a GPU and saved the weights.

When I load the saved weights on a CPU, the accuracy drops and the model performs badly.

Is this normal?

No, that’s not expected.

Compare the outputs for a static input tensor (e.g. torch.ones) on the GPU vs. the CPU after calling model.eval() and before saving the state_dict. If these are close (up to floating point precision), compare the outputs again after loading the model.

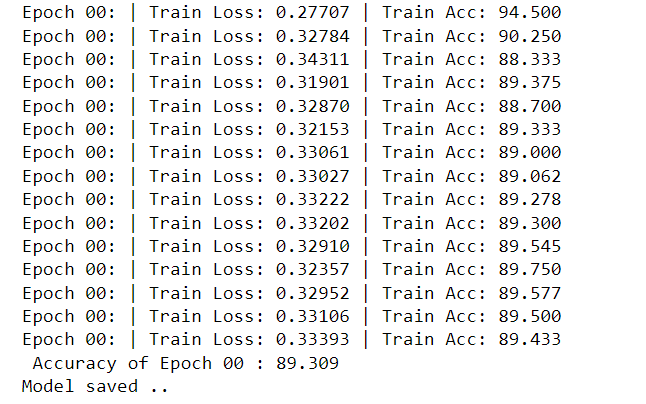

Right now, I am training a sign language model on a dataset I created myself.

The training accuracy is as shown in the image above.

The validation accuracy is about the same,

but in the testing code it is very bad.

Any hints?

And this is the testing code:

#test

img = cv2.imread(r"C:\Users\Ali\Sign_Language_Project\test\20211229_234757.jpg")

img = cv2.resize(img , (512,512))

plt.imshow(img)

img_ten = torch.from_numpy(img)

img_ten = img_ten.to(device)

img_ten = torch.permute(img_ten , (2,1,0))

img_ten = torch.unsqueeze(img_ten,0)

img_ten = img_ten.type(torch.cuda.FloatTensor)

pred = m(img_ten)

y_pred_softmax = torch.log_softmax(pred, dim = 1)

_, y_pred_tags = torch.max(y_pred_softmax, dim = 1)

print(y_pred_softmax)

print(y_pred_tags)

print("prediction is: " , class_names[y_pred_tags])
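A side note on the test code above, as a sketch rather than a confirmed diagnosis: `cv2.imread` returns an H x W x C array in BGR order, and `torch.permute(img_ten, (2,1,0))` turns that into C x W x H, so each channel ends up transposed relative to the C x H x W layout that torchvision models usually receive. Whether this matters depends on the training pipeline, which is not shown in this thread. The snippet uses a small synthetic array in place of the real image, and the division by 255 is an assumption about how the training inputs were scaled.

```python
import numpy as np
import torch

# Synthetic stand-in for the cv2.imread / cv2.resize result: an H x W x C uint8 array.
# (Hypothetical data; the non-square shape makes the axis order visible.)
img = np.arange(4 * 6 * 3, dtype=np.uint8).reshape(4, 6, 3)

t = torch.from_numpy(img)

chw = t.permute(2, 0, 1)  # C x H x W: channels first, rows and columns kept in order
cwh = t.permute(2, 1, 0)  # C x W x H: channels first, but each channel is transposed

print(chw.shape)
print(cwh.shape)

# Assumption: the training inputs were float tensors scaled to [0, 1].
batch = chw.unsqueeze(0).float() / 255.0
print(batch.shape, batch.dtype)
```

If the training images were loaded in RGB order (for example through PIL), converting the test image with `cv2.cvtColor(img, cv2.COLOR_BGR2RGB)` before building the tensor would be another thing to check.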

Could you explain more?

Do I pass a tensor of ones to the model and then compare the results?

Yes, exactly. Passing a static input such as torch.ones using the GPU as well as the CPU and comparing the results before and after saving could give us a hint where the issue in your code might be.

E.g. if the results are equal before saving but differ after loading the model, it could point to an issue of the model loading itself.
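The comparison described here could look roughly like the following sketch. `make_model` is a hypothetical small stand-in for the real network, and an in-memory buffer replaces the checkpoint file, so this only illustrates the procedure and is not the exact code from this thread. The GPU part runs only if CUDA is available.

```python
import io

import torch
import torch.nn as nn


def make_model():
    # Hypothetical small network standing in for the real model.
    return nn.Sequential(
        nn.Conv2d(3, 8, kernel_size=3, padding=1),
        nn.BatchNorm2d(8),
        nn.ReLU(),
        nn.AdaptiveAvgPool2d(1),
        nn.Flatten(),
        nn.Linear(8, 38),
    )


torch.manual_seed(0)
model = make_model().eval()       # eval() before any comparison
x = torch.ones(2, 3, 32, 32)      # static input

with torch.no_grad():
    out_cpu = model(x)

# Save the state_dict and load it into a freshly constructed model.
buffer = io.BytesIO()
torch.save(model.state_dict(), buffer)
buffer.seek(0)
reloaded = make_model().eval()
reloaded.load_state_dict(torch.load(buffer, map_location="cpu"))

with torch.no_grad():
    out_reloaded = reloaded(x)
diff_reload = (out_reloaded - out_cpu).abs().max().item()
print("CPU, before saving vs. after loading:", diff_reload)

# Same weights on the GPU, if one is present.
if torch.cuda.is_available():
    model_gpu = make_model().cuda().eval()
    model_gpu.load_state_dict(model.state_dict())
    with torch.no_grad():
        out_gpu = model_gpu(x.cuda())
    print("CPU vs. GPU:", (out_gpu.cpu() - out_cpu).abs().max().item())
```

A large difference in the first print would point at saving or loading, while a large difference in the second would point at the device itself.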

On CPU before loading:

tensor([[ 1.8160, -6.7850, 5.1841, -3.4524, -7.2334, -1.0573, -8.7593,

-7.5111, -5.3923, -8.3448, -6.9471, -11.9200, -5.1619, -1.3001,

-9.2441, -16.4653, -6.6711, -6.5694, -10.5172, -4.6673, -8.1703,

-8.2364, -14.3871, -14.1084, -0.4286, -5.9760, -8.5350, -9.5102,

-5.8201, -13.8306, -8.8035, -4.9221, -4.7478, -7.7635, -2.6563,

-6.3988, -3.0070, -3.6580]], grad_fn=)

On GPU before loading:

tensor([[ -5.4878, -7.7286, -4.0034, -3.4907, -7.5340, -6.5319, -15.8143,

-7.1648, -12.7269, -14.6478, -14.2912, -14.9882, -18.0514, -5.6059,

-6.8539, -18.3004, -9.7909, -5.0261, -11.3465, -3.6464, -8.3862,

-12.2398, -18.5062, -15.1331, -2.9380, -6.7910, -14.1230, -8.9430,

-15.2894, -10.7991, -0.5428, -7.2093, -4.6537, -6.5283, -7.3082,

-17.5702, -5.5608, -7.3001]], device='cuda:0',

grad_fn=)

Could you post the model definition as well as the output of python -m torch.utils.collect_env, please?

Also, I assume you’ve called model.eval() before running the comparison as previously described?
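To illustrate why `model.eval()` matters for this kind of comparison, here is a minimal sketch with a hypothetical toy network containing a dropout layer. In training mode, dropout samples a new random mask on every forward pass, so two calls on the same input generally disagree. In eval mode, dropout is disabled and repeated calls return identical outputs. Batchnorm layers (as used in ResNet) also behave differently between the two modes.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy network; only the dropout layer matters for this demo.
net = nn.Sequential(nn.Linear(16, 16), nn.Dropout(p=0.5), nn.Linear(16, 4))
x = torch.ones(1, 16)

net.train()
a, b = net(x), net(x)
train_equal = torch.equal(a, b)  # a fresh dropout mask is drawn on each call

net.eval()
with torch.no_grad():
    c, d = net(x), net(x)
eval_equal = torch.equal(c, d)   # dropout is a no-op in eval mode

print("identical outputs in train mode:", train_equal)
print("identical outputs in eval mode:", eval_equal)
```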

Python platform: Windows-10-10.0.19042-SP0

Is CUDA available: True

CUDA runtime version: 10.2.89

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 2060

Nvidia driver version: 466.11

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Thanks, we would still need the model definition, please.

m = models.resnet50(pretrained=True)

num_ftrs = m.fc.in_features

m.fc = nn.Linear(num_ftrs, 38)

Thanks. I cannot reproduce the issue with PyTorch 1.10.2+cu113 on a TitanV and an RTX2080Ti using this code snippet:

import torch

import torch.nn as nn

import torchvision.models as models

m = models.resnet50(pretrained=True).eval()

num_ftrs = m.fc.in_features

m.fc = nn.Linear(num_ftrs, 38)

x = torch.ones(10, 3, 224, 224)

out = m(x)

m.cuda()

x = x.cuda()

out_gpu = m(x)

print((out_gpu.cpu() - out).abs().max())

and get absolute errors of ~1e-6.