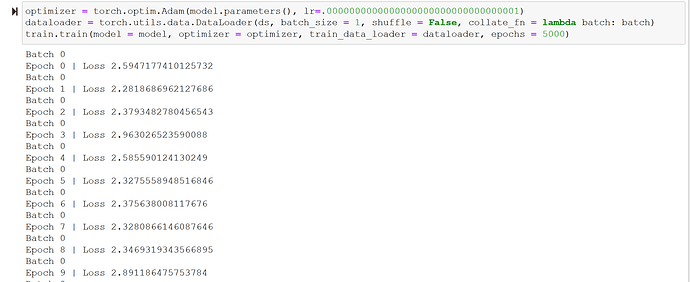

I am trying to overfit my model to a single sample, but once the loss gets near 2, it just jumps around between 2 and 4 and stops improving. I thought my learning rate was too high, but no matter how low I set it, the jumps stay the same (see the plot above).

Given how SGD and Adam work, I expected them to be guaranteed to find parameters that overfit a single sample. What could be causing this?
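For comparison, here is a minimal sanity-check sketch using a hypothetical toy classifier (not the paper's model). When the input and target are fixed, the loss is a deterministic function of the parameters, and Adam typically drives it close to zero within a few hundred steps. The layer sizes, learning rate, and step count are arbitrary assumptions chosen for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy model and ONE fixed (input, target) pair.
model = nn.Sequential(nn.Linear(8, 32), nn.ReLU(), nn.Linear(32, 5))
x = torch.randn(1, 8)
y = torch.tensor([3])

loss_fn = nn.CrossEntropyLoss()
opt = torch.optim.Adam(model.parameters(), lr=1e-2)

history = []
for step in range(300):
    opt.zero_grad()
    loss = loss_fn(model(x), y)  # same input and target every step
    loss.backward()
    opt.step()
    history.append(loss.item())

print(f"first loss {history[0]:.3f}, last loss {history[-1]:.5f}")
```

If a check like this converges but the real model does not, the difference lies in what changes between iterations of the real training loop.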

Details about the model:

I'm implementing this paper: https://arxiv.org/pdf/2002.03230.pdf. It generates a graph step by step:

1. At the first step, select the first component.
2. At every subsequent step, select a new component to attach to an existing component, then select the nodes where it can be attached, and finally select the specific pair of nodes where the two will be joined.
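A minimal sketch of the decision sequence described above, assuming each selection is a single categorical choice sampled from a softmax. The sizes `NUM_COMPONENTS`, `NUM_SITES`, `NUM_PAIRS` and the helpers `sample_index` and `generate` are hypothetical, and random logits stand in for the MLP outputs, which in a real model would be conditioned on the partial graph built so far.

```python
import torch

torch.manual_seed(0)

# Hypothetical sizes, chosen only for illustration.
NUM_COMPONENTS = 6  # size of the component vocabulary
NUM_SITES = 4       # candidate attachment sites per step
NUM_PAIRS = 3       # candidate node pairs per chosen site


def sample_index(logits: torch.Tensor) -> int:
    """Sample one index from softmax(logits)."""
    probs = torch.softmax(logits, dim=-1)
    return int(torch.multinomial(probs, num_samples=1).item())


def generate(num_steps: int) -> list[tuple[str, int]]:
    """Return the sequence of (decision_type, chosen_index) pairs.

    Random logits are placeholders for the MLP outputs.
    """
    decisions = [("first_component", sample_index(torch.randn(NUM_COMPONENTS)))]
    for _ in range(num_steps - 1):
        decisions.append(("component", sample_index(torch.randn(NUM_COMPONENTS))))
        decisions.append(("attach_site", sample_index(torch.randn(NUM_SITES))))
        decisions.append(("node_pair", sample_index(torch.randn(NUM_PAIRS))))
    return decisions


for kind, idx in generate(num_steps=3):
    print(kind, idx)
```

Under this framing, a graph built in `n` steps involves `1 + 3(n - 1)` sampled decisions, each of which contributes one term to the training loss.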

I make each selection by sampling from the softmaxed output of an MLP. To train, I use `NLLLoss` with `reduction='none'` and, each time I make a selection, append `self.loss(mlp_log_probs, target_idxs)` to a `losses` list. At the end, I compute `sum(losses)` and backpropagate.
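A minimal sketch of the loss accumulation described above, assuming one MLP head per decision type and a batch of one. The head names, sizes, and the random context vectors (stand-ins for whatever graph encoding feeds each MLP) are hypothetical. `NLLLoss` expects log-probabilities, so the MLP output goes through `log_softmax`; with `reduction='none'` each term has shape `(1,)`, so the summed loss is reduced to a scalar before `backward()`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

HIDDEN = 16  # hypothetical size of the encoding fed to each head


def mlp(num_choices: int) -> nn.Module:
    """Hypothetical two-layer MLP head that scores `num_choices` options."""
    return nn.Sequential(nn.Linear(HIDDEN, 32), nn.ReLU(), nn.Linear(32, num_choices))


heads = nn.ModuleDict({
    "component": mlp(6),
    "attach_site": mlp(4),
    "node_pair": mlp(3),
})
loss_fn = nn.NLLLoss(reduction="none")  # one loss value per batch element

# One training example: (decision type, context encoding, ground-truth index).
steps = [
    ("component", torch.randn(1, HIDDEN), torch.tensor([2])),
    ("attach_site", torch.randn(1, HIDDEN), torch.tensor([1])),
    ("node_pair", torch.randn(1, HIDDEN), torch.tensor([0])),
]

losses = []
for kind, context, target_idxs in steps:
    mlp_log_probs = torch.log_softmax(heads[kind](context), dim=-1)  # shape (1, num_choices)
    losses.append(loss_fn(mlp_log_probs, target_idxs))              # shape (1,)

total = sum(losses).sum()  # sum(losses) has shape (1,); .sum() makes it a scalar
total.backward()
print(f"total NLL: {total.item():.4f}")
```

Here the contexts and targets are fixed, so for fixed parameters `total` is deterministic; in a full model the contexts depend on the graph built so far.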