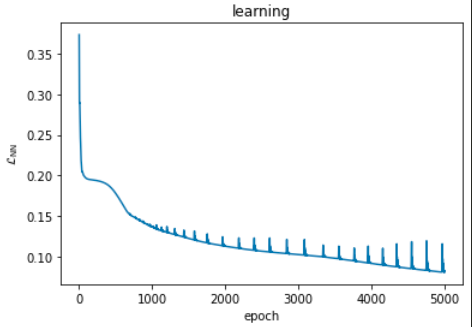

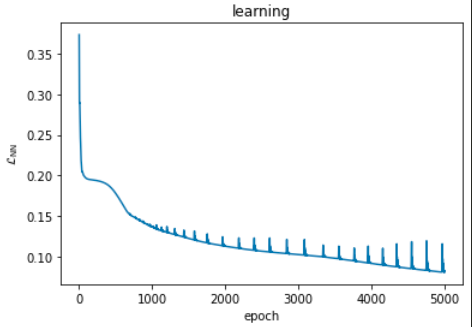

Hello, what should I do when the loss starts jumping all over the place during learning?

Thank you

@ArkadyBogdanov

Greetings,

By "learning" I assume you mean training. Most of the time this happens because the learning rate is too large. Each update overshoots the minimum and lands on the other side of it, so the loss bounces around instead of settling, and it can even start increasing again. A learning rate that is too small has the opposite symptom: the loss decreases smoothly but very slowly.
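A toy illustration of the overshooting effect, assuming plain gradient descent on the one-dimensional loss f(x) = x² (gradient 2x). The function name `run_gradient_descent` and the chosen learning rates are illustrative, not from the original question. For this loss each step multiplies x by (1 - 2·lr): with lr = 0.1 the loss shrinks steadily, with lr = 0.9 x flips sign every step (overshooting) while still converging, and with lr = 1.1 every step overshoots by more than it corrects, so the loss grows.

```python
def run_gradient_descent(lr, x0=5.0, steps=10):
    """Minimise the toy loss f(x) = x**2 with plain gradient descent.

    Returns the loss after each step. This is an illustrative sketch,
    not a real training loop.
    """
    x = x0
    losses = []
    for _ in range(steps):
        grad = 2 * x        # derivative of x**2
        x = x - lr * grad   # equivalent to x * (1 - 2 * lr)
        losses.append(x ** 2)
    return losses


for lr in (0.1, 0.9, 1.1):
    losses = run_gradient_descent(lr)
    print(f"lr={lr}: " + ", ".join(f"{loss:.3g}" for loss in losses[:5]))
```

In a real network the loss surface is far more complicated and the gradients come from noisy mini-batches, but the same mechanism (steps that are too big relative to the curvature) is a common cause of an erratic loss curve.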

Unfortunately, you haven't provided much information (model, optimizer, learning rate, batch size), so I can't draw a firm conclusion. In general, try adjusting your model's hyperparameters, starting with the learning rate.
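One way to act on this advice is to shrink the learning rate when the loss goes up. The sketch below is a hypothetical heuristic (halve the learning rate whenever the loss increases), applied to the same kind of toy loss f(x) = x² rather than a real model; `train_with_lr_backoff` and its parameters are invented for illustration. Frameworks offer similar built-in schedulers, for example PyTorch's `torch.optim.lr_scheduler.ReduceLROnPlateau`, which lowers the learning rate when a monitored metric stops improving.

```python
def train_with_lr_backoff(lr, x0=5.0, steps=20, factor=0.5):
    """Gradient descent on the toy loss f(x) = x**2 that multiplies the
    learning rate by `factor` whenever the loss increases.

    Returns (losses, learning_rates) recorded after each step.
    Hypothetical illustration only.
    """
    x = x0
    prev_loss = x ** 2
    losses, lrs = [], []
    for _ in range(steps):
        x = x - lr * 2 * x          # gradient step on x**2
        loss = x ** 2
        if loss > prev_loss:
            lr *= factor            # loss went up: assume the step was too large
        losses.append(loss)
        lrs.append(lr)
        prev_loss = loss
    return losses, lrs


losses, lrs = train_with_lr_backoff(1.1)
print(f"final loss: {losses[-1]:.3g}, final lr: {lrs[-1]}")
```

Starting from lr = 1.1, which diverges on its own for this loss, the first step increases the loss, the learning rate is halved to 0.55, and training then converges. On a real model you would usually monitor a smoothed or validation loss rather than single noisy steps before reducing the rate.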