I’m working on a 3D landmark detection model.

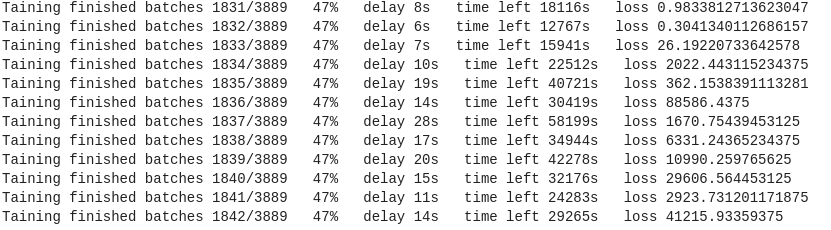

Training works very well for the first 2 epochs, but in the middle of epoch 3 the loss starts to increase sharply and the model’s results become much worse than before.

What could be the reason for this?

    import torch
    from time import time

    # `device` is defined elsewhere in my script

    def train(train_loader, model, optimizer, criterion, controller):
        model.train()
        t = time()
        # running mean of the batch losses, carried over from the controller
        train_loss = controller.current_train_loss

        for i, data in enumerate(train_loader):
            images = data['image']

            # skip batches that contain no image data
            if images.shape[1] == 0:
                print(f"\rTraining finished batches {i+1}/{len(train_loader)} {int(((i+1)/len(train_loader))*100)}%", end='')
                continue

            # flatten the keypoints to shape (batch_size, num_coordinates)
            targets = data['keypoints']
            targets = targets.view(targets.size(0), -1)

            # cast to float32 and move to the training device
            images = images.float().to(device)
            targets = targets.float().to(device)

            optimizer.zero_grad()
            output = model(images)  # [0] for 2d model
            loss = criterion(output, targets)
            loss.backward()
            optimizer.step()

            # incremental mean update; detach() replaces the legacy .data
            train_loss += 1/(i + 1) * (loss.detach() - train_loss)
            # print(targets.shape, output.shape)

            # free GPU memory held by this batch
            del images
            del targets
            torch.cuda.empty_cache()

            # time taken by this batch, used to estimate the time left
            delay = time() - t
            print(f"\rTraining finished batches {i+1}/{len(train_loader)} {int(((i+1)/len(train_loader))*100)}% delay {int(delay)}s time left {int(delay * (len(train_loader)-(i+1)))}s loss {loss.item()}", end='\n')

            # save batch info every 10 batches
            if i % 10 == 0:
                controller.update_batch_info(i, model, optimizer, train_loss)

            t = time()

        return train_loss
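
For context, the `train_loss` update in the loop above is meant to be a running mean of the per-batch losses. A minimal standalone sketch of that update, assuming plain Python floats instead of tensors (the function name `running_mean` and the loss values are made up for illustration, not taken from my training run):

```python
def running_mean(values, start=0.0):
    """Apply the same incremental update as the train_loss line in the loop."""
    mean = start
    for i, v in enumerate(values):
        # at i == 0 this sets mean = v, so the start value is discarded
        mean += 1 / (i + 1) * (v - mean)
    return mean


# hypothetical per-batch losses, with a spike in the last batch
losses = [0.9, 0.5, 0.4, 2.0]
average = running_mean(losses, start=123.0)

# the incremental update matches the ordinary arithmetic mean
assert abs(average - sum(losses) / len(losses)) < 1e-9
```

Because this is an average over all batches seen so far in the epoch, a sudden spike in a single batch is dampened in `train_loss`. The per-batch `loss.item()` that is printed shows the jump directly.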