I'm trying to implement the FaceNet paper myself.

I've run into a problem: my loss is always constant.

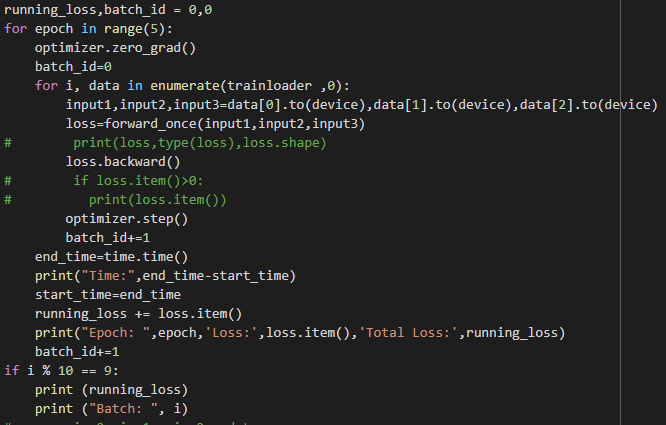

This is my code for training the network:

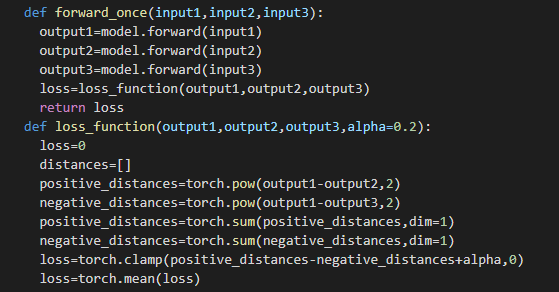

These are my forward pass and loss function:

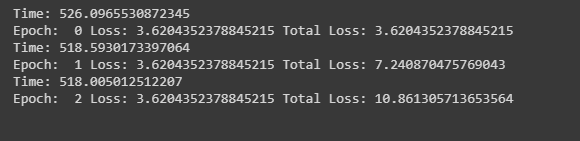
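For reference, a FaceNet-style triplet loss can be sketched as below. This assumes squared Euclidean distances between L2-normalized embeddings and a margin of 0.2; the function name `triplet_loss` and its default are illustrative, not taken from the code in the screenshot.

```python
import torch
import torch.nn.functional as F


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hypothetical FaceNet-style triplet loss over a batch of (N, D) embeddings."""
    # Project embeddings onto the unit hypersphere, as FaceNet does.
    anchor = F.normalize(anchor, p=2, dim=1)
    positive = F.normalize(positive, p=2, dim=1)
    negative = F.normalize(negative, p=2, dim=1)

    # Squared Euclidean distance for each triplet in the batch.
    pos_dist = (anchor - positive).pow(2).sum(dim=1)
    neg_dist = (anchor - negative).pow(2).sum(dim=1)

    # Hinge: only triplets where the negative is not at least `margin`
    # farther away than the positive contribute to the loss.
    return F.relu(pos_dist - neg_dist + margin).mean()
```

One property is relevant to a constant loss: if the network maps every input to the same embedding, both distances are zero and this function returns exactly `margin` for every batch. PyTorch's built-in `torch.nn.TripletMarginLoss` uses unsquared p-norm distances, so its values will differ from this sketch.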

For some reason, the loss stays constant and the model isn't learning: as the output shows, the loss is the same in every epoch.

If anyone knows the solution, please let me know; I need to finish the project on a tight deadline.

Try calling `optimizer.zero_grad()` inside the batch loop.

In your code, you're accumulating gradients across batches, so you need to clear them inside the batch loop rather than in the epoch loop.
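A minimal sketch of that ordering, using a toy linear model and random regression data as stand-ins for the FaceNet model and dataset (both are assumptions, not the original poster's code). The key point is that `optimizer.zero_grad()` runs once per batch, before the forward pass and `loss.backward()`.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def train(model, loader, loss_fn, epochs=5, lr=0.1):
    optimizer = torch.optim.SGD(model.parameters(), lr=lr)
    epoch_losses = []
    for epoch in range(epochs):
        running = 0.0
        for inputs, targets in loader:
            optimizer.zero_grad()             # clear gradients left over from the previous batch
            outputs = model(inputs)           # forward pass
            loss = loss_fn(outputs, targets)  # loss for this batch only
            loss.backward()                   # compute fresh gradients
            optimizer.step()                  # update the weights
            running += loss.item()            # .item() is for logging only
        epoch_losses.append(running / len(loader))
        print(f"epoch {epoch}: loss {epoch_losses[-1]:.4f}")
    return epoch_losses


# Toy data: a linear target, so a working loop should drive the loss down.
torch.manual_seed(0)
x = torch.randn(256, 10)
y = x @ torch.randn(10, 1)
loader = DataLoader(TensorDataset(x, y), batch_size=32, shuffle=True)
model = nn.Linear(10, 1)
losses = train(model, loader, nn.MSELoss())
```

With this placement, each `optimizer.step()` uses only the current batch's gradients; calling `zero_grad()` once per epoch instead lets them pile up across every batch in that epoch.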


Hey there, where exactly did you place the `optimizer.zero_grad()` call in the batch loop? I'm having the same issue, even though I'm already calling `zero_grad()`.
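If `zero_grad()` is already in the batch loop and the loss still doesn't move, one way to narrow it down is to run a single step and check which parameters receive a gradient and which actually change. The helper below, `check_one_step`, is hypothetical (not a PyTorch API), and the toy `nn.Linear` model stands in for the real network.

```python
import torch
from torch import nn


def check_one_step(model, optimizer, loss_fn, inputs, targets):
    """Hypothetical helper: run one step, report params with no gradient or no change."""
    before = {name: p.detach().clone() for name, p in model.named_parameters()}

    optimizer.zero_grad()
    loss = loss_fn(model(inputs), targets)
    if not loss.requires_grad:
        # e.g. the loss was built from .item(), .detach() or NumPy values
        raise RuntimeError("loss is not connected to the model's parameters")
    loss.backward()
    optimizer.step()

    no_grad, unchanged = [], []
    for name, p in model.named_parameters():
        if p.grad is None or torch.all(p.grad == 0):
            no_grad.append(name)
        if torch.equal(before[name], p.detach()):
            unchanged.append(name)
    return no_grad, unchanged


# Example: an optimizer with lr=0.0 computes gradients but never moves the weights.
torch.manual_seed(0)
model = nn.Linear(4, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.0)
no_grad, unchanged = check_one_step(
    model, optimizer, nn.MSELoss(), torch.randn(8, 4), torch.randn(8, 1)
)
print("no gradient:", no_grad)
print("unchanged:", unchanged)
```

Names in `no_grad` point to a broken graph (the loss does not depend on those weights), while names that appear only in `unchanged` point to the optimizer setup, such as a zero learning rate or an optimizer built on a different set of parameters.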