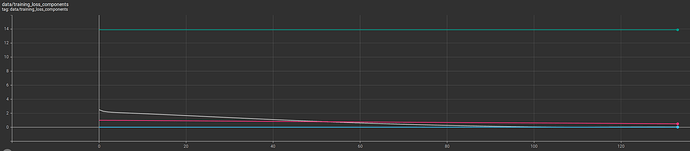

I am trying to move a model from TensorFlow 1 (TF1) to PyTorch. The model is quite involved, and I have been unable to get one portion of it to work. In particular, I have found a function that returns a result in PyTorch that differs by around 10% from the result of the equivalent function in TensorFlow or NumPy.

I believe that this 10% difference is an error that impacts my loss function and prevents the model from learning.

I have isolated the function here and show both the Torch and NumPy ‘equivalents’. Links to the Torch model and to the data needed for the comparison are given below, along with the two code segments. I believe the NumPy result is the better one for two reasons: it agrees with the TensorFlow v1 result to an accuracy of 10e-05, and in the model I’m working on, this function trains successfully when the Torch equivalent does not.

My question is in two parts:

- Why does the NumPy function return better results than the Torch function?

- Is there a way of arranging the Torch function so that its accuracy is closer to that of the NumPy function?

Regards,

Simon

The data needed to run this comparison is saved here:

https://drive.google.com/file/d/1lClIUWuHDGtibSXN2h5X-cyMaalU-cbX/view?usp=sharing

The full torch model is saved in a pickle for use with torch.load:

https://drive.google.com/file/d/1bFJYC5bHme7YmIbqTOjaxXvd-yrKczxH/view?usp=sharing

The data loading code and the two functions:

import pickle

from typing import Dict, Any

import numpy as np

import torch

with open('recovered_autoencoder_network.pkl', 'rb') as f:

recovered_autoencoder_network = pickle.load(f)

# parameters needed for this issue

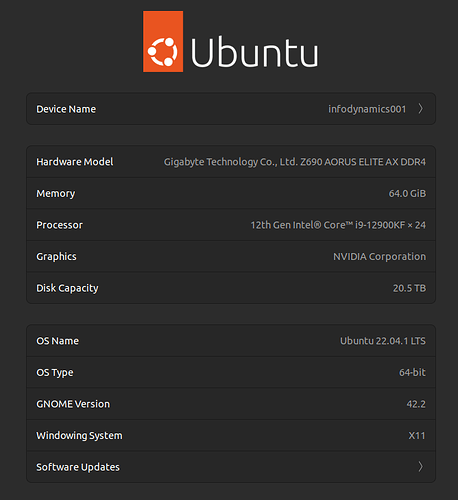

params: Dict[str, Any] = {'weight_precision': torch.float64,

'sindy_precision': torch.float64,

'target_device': 'cuda'}

sindy_autoencoder = torch.load('saved_model.pkl')

sindy_autoencoder.to(params['target_device'])

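```python
# this is a version of the 'problem' function in torch.
def calculate_first_and_second_derivative_with_torch(input_and_derivatives, stack):
    """Propagate an input and its first and second derivatives through `stack`.

    Each layer in `stack` exposes `.weights` and `.bias` tensors; all layers but
    the last use a sigmoid activation, the last one is treated as linear.
    """
    x, dx, ddx = input_and_derivatives
    layer_count = len(stack)
    for i in range(layer_count - 1):
        # forward pass through a sigmoid layer
        x = torch.mm(x, stack[i].weights) + stack[i].bias
        x = torch.sigmoid(x)
        dx_prev = torch.mm(dx, stack[i].weights)
        # sigmoid' = s * (1 - s), sigmoid'' = sigmoid' * (1 - 2 * s)
        sigmoid_first_derivative = torch.mul(x, 1 - x)
        sigmoid_second_derivative = torch.mul(sigmoid_first_derivative, 1 - 2 * x)
        # chain rule for the first and second derivatives
        dx = torch.mul(sigmoid_first_derivative, dx_prev)
        ddx = torch.mul(sigmoid_second_derivative, torch.square(dx_prev)) \
            + torch.mul(sigmoid_first_derivative, torch.mm(ddx, stack[i].weights))
    # final linear layer: the bias does not contribute to the derivatives
    dx = torch.mm(dx, stack[layer_count - 1].weights)
    ddx = torch.mm(ddx, stack[layer_count - 1].weights)
    return dx, ddx
```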

```python
# this is the equivalent 'problem' function in numpy.
def calculate_first_and_second_derivative_with_np(input, dx, ddx, weights, biases):
    dz = dx
    ddz = ddx

    def sigmoid(x):
        return 1 / (1 + np.exp(-x))

    for i in range(len(weights) - 1):
        # forward pass through a sigmoid layer
        input = np.matmul(input, weights[i]) + biases[i]
        input = sigmoid(input)
        dz_prev = np.matmul(dz, weights[i])
        # sigmoid' = s * (1 - s), sigmoid'' = sigmoid' * (1 - 2 * s)
        sigmoid_derivative = np.multiply(input, 1 - input)
        sigmoid_derivative2 = np.multiply(sigmoid_derivative, 1 - 2 * input)
        # chain rule for the first and second derivatives
        dz = np.multiply(sigmoid_derivative, dz_prev)
        ddz = np.multiply(sigmoid_derivative2, np.square(dz_prev)) \
            + np.multiply(sigmoid_derivative, np.matmul(ddz, weights[i]))
    # final linear layer: the bias does not contribute to the derivatives
    dz = np.matmul(dz, weights[-1])
    ddz = np.matmul(ddz, weights[-1])
    return dz, ddz
```
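To decide which result is right without trusting either framework, the analytic derivatives can be compared with central finite differences along the path `z + t*dz + 0.5*t**2*ddz`: by the chain rule, the first and second derivatives of the decoder output along that path at `t = 0` are what the two functions above compute. Below is a minimal NumPy sketch, assuming a decoder of sigmoid hidden layers followed by a linear output layer, as in the functions above; the layer sizes, random data, step `h` and the helper names (`decode`, `derivatives_analytic`, `derivatives_finite_difference`) are made up for illustration.

```python
import numpy as np


def sigmoid(a):
    return 1 / (1 + np.exp(-a))


def decode(z, weights, biases):
    """Hypothetical decoder: sigmoid hidden layers, then a linear output layer."""
    for w, b in zip(weights[:-1], biases[:-1]):
        z = sigmoid(z @ w + b)
    return z @ weights[-1] + biases[-1]


def derivatives_analytic(z, dz, ddz, weights, biases):
    """Same recurrence as calculate_first_and_second_derivative_with_np."""
    for w, b in zip(weights[:-1], biases[:-1]):
        z = sigmoid(z @ w + b)
        dz_prev = dz @ w
        s1 = z * (1 - z)            # sigmoid'
        s2 = s1 * (1 - 2 * z)       # sigmoid''
        ddz = s2 * dz_prev ** 2 + s1 * (ddz @ w)   # uses the old ddz
        dz = s1 * dz_prev
    return dz @ weights[-1], ddz @ weights[-1]


def derivatives_finite_difference(z, dz, ddz, weights, biases, h=1e-4):
    """Central differences along z(t) = z + t*dz + t**2/2*ddz at t = 0."""
    def x_at(t):
        return decode(z + t * dz + 0.5 * t ** 2 * ddz, weights, biases)
    x_plus, x_zero, x_minus = x_at(h), x_at(0.0), x_at(-h)
    dx = (x_plus - x_minus) / (2 * h)
    ddx = (x_plus - 2 * x_zero + x_minus) / h ** 2
    return dx, ddx


# made-up decoder sizes and random float64 data
rng = np.random.default_rng(0)
sizes = [3, 16, 16, 6]
weights = [rng.normal(scale=0.5, size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [rng.normal(scale=0.1, size=n) for n in sizes[1:]]
z, dz, ddz = (rng.normal(size=(10, sizes[0])) for _ in range(3))

dx_a, ddx_a = derivatives_analytic(z, dz, ddz, weights, biases)
dx_f, ddx_f = derivatives_finite_difference(z, dz, ddz, weights, biases)
# relative discrepancy between analytic and finite-difference derivatives
print(np.max(np.abs(dx_a - dx_f)) / np.max(np.abs(dx_a)),
      np.max(np.abs(ddx_a - ddx_f)) / np.max(np.abs(ddx_a)))
```

Pointing `derivatives_finite_difference` at the pickled `v2_in_decoder_weights` and `v2_in_decoder_biases` (cast to float64) would give a reference that depends on neither the NumPy nor the Torch derivative code.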

```python
# NumPy reference result; NumPy computes in the dtype of the pickled arrays
# (float32 if they were saved from TF1 in single precision)
dx_decode_np_test, ddx_decode_np_test = \
    calculate_first_and_second_derivative_with_np(
        recovered_autoencoder_network['v2_in_z'],
        recovered_autoencoder_network['v2_in_dz'],
        recovered_autoencoder_network['v2_in_sindy_predict'],
        recovered_autoencoder_network['v2_in_decoder_weights'],
        recovered_autoencoder_network['v2_in_decoder_biases'])
```

```python
# Here I access the tensors recovered from the saved TensorFlow model and convert them to torch.
converted_stack = [torch.tensor(recovered_autoencoder_network[key],
                                device=torch.device(params['target_device']),
                                dtype=params['sindy_precision'])
                   for key in ('v2_in_z', 'v2_in_dz', 'v2_in_sindy_predict')]

# Here I use the tensors captured from the TensorFlow model (converted to torch)
# with the torch version of the function and the layers from the model.
dx_decode_torch_test, ddx_decode_torch_test = \
    calculate_first_and_second_derivative_with_torch(converted_stack,
                                                     sindy_autoencoder.ψ_decoder_to_x)
```
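The two functions implement the same recurrence, so when the Torch function is fed layers built directly from the same NumPy arrays, rather than the layers of the loaded model, the results should agree to rounding error in float64. A sketch of that check follows, assuming each layer only needs `.weights` and `.bias` attributes (imitated here with `SimpleNamespace`); `stack_from_arrays`, `derivatives_torch` and `derivatives_np` are hypothetical names for compact restatements of the code above, and random arrays stand in for the pickled data. If the real arrays pass this check but `sindy_autoencoder.ψ_decoder_to_x` does not, the difference would point at the model's layers rather than at Torch's arithmetic.

```python
from types import SimpleNamespace

import numpy as np
import torch


def derivatives_torch(input_and_derivatives, stack):
    """Compact restatement of calculate_first_and_second_derivative_with_torch."""
    x, dx, ddx = input_and_derivatives
    for layer in stack[:-1]:
        x = torch.sigmoid(torch.mm(x, layer.weights) + layer.bias)
        dx_prev = torch.mm(dx, layer.weights)
        s1 = x * (1 - x)          # sigmoid'
        s2 = s1 * (1 - 2 * x)     # sigmoid''
        ddx = s2 * torch.square(dx_prev) + s1 * torch.mm(ddx, layer.weights)
        dx = s1 * dx_prev
    return torch.mm(dx, stack[-1].weights), torch.mm(ddx, stack[-1].weights)


def derivatives_np(z, dz, ddz, weights, biases):
    """Compact restatement of calculate_first_and_second_derivative_with_np."""
    for w, b in zip(weights[:-1], biases[:-1]):
        z = 1 / (1 + np.exp(-(z @ w + b)))
        dz_prev = dz @ w
        s1 = z * (1 - z)
        s2 = s1 * (1 - 2 * z)
        ddz = s2 * np.square(dz_prev) + s1 * (ddz @ w)
        dz = s1 * dz_prev
    return dz @ weights[-1], ddz @ weights[-1]


def stack_from_arrays(weights, biases, dtype=torch.float64, device="cpu"):
    """Hypothetical helper: wrap NumPy arrays as layer-like objects."""
    return [SimpleNamespace(weights=torch.tensor(w, dtype=dtype, device=device),
                            bias=torch.tensor(b, dtype=dtype, device=device))
            for w, b in zip(weights, biases)]


# random stand-ins for the pickled v2_in_* arrays
rng = np.random.default_rng(1)
sizes = [3, 16, 16, 6]
weights = [rng.normal(scale=0.5, size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [rng.normal(scale=0.1, size=n) for n in sizes[1:]]
z, dz, ddz = (rng.normal(size=(10, sizes[0])) for _ in range(3))

dx_np, ddx_np = derivatives_np(z, dz, ddz, weights, biases)

device = "cuda" if torch.cuda.is_available() else "cpu"
inputs = [torch.tensor(a, dtype=torch.float64, device=device) for a in (z, dz, ddz)]
dx_t, ddx_t = derivatives_torch(inputs, stack_from_arrays(weights, biases, device=device))

print(np.max(np.abs(dx_np - dx_t.cpu().numpy())),
      np.max(np.abs(ddx_np - ddx_t.cpu().numpy())))
```

With the real data, the random arrays would be replaced by the pickled `v2_in_*` entries, cast to float64 so that both sides run at the same precision.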

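```python
# Here I show the error between the two functions
# (the Torch results are moved to the CPU and converted to NumPy first).
dx_decode_torch_np = dx_decode_torch_test.detach().cpu().numpy()
ddx_decode_torch_np = ddx_decode_torch_test.detach().cpu().numpy()
print(dx_decode_np_test - dx_decode_torch_np, ddx_decode_np_test - ddx_decode_torch_np)
```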
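Printing the raw difference arrays makes a 10% discrepancy hard to quantify. A small NumPy sketch that reduces it to one relative-error number, plus `np.testing.assert_allclose`, which reports how many elements mismatch; `relative_error` is a hypothetical helper and the arrays are made-up examples.

```python
import numpy as np


def relative_error(reference, candidate):
    """Largest absolute difference, scaled by the largest magnitude in `reference`."""
    reference = np.asarray(reference, dtype=np.float64)
    candidate = np.asarray(candidate, dtype=np.float64)
    return np.max(np.abs(reference - candidate)) / np.max(np.abs(reference))


# made-up arrays with a uniform 10% discrepancy
reference = np.array([[1.0, -2.0], [0.5, 4.0]])
candidate = 1.1 * reference
print(f"max relative error: {relative_error(reference, candidate):.1%}")

try:
    # fails on purpose here; the message reports the mismatched elements
    # together with the largest absolute and relative differences
    np.testing.assert_allclose(candidate, reference, rtol=1e-5)
except AssertionError as err:
    print(err)
```

With the arrays from the post, this would be called as `relative_error(dx_decode_np_test, dx_decode_torch_test.detach().cpu().numpy())`, and likewise for the second derivatives.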

```python
# Here I show that the Torch weights in the model feeding the Torch
# function are equivalent to the NumPy arrays feeding the NumPy function
# (the weights were initialized from those arrays after conversion to torch.tensor).
# The largest absolute difference is reported, because a signed sum of
# differences can cancel to zero even when the arrays differ.
print("\n\nWeight and bias comparison for the two models (imported from np source)\n")
for i, layer in enumerate(sindy_autoencoder.ψ_decoder_to_x):
    w_np = recovered_autoencoder_network['v2_in_decoder_weights'][i]
    b_np = recovered_autoencoder_network['v2_in_decoder_biases'][i]
    w_diff = np.max(np.abs(layer.weights.detach().cpu().numpy() - w_np))
    b_diff = np.max(np.abs(layer.bias.detach().cpu().numpy() - b_np))
    print(f"layer {i + 1}: max |weight diff| {w_diff:.5f} "
          f"({w_diff / np.max(np.abs(w_np)):.2%}), "
          f"max |bias diff| {b_diff:.5f} ({b_diff / np.max(np.abs(b_np)):.2%})")
```
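Summing signed differences is a weak test of whether two sets of weights are equal, because positive and negative errors cancel. A small NumPy illustration with a made-up 2x2 matrix: a transposed copy (one hypothetical way weights could get mixed up between the TF and Torch layouts) gives a signed-sum difference of exactly zero, while the maximum absolute difference exposes it.

```python
import numpy as np

w = np.array([[1.0, 2.0], [3.0, 4.0]])
w_wrong = w.T  # hypothetical mix-up: same values, wrong layout

signed_sum = np.sum(w_wrong - w)        # positive and negative errors cancel
max_abs = np.max(np.abs(w_wrong - w))   # exposes the mismatch
print(signed_sum, max_abs, np.array_equal(w, w_wrong))
```

`np.array_equal`, or `np.testing.assert_allclose` with a tight tolerance, also checks shapes, which a summed difference cannot do.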