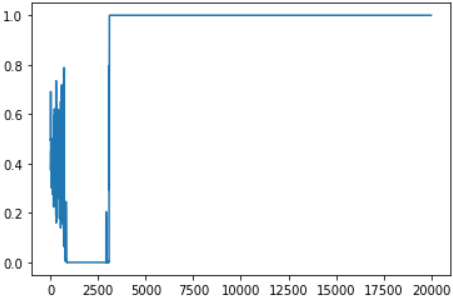

This code implements a Wasserstein GAN. For some reason, the loss plot looks eerily similar to a step function.

```python
import os
import numpy as np
import math
import sys

import torchvision
import torchvision.transforms as transforms
from torchvision.utils import save_image

from torch.utils.data import DataLoader
from torchvision import datasets
from torch.autograd import Variable

import matplotlib.pyplot as plt

import torch.nn as nn
import torch.nn.functional as F
import torch
```

-----------------------------------------------

```python
print('==> Preparing data..')

# Just performing some transformations on the image
transform_train = transforms.Compose([
    transforms.ToTensor()
])

# So we need the CIFAR-10 dataset; PyTorch provides it!
trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform_train)
dataloader = torch.utils.data.DataLoader(trainset, batch_size=50, shuffle=True, num_workers=2)
```

--------------------------------------------

```python
classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

#-----------------

num_epochs = 100
batch_size = 64
lr = 0.00005
latent_dim = 100
img_size = 28
channels = 1
clip_value = 0.01
sample_interval = 400
n_critic = 5
```

--------------------------------------

```python
num_batches = len(dataloader)
rand_num = 9

cuda = torch.cuda.is_available()
Tensor = torch.cuda.FloatTensor if cuda else torch.FloatTensor

num_batches
```

------------------------------------------

```python
class Generator(nn.Module):
    def __init__(self):
        super(Generator, self).__init__()

        # Project the latent vector to a 256 x 4 x 4 feature map
        self.model = nn.Sequential(
            nn.Linear(100, 4*4*256),
            nn.LeakyReLU()
        )

        # Upsample the feature map to a 3-channel image
        self.cnn = nn.Sequential(
            nn.ConvTranspose2d(256, 128, 3, stride=2, padding=0, output_padding=0),
            nn.LeakyReLU(),
            nn.ConvTranspose2d(128, 64, 3, stride=2, padding=1, output_padding=0),
            nn.LeakyReLU(),
            nn.ConvTranspose2d(64, 64, 3, stride=2, padding=2, output_padding=1),
            nn.LeakyReLU(),
            nn.Conv2d(64, 3, 3, stride=1, padding=1),
            nn.Tanh()
        )

    def forward(self, z):
        x = self.model(z)
        x = x.view(-1, 256, 4, 4)
        x = self.cnn(x)
        return x
```

-------------------------------------------

```python
class Discriminator(nn.Module):
    def __init__(self):
        super(Discriminator, self).__init__()

        # Three conv + max-pool stages
        self.cnn = nn.Sequential(
            nn.Conv2d(3, 64, 3, stride=1, padding=1),
            nn.LeakyReLU(),
            nn.MaxPool2d(2, 2),
            nn.Conv2d(64, 128, 3, stride=1, padding=1),
            nn.LeakyReLU(),
            nn.MaxPool2d(2, 2),
            nn.Conv2d(128, 256, 3, stride=1, padding=1),
            nn.LeakyReLU(),
            nn.MaxPool2d(2, 2)
        )

        # Fully connected head producing one score per image
        self.fc = nn.Sequential(
            nn.Linear(4*4*256, 128),
            nn.LeakyReLU(),
            nn.Dropout(0.5),
            nn.Linear(128, 1),
            nn.Sigmoid()
        )

    def forward(self, x):
        x = self.cnn(x)
        x = x.view(-1, 4*4*256)
        x = self.fc(x)
        return x
```

-------------------------------------------------
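As a sanity check on the two networks above, the spatial sizes can be worked out by hand from the layer arguments. The sketch below does that arithmetic in plain Python, assuming dilation 1 and 32x32 CIFAR-10 inputs; the helper names (`conv_transpose_out`, `conv_out`, `pool_out`) are made up for this illustration and are not PyTorch functions.

```python
def conv_transpose_out(size, kernel, stride, padding, output_padding=0):
    # Output size of a transposed convolution along one dimension (dilation 1)
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def conv_out(size, kernel, stride, padding):
    # Output size of a regular convolution along one dimension (dilation 1)
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel, stride):
    # Output size of max pooling along one dimension (no padding)
    return (size - kernel) // stride + 1


# Generator: starts from the 4x4 map produced by x.view(-1, 256, 4, 4)
size = 4
gen_sizes = [size]
for kernel, stride, padding, output_padding in [(3, 2, 0, 0), (3, 2, 1, 0), (3, 2, 2, 1)]:
    size = conv_transpose_out(size, kernel, stride, padding, output_padding)
    gen_sizes.append(size)
size = conv_out(size, 3, 1, 1)  # final Conv2d(64, 3, 3, stride=1, padding=1)
gen_sizes.append(size)

# Discriminator: each stage is Conv2d(k=3, s=1, p=1) followed by MaxPool2d(2, 2)
size = 32
critic_sizes = [size]
for _ in range(3):
    size = pool_out(conv_out(size, 3, 1, 1), 2, 2)
    critic_sizes.append(size)
flat_features = critic_sizes[-1] * critic_sizes[-1] * 256

print(gen_sizes)      # generator sizes per layer
print(critic_sizes)   # discriminator sizes per stage
print(flat_features)  # should match the 4*4*256 used in nn.Linear
```

Under these assumptions the generator goes 4, 9, 17, 32, 32 and the discriminator goes 32, 16, 8, 4, so the generated images match the CIFAR-10 resolution and the `4*4*256` flatten size is consistent.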

```python
discriminator = Discriminator()
generator = Generator()

if cuda:
    generator.cuda()
    discriminator.cuda()

# The classification loss of Discriminator, binary classification, 1 -> real sample, 0 -> fake sample
criterion = nn.BCELoss()

# Optimizers
optimizer_G = torch.optim.RMSprop(generator.parameters(), lr=lr)
optimizer_D = torch.optim.RMSprop(discriminator.parameters(), lr=lr)

# Draw 9 samples from the input distribution as a fixed test set
# Can follow how the generator output evolves
rand_z = Variable(torch.randn(rand_num, 100))
```

-----------------------------------------------------------------------------------

```python
epochs = []
G_Losses = []
D_Losses = []
```

-------------------------

```python
# ----------
#  Training
# ----------

batches_done = 0
for epoch in range(num_epochs):
    for i, (imgs, _) in enumerate(dataloader):

        # Configure input
        real_imgs = Variable(imgs.type(Tensor))

        # ---------------------
        #  Train Discriminator
        # ---------------------

        optimizer_D.zero_grad()

        # Sample noise as generator input
        z = Variable(Tensor(np.random.normal(0, 1, (imgs.shape[0], latent_dim))))

        # Generate a batch of images
        fake_imgs = generator(z).detach()

        # Adversarial loss
        loss_D = -torch.mean(discriminator(real_imgs)) + torch.mean(discriminator(fake_imgs))

        loss_D.backward()
        optimizer_D.step()

        # Clip weights of discriminator
        for p in discriminator.parameters():
            p.data.clamp_(-clip_value, clip_value)

        # Train the generator every n_critic iterations
        if i % n_critic == 0:

            # -----------------
            #  Train Generator
            # -----------------

            optimizer_G.zero_grad()

            # Generate a batch of images
            gen_imgs = generator(z)

            # Adversarial loss
            loss_G = -torch.mean(discriminator(gen_imgs))

            loss_G.backward()
            optimizer_G.step()

            epochs.append(epoch + i/len(dataloader))
            G_Losses.append(-loss_G.item())  # Negative because the loss is actually maximized in WGAN.
            D_Losses.append(-loss_D.item())

            print(
                "[Epoch %d/%d] [Batch %d/%d] [D loss: %f] [G loss: %f]"
                % (epoch, num_epochs, batches_done % len(dataloader), len(dataloader), loss_D.item(), loss_G.item())
            )

        if batches_done % sample_interval == 0:
            save_image(gen_imgs.data[:25], "Wasserstein_cifar10/%d.png" % batches_done, nrow=5, normalize=True)
        batches_done += 1
```

-------------------------------------------------------
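To make it clearer what the plotted values are, here is the loss arithmetic from the loop above on a tiny batch, in plain Python. The scores are made up for illustration and are not real outputs of the network; in the actual loop `loss_G` is also computed after the discriminator update, so its scores would differ from those used for `loss_D`.

```python
def mean(values):
    # Plain-Python stand-in for torch.mean on a 1-D batch of scores
    return sum(values) / len(values)


# Hypothetical discriminator scores, chosen only to keep the arithmetic exact
real_scores = [0.75, 0.25]  # stands in for discriminator(real_imgs)
fake_scores = [0.5, 0.0]    # stands in for discriminator(fake_imgs)

# Same expressions as in the training loop
loss_D = -mean(real_scores) + mean(fake_scores)
loss_G = -mean(fake_scores)

# Values appended to D_Losses and G_Losses (sign flipped)
logged_D = -loss_D
logged_G = -loss_G

print(loss_D, loss_G)      # -0.25 -0.25
print(logged_D, logged_G)  # 0.25 0.25
```

Because the discriminator ends in `nn.Sigmoid()`, every score lies between 0 and 1, so the value logged to `D_Losses` always stays between -1 and 1 and the value logged to `G_Losses` stays between 0 and 1.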