Hi, everyone!

I am running my code on CUDA, but I want to calculate the losses on the CPU. However, I ran into a strange error.

The code I am running:

```python
def do_train(cfg,
             model,
             data_loader,
             class_weights,
             optimizer,
             scheduler,
             checkpointer,
             device,
             arguments,
             args):
    np.set_printoptions(precision=3)
    np.set_printoptions(suppress=True)

    logger = logging.getLogger("CORE")
    logger.info("Start training ...")

    # Set model to train mode
    model.train()

    # Create tensorboard writer
    save_to_disk = dist_util.is_main_process()
    if args.use_tensorboard and save_to_disk:
        summary_writer = SummaryWriter(log_dir=os.path.join(cfg.OUTPUT_DIR, 'tf_logs'))
    else:
        summary_writer = None

    # Prepare to train
    iters_per_epoch = len(data_loader)
    total_steps = iters_per_epoch * cfg.SOLVER.MAX_ITER
    start_epoch = arguments["epoch"]
    logger.info("Iterations per epoch: {0}. Total steps: {1}. Start epoch: {2}".format(iters_per_epoch, total_steps, start_epoch))

    # Create losses
    if class_weights is None:
        cross_entropy_loss = torch.nn.CrossEntropyLoss(reduction='none')
    else:
        cross_entropy_loss = torch.nn.CrossEntropyLoss(reduction='none', weight=torch.tensor(class_weights, dtype=torch.float, device=cfg.MODEL.DEVICE))
    criterion_jaccard = mylosses.JaccardIndex(reduction=False)
    criterion_dice = mylosses.DiceLoss(reduction=False)

    # Create additional transforms
    unstandardize = UnStandardize()
    toCVimage = ToCV2Image()
    totensor = ToTensor()

    # Epoch loop
    for epoch in range(start_epoch, cfg.SOLVER.MAX_ITER):
        arguments["epoch"] = epoch + 1

        # Create progress bar
        print(('\n' + '%10s' * 6) % ('Epoch', 'gpu_mem', 'lr', 'loss', 'jaccard', 'dice'))
        pbar = enumerate(data_loader)
        pbar = tqdm(pbar, total=len(data_loader))

        # Prepare data for tensorboard
        best_samples, worst_samples = [], []

        # Iteration loop
        loss_sum, jaccard_loss_sum, dice_loss_sum = 0.0, 0.0, 0.0
        for iteration, data_entry in pbar:
            global_step = epoch * iters_per_epoch + iteration
            images, labels, masks = data_entry

            # Forward data to GPU
            # images = images.to(device)
            images = images.to(device)
            targets = labels.to(device)
            masks = masks.to(device)

            # Do prediction
            outputs = model(images)

            # Calculate loss
            losses = cross_entropy_loss.forward(outputs, targets.type(torch.long))

            # Apply mask
            losses = losses * masks

            # Calculate metrics
            outputs_classified = torch.softmax(outputs, dim=1).argmax(dim=1)
            outputs_classes, target_classes = [], []
            for class_id in range(outputs.shape[1]):
                if class_id == 0:  # Skip background
                    continue
                outputs_classes.append(torch.where(outputs_classified == class_id, float(class_id), 0.0))
                target_classes.append(torch.where(targets == class_id, float(class_id), 0.0))

            # jaccard_losses = criterion_jaccard.forward(torch.stack(outputs_classes, dim=1), torch.stack(target_classes, dim=1), masks)
            dice_losses = criterion_dice.forward(torch.stack(outputs_classes, dim=1), torch.stack(target_classes, dim=1), masks)

            ################### Best images
            if cfg.TENSORBOARD.BEST_SAMPLES_NUM > 0:
                with torch.no_grad():
                    losses_ = dice_losses.detach().clone()

                    # Select only non-empty labels
                    cnz = torch.count_nonzero(labels, dim=(1, 2))
                    idxs = torch.tensor([i for i, v in enumerate(cnz) if v > 0])
                    if not torch.numel(idxs):
                        continue

                    # Find best metric
                    losses_selected = torch.index_select(losses_.to('cpu'), 0, idxs)
                    # losses_selected = torch.index_select(losses_.to('cpu'), 0, idxs)
                    losses_per_image = torch.mean(losses_selected, dim=(1))
                    max_idx = torch.argmax(losses_per_image).item()
                    best_loss = losses_per_image[max_idx].item()
```
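A sketch of the "Best images" selection at the end of the loop, written so that every tensor involved is explicitly on the CPU before indexing. Assumptions: `dice_losses` is an N x K tensor (per sample, per class) and `labels` is N x H x W, as the `dim=(1, 2)` and `dim=1` reductions in the code suggest; `best_sample_index` is a hypothetical helper name, and mapping the winner back to its position in the batch is an addition not present in the original. It uses `torch.nonzero` instead of the list comprehension, which always yields an int64 index tensor (even when empty) and avoids a Python loop over the batch.

```python
import torch


def best_sample_index(dice_losses: torch.Tensor, labels: torch.Tensor):
    """Return (batch index, mean value) of the sample with the largest mean
    per-class value among samples with a non-empty label map, or None.

    dice_losses: N x K tensor (per sample, per class), any device
    labels:      N x H x W label maps, any device
    """
    with torch.no_grad():
        # Bring both inputs to the CPU so the indices and the data share a device.
        losses_ = dice_losses.detach().cpu()
        cnz = torch.count_nonzero(labels.cpu(), dim=(1, 2))

        # int64 indices of non-empty label maps (an empty int64 tensor if none).
        idxs = torch.nonzero(cnz > 0).flatten()
        if idxs.numel() == 0:
            return None

        losses_selected = torch.index_select(losses_, 0, idxs)
        losses_per_image = losses_selected.mean(dim=1)
        max_idx = torch.argmax(losses_per_image).item()

        # max_idx indexes the selected subset; map it back to the batch.
        return idxs[max_idx].item(), losses_per_image[max_idx].item()


dice_losses = torch.tensor([[0.1, 0.3], [0.9, 0.7], [0.5, 0.5]])
labels = torch.zeros(3, 2, 2, dtype=torch.long)
labels[0, 0, 0] = 1
labels[2, 1, 1] = 2  # sample 1 keeps an all-zero (empty) label map
print(best_sample_index(dice_losses, labels))  # (2, 0.5)
```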

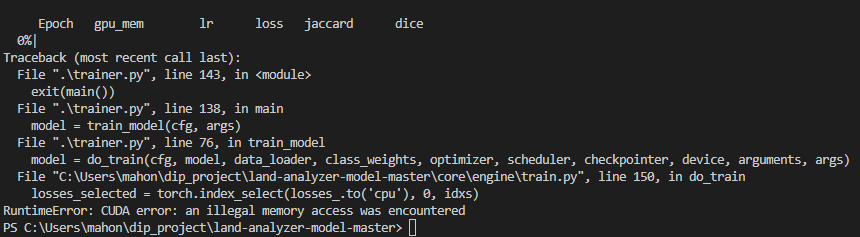
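A minimal sketch of the setup described at the top of the post: the forward pass runs on CUDA (falling back to the CPU if no GPU is present) and the loss is computed on the CPU. Assumptions: a 1x1 `nn.Conv2d` stands in for the real model, and the shapes and class-weight values are made up. The point it illustrates is that the logits, the targets, the mask and the `weight` tensor of `nn.CrossEntropyLoss` all need to be on the device where the loss is computed.

```python
import torch
import torch.nn as nn

num_classes = 3
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Hypothetical stand-in for the real model: a 1x1 convolution producing logits.
model = nn.Conv2d(in_channels=1, out_channels=num_classes, kernel_size=1).to(device)

# The loss runs on the CPU, so its class-weight tensor is created on the CPU too.
class_weights = [0.2, 1.0, 1.0]  # made-up values
cross_entropy_loss = nn.CrossEntropyLoss(
    reduction="none",
    weight=torch.tensor(class_weights, dtype=torch.float, device="cpu"),
)

images = torch.randn(2, 1, 4, 4)                   # N x 1 x H x W
labels = torch.randint(0, num_classes, (2, 4, 4))  # N x H x W, values in [0, C)
masks = torch.ones(2, 4, 4)                        # per-pixel mask

outputs = model(images.to(device))  # forward pass on the GPU (if available)

# Copy the logits to the CPU; autograd records the copy, so gradients
# still flow back to the parameters on `device`.
losses = cross_entropy_loss(outputs.cpu(), labels.long())  # N x H x W
losses = losses * masks
loss = losses.mean()
loss.backward()

print(loss.device)  # cpu
```

Because `.cpu()` is differentiable, `loss.backward()` still fills `model.weight.grad` on the GPU; only the loss arithmetic happens on the CPU.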

Error:

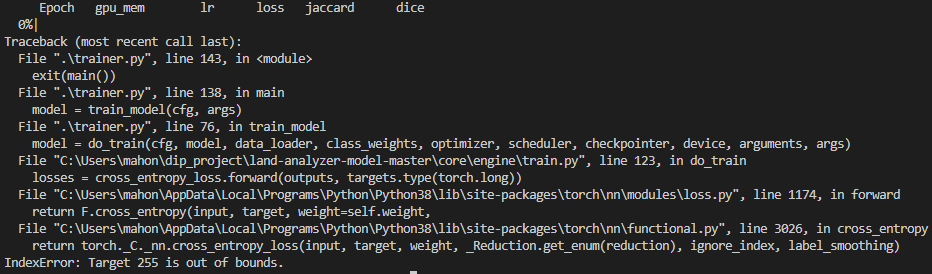
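CUDA kernels run asynchronously, so an error raised inside a kernel (for example a device-side assert from an out-of-range index) may be reported at a later operation that synchronizes with the GPU, such as a `.to('cpu')` copy or `.item()`, rather than at the line that launched the kernel. For debugging, PyTorch's CUDA documentation describes forcing synchronous launches with the `CUDA_LAUNCH_BLOCKING=1` environment variable. A sketch, assuming the variable is set at the very top of the training script before CUDA is initialized (on Windows it can also be set in the shell with `set CUDA_LAUNCH_BLOCKING=1` before starting Python); the tiny cross-entropy call only stands in for the real training step:

```python
import os

# Force synchronous CUDA kernel launches so errors surface at the failing op.
# This must happen before CUDA is initialized, so do it before importing torch.
os.environ["CUDA_LAUNCH_BLOCKING"] = "1"

import torch  # noqa: E402  (imported after setting the environment variable)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

logits = torch.randn(4, 3, device=device)           # 4 samples, 3 classes
targets = torch.tensor([0, 1, 2, 0], device=device)
loss = torch.nn.functional.cross_entropy(logits, targets)
print(loss.item())
```

Synchronous launches slow training down noticeably, so this setting is meant for debugging only.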

When I ran the code on the CPU instead, the error was different. This time it reports that the problem is here:

```python
# Calculate loss
losses = cross_entropy_loss.forward(outputs, targets.type(torch.long))
```

Error:

I'm stuck on this problem. Does anyone have an idea what is going on?

Thanks for any suggestions.

System information:

- OS: Windows 10
- GPU: NVIDIA RTX 3060
- Driver version: 528.33
- Python version: 3.8
- CUDA version: 12.0
- torch version: 1.13.1+cu117
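Regarding the CPU run failing at the cross-entropy line: the error text is not included, so this is only one possible cause. `nn.CrossEntropyLoss` expects every target value to be either a class index in `[0, C)`, where C is `outputs.shape[1]`, or equal to its `ignore_index` (default -100). Label maps often contain extra values such as 255 for "void" pixels; on the CPU such a value raises an error at the loss call itself, while on CUDA it typically triggers a device-side assert instead. The helper `check_targets` is hypothetical, and the 255 label is made up for illustration.

```python
import torch
import torch.nn as nn


def check_targets(targets: torch.Tensor, num_classes: int, ignore_index: int = -100) -> None:
    """Raise ValueError if a target is neither a class index in [0, num_classes)
    nor equal to ignore_index, the values nn.CrossEntropyLoss accepts."""
    valid = ((targets >= 0) & (targets < num_classes)) | (targets == ignore_index)
    if not bool(valid.all()):
        bad = torch.unique(targets[~valid])
        raise ValueError(f"target values outside [0, {num_classes}): {bad.tolist()}")


num_classes = 3
outputs = torch.randn(2, num_classes, 4, 4)         # N x C x H x W logits
targets = torch.randint(0, num_classes, (2, 4, 4))  # N x H x W class indices
targets[0, 0, 0] = 255                              # made-up "void" label

try:
    check_targets(targets, num_classes)
except ValueError as err:
    print(err)  # target values outside [0, 3): [255]

# One option: tell the loss to skip that label instead of remapping it.
criterion = nn.CrossEntropyLoss(reduction="none", ignore_index=255)
check_targets(targets, num_classes, ignore_index=255)  # passes now
losses = criterion(outputs, targets)
print(losses[0, 0, 0].item())  # 0.0 (ignored pixels contribute zero loss)
```

Multiplying `losses` by `masks` afterwards does not help with this, because the out-of-range index is hit inside the loss before the mask is applied. If `cfg.MODEL.DEVICE` still points at CUDA during the CPU run, a device mismatch between the `weight` tensor and `outputs` at the same line is another candidate.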