Could you describe the issue and use case a bit more?

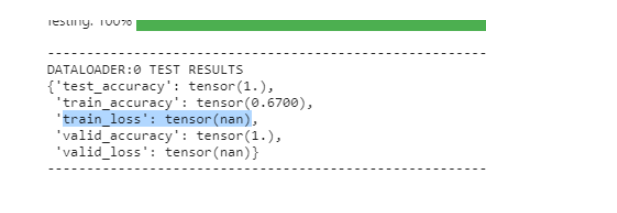

In particular, it would be interesting to see whether the loss value was already invalid before training finished, which loss function you are using, etc.

I figured it out. The cause was null values that I had kept in the data; because of them, the learning rate was set to zero.
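
For anyone hitting the same thing, here is a minimal sketch of how the nulls could be filtered out before training. It assumes the data is available as NumPy arrays with a 1-D target; `drop_rows_with_nulls` is a hypothetical helper of mine, not part of any library, and the arrays are made-up example values.

```python
import numpy as np

def drop_rows_with_nulls(features, targets):
    """Keep only rows where every feature and the target are finite (no NaN/inf)."""
    features = np.asarray(features, dtype=float)
    targets = np.asarray(targets, dtype=float)
    # A row is valid only if all of its features and its target are finite numbers.
    valid = np.isfinite(features).all(axis=1) & np.isfinite(targets)
    return features[valid], targets[valid]

# Made-up example data: the second row has a null feature, the third a null target.
X = np.array([[1.0, 2.0], [np.nan, 3.0], [4.0, 5.0]])
y = np.array([0.5, 1.0, np.nan])

X_clean, y_clean = drop_rows_with_nulls(X, y)
print(X_clean.shape, y_clean.shape)
```

Whether dropping rows or imputing values is the better choice depends on the dataset; the point is only that the nulls should not reach the training loop.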