I have the classifier model below, and I want to pass it my input, which has 39 features, as

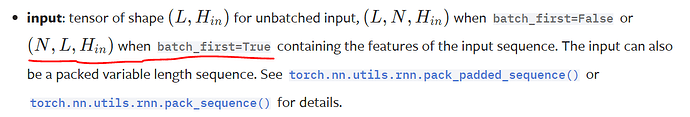

Shape: `torch.rand(1, 1, 39)`. It works, but according to the documentation it should be (1, 39, hidden size).

Why does the (1, 1, 39) input work in this case?

What should I base the choice of the LSTM's hidden size on?

    import torch
    import torch.nn as nn


    class SequenceModel(nn.Module):
        def __init__(self, n_features, n_classes=5, n_hidden=256, n_layers=3):
            super().__init__()
            self.lstm = torch.nn.LSTM(input_size=n_features,
                                      hidden_size=n_hidden,
                                      num_layers=n_layers,
                                      batch_first=True,
                                      dropout=0.3)
            self.classifier = torch.nn.Linear(n_hidden, n_classes)

        def forward(self, x):
            self.lstm.flatten_parameters()
            # hidden has shape (num_layers, batch, n_hidden)
            _, (hidden, _) = self.lstm(x)
            out = hidden[-1]  # get the last state of the last layer
            return self.classifier(out)

    # Print sample output
    c = torch.nn.CrossEntropyLoss()
    sample = next(iter(train_loader))
    out = SequenceModel(39, 5)(sample["sequence"])
    # print("Model output:", out.cpu().detach().numpy())  # Change batch size
    print("loss:", c(out, sample["label"]))
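
For reference, here is a minimal sketch for checking the shapes involved. It assumes the same sizes as the model above (39 features, hidden size 256, 3 layers); the variable names and the second, longer batch are only for illustration. As far as I can tell from the `torch.nn.LSTM` documentation, with `batch_first=True` the input layout is (batch, sequence length, input size), so the hidden size only shows up in the outputs, not in the input.

```python
import torch
import torch.nn as nn

# Assumed sizes, mirroring the model in the question.
n_features, n_hidden, n_layers = 39, 256, 3

lstm = nn.LSTM(input_size=n_features, hidden_size=n_hidden,
               num_layers=n_layers, batch_first=True)

# With batch_first=True the input layout is (batch, seq_len, input_size).
x = torch.rand(1, 1, n_features)  # 1 sequence, 1 time step, 39 features
output, (h_n, c_n) = lstm(x)

print(output.shape)   # (batch, seq_len, hidden_size)
print(h_n.shape)      # (num_layers, batch, hidden_size)
print(h_n[-1].shape)  # (batch, hidden_size): what the Linear classifier receives

# A longer, batched input (illustrative sizes): 4 sequences of 10 time steps each.
x_long = torch.rand(4, 10, n_features)
output_long, (h_n_long, _) = lstm(x_long)
print(output_long.shape, h_n_long.shape)
```

If that reading is right, `torch.rand(1, 1, 39)` is accepted because it is one sequence with a single time step of 39 features, and `n_hidden` is a free hyperparameter that only changes the size of the LSTM's output and states.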