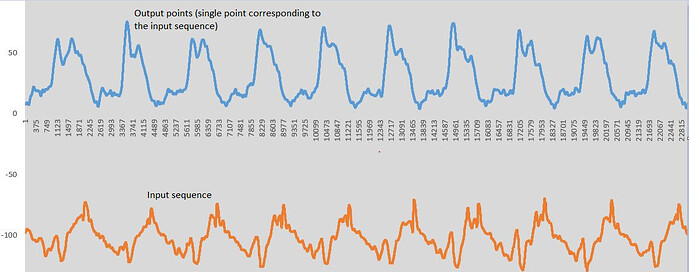

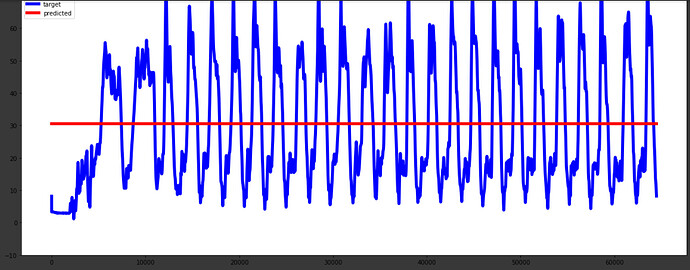

I am using an LSTM network on periodic data: the training input is a sequence of angular data, and the output is a single value corresponding to that sequence. The output is not similar to the input, but it is related to the input sequence in some way. The network only learns a horizontal line at the average of all the output values. I have tried most of the things I could think of: varying the batch size, trying different learning rates, changing the hidden size, using different optimizers, using different sequence lengths (10 to 400), and scaling the data.
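One way to confirm that the network has collapsed to the mean is to compare its loss with the loss of a constant predictor. For MSE, always predicting the mean of the targets gives a loss equal to their population variance, so a training loss that plateaus near that value points to a flat-line solution. A minimal sketch; the helper name `constant_mean_mse` and the example values are hypothetical. Note that `train()` in the code below returns the *sum* of the per-batch losses, so it would need to be divided by the number of batches before comparing.

```python
import torch
import torch.nn.functional as F

def constant_mean_mse(targets: torch.Tensor) -> float:
    """MSE of a model that always predicts the mean of `targets` (hypothetical helper)."""
    targets = targets.float().reshape(-1)
    # Every "prediction" is the same number: the mean of all targets
    mean_pred = torch.full_like(targets, targets.mean().item())
    return F.mse_loss(mean_pred, targets).item()

# Hypothetical target values, for illustration only
y = torch.tensor([1.0, 2.0, 3.0, 4.0])
print(constant_mean_mse(y))                 # 1.25
print(((y - y.mean()) ** 2).mean().item())  # 1.25 (population variance)
```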

I printed the weights (before and after training) and the gradient values. The weights do change, although some of the values stay the same, and the gradients become very small as training proceeds, which ultimately leads to the flat line.
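Instead of printing whole weight tensors, it can be easier to log one number per parameter: the L2 norm of its gradient, taken right after `loss.backward()`. A minimal sketch, assuming a small stand-in `nn.LSTM` and random data; the helper `grad_norms` is hypothetical.

```python
import torch
import torch.nn as nn

def grad_norms(model: nn.Module) -> dict:
    """L2 norm of each parameter's gradient; call after loss.backward()."""
    return {
        name: p.grad.norm().item()
        for name, p in model.named_parameters()
        if p.grad is not None
    }

# Small stand-in model and random data, for illustration only
torch.manual_seed(0)
lstm = nn.LSTM(input_size=1, hidden_size=2, num_layers=1, batch_first=True)
x = torch.randn(8, 40, 1)        # (batch, seq_len, features)
_, (h_n, _) = lstm(x)
loss = h_n.pow(2).mean()         # arbitrary scalar loss just to get gradients
loss.backward()

for name, norm in grad_norms(lstm).items():
    print(f"{name}: {norm:.3e}")
```

In the `train()` function, calling `grad_norms(net)` between `loss.backward()` and `optimizer.step()` every few batches would show whether all gradients shrink together or only some of them.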

Here is the network architecture; it is very simple:

```python
import torch
import torch.nn as nn
import torch.optim as optim

# Neural Net Class
class LSTM(nn.Module):
    def __init__(self, num_classes=1, input_size=1, hidden_size=2, num_layers=1, seq_length=40):
        super(LSTM, self).__init__()
        self.num_classes = num_classes
        self.num_layers = num_layers
        self.input_size = input_size
        self.hidden_size = hidden_size
        self.seq_length = seq_length
        self.lstm = nn.LSTM(input_size=input_size, hidden_size=hidden_size,
                            num_layers=num_layers, batch_first=True)
        self.fc = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        # x: (batch, seq_length, input_size) because batch_first=True
        # Zero initial hidden and cell states: (num_layers, batch, hidden_size)
        h_0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size, device=x.device)
        c_0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size, device=x.device)

        # Propagate input through LSTM; h_out is the final hidden state of each layer
        ou, (h_out, _) = self.lstm(x, (h_0, c_0))

        # (num_layers, batch, hidden_size) -> (num_layers * batch, hidden_size)
        h_out = h_out.view(-1, self.hidden_size)
        out = self.fc(h_out)
        return out

# num_classes, input_size, hidden_size, num_layers, seq_length, learning_rate,
# epochs and trainloader are defined elsewhere (not shown)
net = LSTM(num_classes, input_size, hidden_size, num_layers, seq_length)  # Neural Net
criterion = torch.nn.MSELoss()  # Loss Criterion
optimizer = torch.optim.Adam(net.parameters(), lr=learning_rate)  # Optimizer
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=20, gamma=0.6)  # lr scheduler

# Training function
def train(epoch):
    loss_batch = 0
    for batch_idx, (inputs, targets) in enumerate(trainloader):
        # print(inputs.shape)
        # print(targets.shape)
        inputs = torch.squeeze(inputs, 0)
        optimizer.zero_grad()
        out = net(inputs)
        loss = criterion(out, targets)
        loss.backward()
        # print(net.lstm.weight_hh_l0.grad)
        optimizer.step()
        scheduler.step()  # called once per batch, so the lr is multiplied by 0.6 every 20 batches
        loss_batch += loss.item()
    return loss_batch  # sum of the per-batch losses for this epoch

# Training loop
for epoch in range(0, epochs):
    loss = train(epoch)
    if epoch % 2 == 0:
        print(loss)
```
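A quick shape check is worth doing on this model. With `num_layers=1`, `h_out.view(-1, hidden_size)` is the same as taking `h_n[-1]`, so `out` has shape `(batch, 1)`. If `targets` comes out of the loader with shape `(batch,)` instead (the post does not show its shape), `MSELoss` broadcasts the two to `(batch, batch)` and compares every prediction with every target; PyTorch emits a `UserWarning` about the size mismatch in that case. The loss is then minimized by predicting the batch mean for every sample, which would look exactly like a flat line. A minimal sketch with random stand-in data:

```python
import torch
import torch.nn as nn

batch, seq_len, hidden_size = 4, 40, 2
lstm = nn.LSTM(input_size=1, hidden_size=hidden_size, num_layers=1, batch_first=True)
fc = nn.Linear(hidden_size, 1)

x = torch.randn(batch, seq_len, 1)  # batch_first: (batch, seq_len, input_size)
_, (h_n, _) = lstm(x)               # h_n: (num_layers, batch, hidden_size)
out = fc(h_n[-1])                   # last layer's final hidden state -> (batch, 1)
print(out.shape)                    # torch.Size([4, 1])

# Stand-in targets with shape (batch,) instead of (batch, 1)
targets = torch.randn(batch)
diff = out - targets                # broadcasts to (batch, batch)
print(diff.shape)                   # torch.Size([4, 4])

# Reshaping the targets to match the output avoids the broadcast
loss = nn.MSELoss()(out, targets.view(-1, 1))
```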

The resulting output is just a flat line.

The data points were collected at a very high frequency: adjacent values differ by at most 0.01, and consecutive values can repeat in the sequence.
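With changes of at most 0.01 per sample, a window of 40 samples can move by at most 0.4 and may cover only a small part of one period. One option worth trying is to subsample the series before cutting it into windows, so that each window of `seq_len` points spans `(seq_len - 1) * step + 1` raw samples. A minimal sketch, assuming the raw data is a single 1-D tensor; the function `make_windows`, its `step` parameter and the sine signal are hypothetical.

```python
import torch

def make_windows(series: torch.Tensor, seq_len: int, step: int = 1) -> torch.Tensor:
    """Cut a 1-D series into overlapping windows for a batch_first LSTM.

    Keeps every `step`-th sample first, then slides a window of `seq_len`
    points forward one (subsampled) point at a time.
    Returns a tensor of shape (num_windows, seq_len, 1).
    """
    sub = series[::step]
    windows = sub.unfold(0, seq_len, 1)  # (num_windows, seq_len)
    return windows.unsqueeze(-1)         # add the input_size=1 feature dimension

# Hypothetical slowly varying signal: consecutive samples differ by <= 0.01
t = torch.arange(1000, dtype=torch.float32)
series = torch.sin(t * 0.01)
print(make_windows(series, seq_len=40).shape)           # torch.Size([961, 40, 1])
print(make_windows(series, seq_len=40, step=10).shape)  # torch.Size([61, 40, 1])
```

The targets would need to be subsampled the same way so that each window still lines up with its output value.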

Am I doing something wrong with the network, or is this kind of architecture unsuitable? Should I try a different type of neural network or loss function?