Dear all,

I'm trying to run the Mask R-CNN inference demo with a pre-trained model on an image from the COCO dataset.

I'm following this repository: GitHub - multimodallearning/pytorch-mask-rcnn

I replaced the C++ extensions that the repository builds and calls from Python for NMS and RoIAlign with torchvision.ops.nms() and torchvision.ops.roi_align().

My configuration: Python 3.8, torch 1.9.1+cu111, torchvision 0.10.1+cu111.

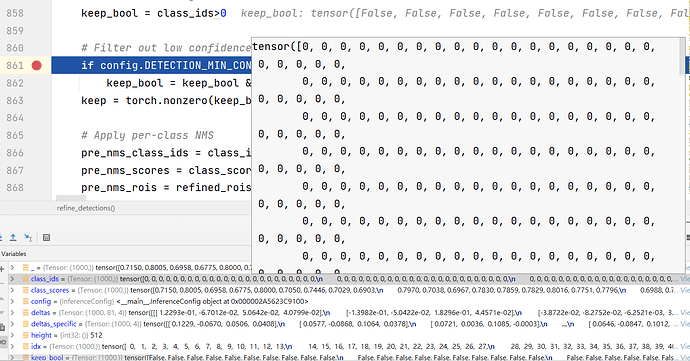

In the "FPN head classifier" stage, the mrcnn class probabilities of every detected ROI always have background as the top class.

So the next step, which refines the ROIs (take the class probability of the top class of each ROI, then filter out background boxes), fails, because the top class of every ROI is background.
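To confirm that every ROI really collapses to background (rather than foreground scores being merely low), a quick check on the classifier output can help. This is a minimal sketch, assuming `mrcnn_class` is the 2-D [num_rois, num_classes] softmax tensor passed into refine_detections, with class 0 as background; `summarize_class_predictions` is a hypothetical helper, not part of the repository.

```python
import torch


def summarize_class_predictions(mrcnn_class: torch.Tensor):
    """Summarize classifier-head probabilities for a set of ROIs.

    Assumes `mrcnn_class` has shape [num_rois, num_classes], holds softmax
    probabilities, and uses class 0 for background.
    Returns (top class id per ROI, ROI count per class,
             indices of foreground ROIs, highest foreground probability).
    """
    _, class_ids = mrcnn_class.max(dim=1)  # top class of each ROI
    counts = torch.bincount(class_ids, minlength=mrcnn_class.shape[1])
    foreground_idx = torch.nonzero(class_ids > 0, as_tuple=False).squeeze(1)
    # If this stays tiny for a clearly visible object, the features fed to
    # the classifier are suspect (e.g. the RoIAlign inputs), not the threshold.
    max_foreground_prob = mrcnn_class[:, 1:].max().item()
    return class_ids, counts, foreground_idx, max_foreground_prob
```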

The full output, with the warnings and the traceback, is:

D:\Ru\pytorch-mask-rcnn\test\model_1028.py:1687: UserWarning: volatile was removed and now has no effect. Use with torch.no_grad(): instead.

molded_images = Variable(molded_images, volatile=True)

C:\Users\admin\anaconda3\envs\torch\lib\site-packages\torch\nn\functional.py:3487: UserWarning: nn.functional.upsample is deprecated. Use nn.functional.interpolate instead.

warnings.warn(“nn.functional.upsample is deprecated. Use nn.functional.interpolate instead.”)

Traceback (most recent call last):

File “D:/Ru/pytorch-mask-rcnn/test/demo_1028.py”, line 99, in

results = model.detect([image])

File “D:\Ru\pytorch-mask-rcnn\test\model_1028.py”, line 1690, in detect

detections, mrcnn_mask = self.predict([molded_images, image_metas], mode=‘inference’)

File “D:\Ru\pytorch-mask-rcnn\test\model_1028.py”, line 1766, in predict

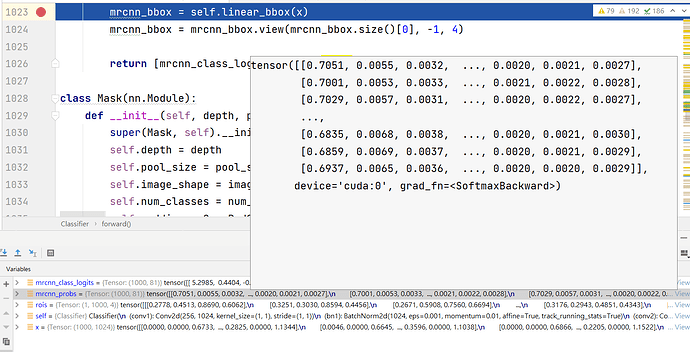

detections = detection_layer(self.config, rpn_rois, mrcnn_class, mrcnn_bbox, image_metas)

File “D:\Ru\pytorch-mask-rcnn\test\model_1028.py”, line 919, in detection_layer

detections = refine_detections(rois, mrcnn_class, mrcnn_bbox, window, config)

File “D:\Ru\pytorch-mask-rcnn\test\model_1028.py”, line 890, in refine_detections

keep = intersect1d(keep, nms_keep)

UnboundLocalError: local variable ‘nms_keep’ referenced before assignment

What do you think? What’s happening?
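Unrelated to the crash, the two UserWarnings at the top of the output come from APIs deprecated in recent PyTorch versions. A minimal sketch of the replacements, assuming the FPN top-down path calls `F.upsample(..., scale_factor=2)` as in the original repository; `top_down_merge` and `run_inference` are hypothetical names.

```python
import torch
import torch.nn.functional as F


def top_down_merge(upper: torch.Tensor, lateral: torch.Tensor) -> torch.Tensor:
    """FPN-style top-down merge: upsample `upper` by 2 and add the lateral map.

    F.interpolate replaces the deprecated F.upsample with the same arguments.
    """
    return F.interpolate(upper, scale_factor=2, mode="nearest") + lateral


def run_inference(model: torch.nn.Module, images: torch.Tensor) -> torch.Tensor:
    """Forward pass without building an autograd graph.

    `with torch.no_grad():` replaces the removed Variable(..., volatile=True).
    """
    model.eval()
    with torch.no_grad():
        return model(images)
```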