When I run my model on 1 GPU with batch size 1, it runs okay,

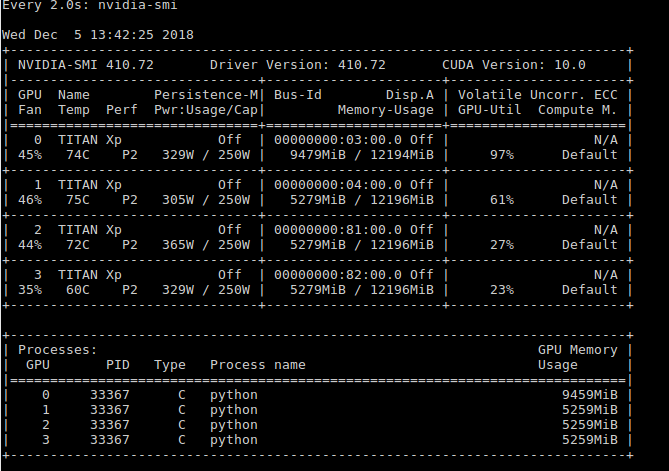

but when I use `DataParallel` with 4 GPUs and batch size 4, it becomes extremely slow. I also notice that memory is distributed unequally across the 4 GPUs (the first GPU uses far more than the others).
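To make the comparison concrete, here is a minimal sketch of how the timing and per-GPU memory could be measured. The model `TinyNet`, the input shape, and the helper names `time_forward` and `per_gpu_peak_memory_mb` are all hypothetical placeholders, not my actual code. It assumes PyTorch and falls back to the CPU if no GPU is visible.

```python
import time

import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical stand-in model; the real model is not shown in this post."""

    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(nn.Linear(128, 256), nn.ReLU(), nn.Linear(256, 10))

    def forward(self, x):
        return self.net(x)


def sync_all_gpus():
    """Wait for queued work on every visible GPU (does nothing on CPU-only machines)."""
    for i in range(torch.cuda.device_count()):
        torch.cuda.synchronize(i)


def per_gpu_peak_memory_mb():
    """Peak allocated memory per visible GPU in MiB (empty list without GPUs)."""
    return [torch.cuda.max_memory_allocated(i) / 2**20
            for i in range(torch.cuda.device_count())]


def time_forward(model, batch, n_iters=10):
    """Average seconds per forward pass, synchronizing so GPU work is counted."""
    with torch.no_grad():
        model(batch)  # warm-up pass, excluded from the timing
        sync_all_gpus()
        start = time.perf_counter()
        for _ in range(n_iters):
            model(batch)
        sync_all_gpus()
    return (time.perf_counter() - start) / n_iters


device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
# DataParallel splits the batch along dim 0 across the visible GPUs.
model = nn.DataParallel(TinyNet().to(device))
batch = torch.randn(4, 128, device=device)  # batch size 4, as in the slow case

seconds_per_iter = time_forward(model, batch)
memory_mb = per_gpu_peak_memory_mb()
print(f"avg forward time: {seconds_per_iter:.6f} s")
print(f"peak memory per GPU (MiB): {memory_mb}")
```

Running the same script with a single visible GPU and batch size 1 would give the baseline to compare against. Note that `nn.DataParallel` gathers outputs on the first device by default, so some extra memory use on GPU 0 is expected.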

Can anyone please help me fix this issue, or suggest how I should look for the cause?