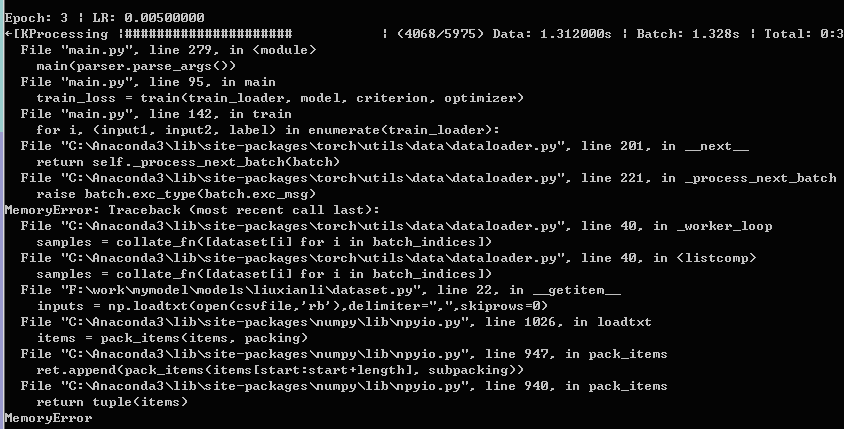

Memory consumption keeps increasing during training. Over several runs, training got as far as the third epoch and then raised a MemoryError. My training dataset is stored as .csv files in the TrainData folder. Each input to the net is a pair of samples plus the corresponding label.
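
To make the file layout concrete, here is a minimal sketch. It assumes each .csv file holds two flattened 33×33×33 samples followed by a single label value, which is what the slicing in dataset.py implies. The helper name `split_record` and the synthetic record are only for illustration and are not part of my project.

```python
SIDE = 33
VOXELS = SIDE ** 3  # 33 * 33 * 33 values per flattened sample


def split_record(values):
    """Split one flat record into (sample1, sample2, label)."""
    expected = 2 * VOXELS + 1  # two samples plus one label
    if len(values) != expected:
        raise ValueError(f"expected {expected} values, got {len(values)}")
    sample1 = values[:VOXELS]
    sample2 = values[VOXELS:-1]
    label = values[-1]
    return sample1, sample2, label


# Synthetic record standing in for the contents of one .csv file (assumption).
record = [0.0] * VOXELS + [1.0] * VOXELS + [1.0]
s1, s2, y = split_record(record)
print(len(s1), len(s2), y)
```

So every file has to be parsed as 2 * 33^3 + 1 text values each time it is requested.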

The error:

My dataset.py is as follows:

dataset.py

    import torch
    import numpy as np
    from torch.utils.data import Dataset
    import pandas


    class Mydataset(Dataset):
        def __init__(self, csvlist):
            # csvlist: list of paths to the .csv files
            self.csvlist = csvlist

        def __len__(self):
            return len(self.csvlist)

        def __getitem__(self, idx):
            csvfile = self.csvlist[idx]
            # Pass the path so np.loadtxt opens and closes the file itself
            inputs = np.loadtxt(csvfile, delimiter=",", skiprows=0)
            inputs = torch.Tensor(inputs)
            # 33 * 33 * 33 = 35937 values per sample
            input1 = inputs[:35937].view(1, 33, 33, 33)
            input2 = inputs[35937:-1].view(1, 33, 33, 33)
            # The last value in the file is the label
            label = torch.zeros(1)
            label[0] = inputs[-1]
            return input1, input2, label
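
One way to check whether the growth comes from loading the data rather than from the training loop is to iterate over the items without the model and watch allocations between passes. Below is a minimal sketch using the standard-library `tracemalloc` module. The names `traced_memory_per_pass` and `fake_loader` are hypothetical; `fake_loader` stands in for something like `lambda i: dataset[i]`. Note that `tracemalloc` only sees allocations reported to Python's allocator, so memory held in native buffers may not be counted.

```python
import tracemalloc


def traced_memory_per_pass(load_item, n_items, n_passes=3):
    """Return the traced memory (in bytes) after each full pass over the items."""
    tracemalloc.start()
    usage = []
    try:
        for _ in range(n_passes):
            for i in range(n_items):
                load_item(i)  # result is discarded, so it can be freed
            current, _peak = tracemalloc.get_traced_memory()
            usage.append(current)
    finally:
        tracemalloc.stop()
    return usage


# Stand-in loader (assumption): builds a list and keeps no reference to it.
def fake_loader(i):
    return [float(i)] * 1000


usage = traced_memory_per_pass(fake_loader, n_items=50)
print(usage)
```

If the numbers stay roughly flat from pass to pass, the loader is probably not what is holding on to memory; if they climb steadily, something is keeping references to the loaded items.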