Hi!

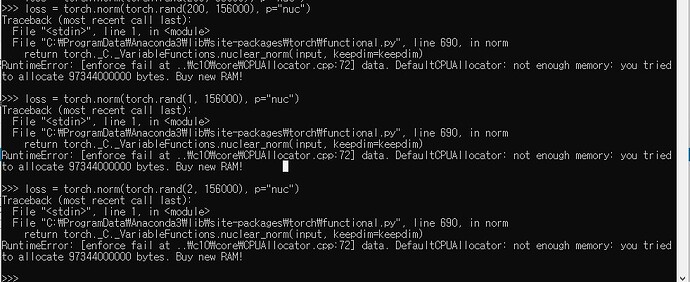

I’m working with the nuclear norm, torch.norm(…, p="nuc").

I know that I’m using a very large matrix, but why doesn’t the required memory change when the size of the first dimension changes?

The result of a simple test is shown in the image below.

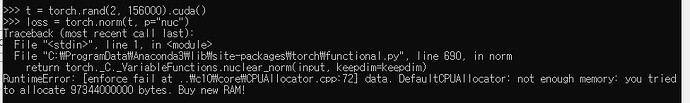
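In case text is easier to work with than a screenshot, here is a minimal sketch of the kind of measurement I mean. It is not the exact code from the image: the helper name `peak_memory_for_nuc` and the matrix sizes are made up for illustration, and the peak-memory figure is only reported when a CUDA device is available.

```python
import torch

def peak_memory_for_nuc(rows, cols=64):
    """Return (nuclear norm, peak CUDA bytes or None) for a rows x cols matrix."""
    use_cuda = torch.cuda.is_available()
    device = "cuda" if use_cuda else "cpu"
    a = torch.randn(rows, cols, device=device)
    if use_cuda:
        # Reset the peak counter so it only reflects the norm computation
        # (plus the input tensor that is already allocated).
        torch.cuda.reset_peak_memory_stats()
    value = torch.norm(a, p="nuc").item()
    peak = torch.cuda.max_memory_allocated() if use_cuda else None
    return value, peak

# Vary only the first dimension (hypothetical sizes) and compare the peaks.
for rows in (128, 256, 512):
    print(rows, peak_memory_for_nuc(rows))
```

If the reported peak stays the same across the different row counts, that would reproduce what I am seeing.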

Also, my tensor is on the GPU (CUDA), but I think the nuclear norm can only be computed on the CPU. Is that right?
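A small sketch of how I would check this, assuming a recent PyTorch where `torch.linalg.svdvals` is available. The helper name `nuclear_norm_two_ways` is hypothetical. It runs on CUDA when a device is present and falls back to the CPU otherwise, and compares `torch.norm(…, p="nuc")` against the sum of the singular values, which is the definition of the nuclear norm.

```python
import torch

def nuclear_norm_two_ways(rows, cols, device=None):
    """Compute the nuclear norm via torch.norm and via the singular values."""
    if device is None:
        device = "cuda" if torch.cuda.is_available() else "cpu"
    a = torch.randn(rows, cols, device=device, dtype=torch.float64)
    via_norm = torch.norm(a, p="nuc")         # built-in nuclear norm
    via_svd = torch.linalg.svdvals(a).sum()   # sum of singular values
    return via_norm.item(), via_svd.item()

n1, n2 = nuclear_norm_two_ways(200, 50)
print(n1, n2)  # the two values should agree up to floating-point error
```

If this runs without an error when the tensor is on `cuda`, then the computation is at least being accepted for GPU tensors; whether part of the work happens on the CPU internally is something I cannot tell from this alone.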