I’m trying to quantize a MobileNetV2 + SSDLite model from https://github.com/qfgaohao/pytorch-ssd

I followed the post-training static quantization tutorial at https://pytorch.org/tutorials/advanced/static_quantization_tutorial.html

Before quantization, the model definition looks like this:

SSD(

(base_net): Sequential(

(0): Sequential(

(0): Conv2d(3, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU6(inplace=True)

)

(1): InvertedResidual(

(conv): Sequential(

(0): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=32, bias=False)

(1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU6(inplace=True)

(3): Conv2d(32, 16, kernel_size=(1, 1), stride=(1, 1), bias=False)

(4): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(2): InvertedResidual(

(conv): Sequential(

(0): Conv2d(16, 96, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU6(inplace=True)

(3): Conv2d(96, 96, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=96, bias=False)

(4): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(5): ReLU6(inplace=True)

(6): Conv2d(96, 24, kernel_size=(1, 1), stride=(1, 1), bias=False)

(7): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

**#Removed some stuff to stay under 32K characters**

(5): Conv2d(64, 24, kernel_size=(1, 1), stride=(1, 1))

)

(source_layer_add_ons): ModuleList()

)

Quantization is done using:

model.eval().to('cpu')

model.fuse_model()

model.qconfig = torch.quantization.get_default_qconfig('fbgemm')

torch.quantization.prepare(model, inplace=True)

torch.quantization.convert(model, inplace=True)
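For comparison, here is a minimal sketch of the same steps with a calibration pass between `prepare` and `convert`, as the tutorial does. The steps listed above do not show one, and the `scale=1.0, zero_point=0` values in the printout below are consistent with observers that never saw any data. `TinyNet`, its layer names, and the random calibration batches are hypothetical stand-ins for the SSD model and a real calibration set; the engine fallback to `qnnpack` is an assumption for machines without `fbgemm`.

```python
import torch
import torch.nn as nn

# Pick a quantized backend that this build of PyTorch supports.
engine = "fbgemm" if "fbgemm" in torch.backends.quantized.supported_engines else "qnnpack"
torch.backends.quantized.engine = engine


class TinyNet(nn.Module):
    """Hypothetical stand-in for the SSD model: one conv + bn + relu block."""

    def __init__(self):
        super().__init__()
        self.quant = torch.quantization.QuantStub()      # float -> quantized
        self.conv = nn.Conv2d(3, 8, kernel_size=3, padding=1, bias=False)
        self.bn = nn.BatchNorm2d(8)
        self.relu = nn.ReLU()
        self.dequant = torch.quantization.DeQuantStub()  # quantized -> float

    def forward(self, x):
        x = self.quant(x)
        x = self.relu(self.bn(self.conv(x)))
        return self.dequant(x)

    def fuse_model(self):
        # Fold conv + bn + relu into a single module before quantizing.
        torch.quantization.fuse_modules(self, [["conv", "bn", "relu"]], inplace=True)


def quantize(model, calibration_batches):
    model.eval().to("cpu")
    model.fuse_model()
    model.qconfig = torch.quantization.get_default_qconfig(engine)
    torch.quantization.prepare(model, inplace=True)
    # Calibration: run representative inputs so the observers record ranges.
    with torch.no_grad():
        for batch in calibration_batches:
            model(batch)
    torch.quantization.convert(model, inplace=True)
    return model


torch.manual_seed(0)
# Random tensors stand in for real calibration images here.
calibration_batches = [torch.randn(1, 3, 16, 16) for _ in range(4)]
q_model = quantize(TinyNet(), calibration_batches)
out = q_model(torch.randn(1, 3, 16, 16))
print(q_model)
```

After fusion the `bn` and `relu` slots are replaced by `Identity` modules, so a printout that still lists `BatchNorm2d` layers may indicate that `fuse_model()` did not fuse those blocks. On recent PyTorch releases the same functions also live under `torch.ao.quantization`.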

After quantization, the model definition looks like this:

SSD(

(base_net): Sequential(

(0): Sequential(

(0): QuantizedConv2d(3, 32, kernel_size=(3, 3), stride=(2, 2), scale=1.0, zero_point=0, padding=(1, 1), bias=False)

(1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): QuantizedReLU6(inplace=True)

)

(1): InvertedResidual(

(conv): Sequential(

(0): QuantizedConv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), scale=1.0, zero_point=0, padding=(1, 1), groups=32, bias=False)

(1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): QuantizedReLU6(inplace=True)

(3): QuantizedConv2d(32, 16, kernel_size=(1, 1), stride=(1, 1), scale=1.0, zero_point=0, bias=False)

(4): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(2): InvertedResidual(

(conv): Sequential(

(0): QuantizedConv2d(16, 96, kernel_size=(1, 1), stride=(1, 1), scale=1.0, zero_point=0, bias=False)

(1): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): QuantizedReLU6(inplace=True)

(3): QuantizedConv2d(96, 96, kernel_size=(3, 3), stride=(2, 2), scale=1.0, zero_point=0, padding=(1, 1), groups=96, bias=False)

(4): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(5): QuantizedReLU6(inplace=True)

(6): QuantizedConv2d(96, 24, kernel_size=(1, 1), stride=(1, 1), scale=1.0, zero_point=0, bias=False)

(7): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

**#Removed some stuff to stay under 32K characters**

(5): QuantizedConv2d(64, 24, kernel_size=(1, 1), stride=(1, 1), scale=1.0, zero_point=0)

)

(source_layer_add_ons): ModuleList()

)

The model size decreased from 14MB to 4MB.

But with this new definition, how can I load the quantized model?

I’m trying the following and getting the error below:

#Saving

torch.save(q_model.state_dict(), project.quantized_trained_model_dir / file_name)

#Loading the saved quantized model

lq_model = create_mobilenetv2_ssd_lite(len(class_names), is_test=True)

lq_model.load(project.quantized_trained_model_dir / file_name)

#Error

RuntimeError: Error(s) in loading state_dict for SSD:

Unexpected key(s) in state_dict: "base_net.0.0.scale", "base_net.0.0.zero_point", "base_net.0.0.bias", "base_net.1.conv.0.scale", "base_net.1.conv.0.zero_point", "base_net.1.conv.0.bias", "base_net.1.conv.3.scale", "base_net.1.conv.3.zero_point", "base_net.1.conv.3.bias", "base_net.2.conv.0.scale"...

I understand that quantization replaces some layers (Conv2d -> QuantizedConv2d), but does that mean I need two model definitions, one for the original model and one for the quantized version?

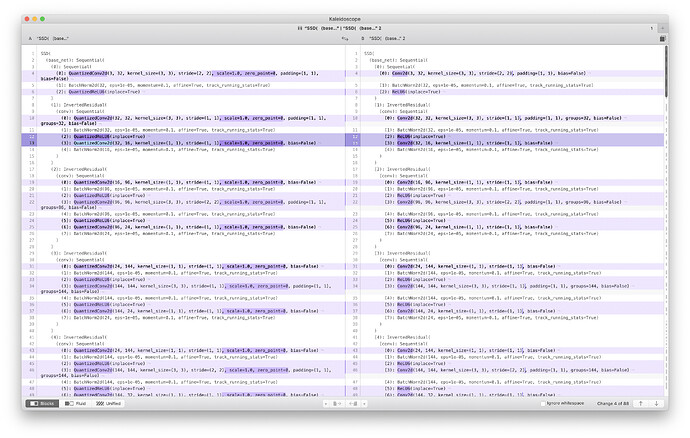

This is a diff of the two definitions.
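One commonly used pattern for loading, sketched here under assumptions: keep a single float model definition, rebuild it, run the same fuse / prepare / convert steps so the module types and state_dict keys match the quantized checkpoint, and only then call `load_state_dict`. `TinyNet` and `build_quantized_skeleton` are hypothetical names standing in for `create_mobilenetv2_ssd_lite(...)` and the real model; an in-memory buffer replaces the checkpoint file so the example is self-contained.

```python
import io

import torch
import torch.nn as nn

engine = "fbgemm" if "fbgemm" in torch.backends.quantized.supported_engines else "qnnpack"
torch.backends.quantized.engine = engine


class TinyNet(nn.Module):
    """Hypothetical stand-in for the float SSD model definition."""

    def __init__(self):
        super().__init__()
        self.quant = torch.quantization.QuantStub()
        self.conv = nn.Conv2d(3, 8, kernel_size=3, padding=1, bias=False)
        self.bn = nn.BatchNorm2d(8)
        self.relu = nn.ReLU()
        self.dequant = torch.quantization.DeQuantStub()

    def forward(self, x):
        x = self.quant(x)
        x = self.relu(self.bn(self.conv(x)))
        return self.dequant(x)

    def fuse_model(self):
        torch.quantization.fuse_modules(self, [["conv", "bn", "relu"]], inplace=True)


def build_quantized_skeleton():
    """Rebuild the float model and convert it so its keys match a quantized checkpoint.

    No calibration is needed here: scales and zero points are overwritten
    when the saved state_dict is loaded.
    """
    model = TinyNet().eval()
    model.fuse_model()
    model.qconfig = torch.quantization.get_default_qconfig(engine)
    torch.quantization.prepare(model, inplace=True)
    torch.quantization.convert(model, inplace=True)
    return model


torch.manual_seed(0)

# Produce a calibrated quantized model to save (same steps plus calibration).
q_model = TinyNet().eval()
q_model.fuse_model()
q_model.qconfig = torch.quantization.get_default_qconfig(engine)
torch.quantization.prepare(q_model, inplace=True)
with torch.no_grad():
    for _ in range(4):
        q_model(torch.randn(1, 3, 16, 16))  # random data as a calibration stand-in
torch.quantization.convert(q_model, inplace=True)

# Saving: an in-memory buffer stands in for the checkpoint file.
buffer = io.BytesIO()
torch.save(q_model.state_dict(), buffer)
buffer.seek(0)

# Loading: convert the fresh model first, then load the quantized weights.
lq_model = build_quantized_skeleton()
lq_model.load_state_dict(torch.load(buffer))

x = torch.randn(1, 3, 16, 16)
same = torch.equal(q_model(x), lq_model(x))
print("outputs match:", same)
```

With this approach the original definition is reused as-is; the quantized structure is recreated at load time rather than maintained as a second class. Saving the whole model with TorchScript (`torch.jit.save`) is another option that avoids rebuilding the structure, though it is not shown here.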