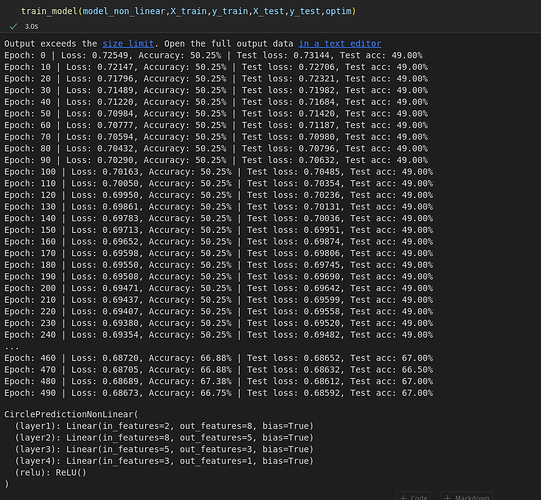

These are the results of the second call:

```python
train_model(model_seq_non_linear, X_train, y_train, X_test, y_test, optim_seq)
```
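The post doesn't show `train_model` itself. For reference, here is a minimal sketch of what such a function might look like, written to print log lines in the same format as the output (500 epochs, logging every 10). The loss function (`nn.BCEWithLogitsLoss`), the `accuracy_fn` helper and the `epochs` parameter are assumptions, not the author's code. The relevant detail is that `optimizer.step()` updates the parameters of the model that was passed in, in place.

```python
import torch
from torch import nn

def accuracy_fn(y_true: torch.Tensor, y_pred: torch.Tensor) -> float:
    # Percentage of predictions that match the labels (hypothetical helper)
    correct = torch.eq(y_true, y_pred).sum().item()
    return correct / len(y_pred) * 100

def train_model(model, X_train, y_train, X_test, y_test, optimizer, epochs=500):
    # Hypothetical loop: the real train_model is not shown in the post.
    # BCEWithLogitsLoss is an assumption (binary labels, raw logits as outputs).
    loss_fn = nn.BCEWithLogitsLoss()
    for epoch in range(epochs):
        model.train()
        logits = model(X_train).squeeze(1)
        preds = torch.round(torch.sigmoid(logits))
        loss = loss_fn(logits, y_train)
        acc = accuracy_fn(y_train, preds)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()  # updates the passed-in model's parameters in place

        model.eval()
        with torch.no_grad():
            test_logits = model(X_test).squeeze(1)
            test_preds = torch.round(torch.sigmoid(test_logits))
            test_loss = loss_fn(test_logits, y_test)
            test_acc = accuracy_fn(y_test, test_preds)

        if epoch % 10 == 0:
            print(f"Epoch: {epoch} | Loss: {loss.item():.5f}, Accuracy: {acc:.2f}% | "
                  f"Test loss: {test_loss.item():.5f}, Test acc: {test_acc:.2f}%")
    return model
```

Because nothing inside the function re-initializes the model, whatever weights it holds when the call starts are the weights training continues from.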

The output:

```text
Epoch: 0 | Loss: 0.68657, Accuracy: 66.62% | Test loss: 0.68570, Test acc: 66.50%
Epoch: 10 | Loss: 0.68640, Accuracy: 67.25% | Test loss: 0.68548, Test acc: 67.50%
Epoch: 20 | Loss: 0.68622, Accuracy: 67.00% | Test loss: 0.68525, Test acc: 66.00%
Epoch: 30 | Loss: 0.68604, Accuracy: 66.62% | Test loss: 0.68502, Test acc: 67.00%
Epoch: 40 | Loss: 0.68586, Accuracy: 65.50% | Test loss: 0.68478, Test acc: 66.50%
Epoch: 50 | Loss: 0.68567, Accuracy: 65.50% | Test loss: 0.68454, Test acc: 66.50%
Epoch: 60 | Loss: 0.68547, Accuracy: 64.75% | Test loss: 0.68430, Test acc: 66.00%
Epoch: 70 | Loss: 0.68527, Accuracy: 64.50% | Test loss: 0.68406, Test acc: 66.50%
Epoch: 80 | Loss: 0.68507, Accuracy: 63.88% | Test loss: 0.68382, Test acc: 65.50%
Epoch: 90 | Loss: 0.68486, Accuracy: 63.88% | Test loss: 0.68359, Test acc: 66.50%
Epoch: 100 | Loss: 0.68466, Accuracy: 63.88% | Test loss: 0.68335, Test acc: 67.00%
Epoch: 110 | Loss: 0.68445, Accuracy: 63.75% | Test loss: 0.68312, Test acc: 67.00%
Epoch: 120 | Loss: 0.68424, Accuracy: 63.62% | Test loss: 0.68289, Test acc: 67.00%
Epoch: 130 | Loss: 0.68402, Accuracy: 63.62% | Test loss: 0.68266, Test acc: 67.00%
Epoch: 140 | Loss: 0.68380, Accuracy: 63.62% | Test loss: 0.68242, Test acc: 67.50%
Epoch: 150 | Loss: 0.68358, Accuracy: 63.62% | Test loss: 0.68218, Test acc: 68.00%
Epoch: 160 | Loss: 0.68336, Accuracy: 63.75% | Test loss: 0.68194, Test acc: 68.00%
Epoch: 170 | Loss: 0.68312, Accuracy: 64.00% | Test loss: 0.68169, Test acc: 68.00%
Epoch: 180 | Loss: 0.68289, Accuracy: 64.12% | Test loss: 0.68144, Test acc: 68.00%
Epoch: 190 | Loss: 0.68265, Accuracy: 64.50% | Test loss: 0.68118, Test acc: 68.00%
Epoch: 200 | Loss: 0.68241, Accuracy: 64.50% | Test loss: 0.68093, Test acc: 68.00%
Epoch: 210 | Loss: 0.68216, Accuracy: 64.62% | Test loss: 0.68066, Test acc: 68.50%
Epoch: 220 | Loss: 0.68190, Accuracy: 64.62% | Test loss: 0.68039, Test acc: 68.50%
Epoch: 230 | Loss: 0.68164, Accuracy: 65.00% | Test loss: 0.68011, Test acc: 68.00%
Epoch: 240 | Loss: 0.68138, Accuracy: 65.25% | Test loss: 0.67982, Test acc: 68.00%
...
Epoch: 460 | Loss: 0.67342, Accuracy: 69.38% | Test loss: 0.67123, Test acc: 72.00%
Epoch: 470 | Loss: 0.67293, Accuracy: 69.62% | Test loss: 0.67070, Test acc: 72.00%
Epoch: 480 | Loss: 0.67242, Accuracy: 69.62% | Test loss: 0.67016, Test acc: 72.50%
Epoch: 490 | Loss: 0.67190, Accuracy: 69.75% | Test loss: 0.66961, Test acc: 72.50%
CirclePredictionNonLinear(
  (layer1): Linear(in_features=2, out_features=8, bias=True)
  (layer2): Linear(in_features=8, out_features=5, bias=True)
  (layer3): Linear(in_features=5, out_features=3, bias=True)
  (layer4): Linear(in_features=3, out_features=1, bias=True)
  (relu): ReLU()
)
```
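The printed repr gives the layer sizes but not the forward pass. A class consistent with it might look like the sketch below; applying ReLU after each of the first three layers and returning raw logits from `layer4` is an assumption, since the post doesn't include the class definition.

```python
import torch
from torch import nn

class CirclePredictionNonLinear(nn.Module):
    # Layer sizes copied from the printed repr; the forward pass is an assumption.
    def __init__(self):
        super().__init__()
        self.layer1 = nn.Linear(in_features=2, out_features=8)
        self.layer2 = nn.Linear(in_features=8, out_features=5)
        self.layer3 = nn.Linear(in_features=5, out_features=3)
        self.layer4 = nn.Linear(in_features=3, out_features=1)
        self.relu = nn.ReLU()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # ReLU between hidden layers; the last layer returns raw logits
        x = self.relu(self.layer1(x))
        x = self.relu(self.layer2(x))
        x = self.relu(self.layer3(x))
        return self.layer4(x)
```

Submodules are registered in the order they are assigned in `__init__`, which is why `relu` appears last in the repr.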

The third time I run the same line:

```text
Epoch: 0 | Loss: 0.67136, Accuracy: 70.12% | Test loss: 0.66903, Test acc: 72.50%
Epoch: 10 | Loss: 0.67080, Accuracy: 70.38% | Test loss: 0.66844, Test acc: 72.50%
Epoch: 20 | Loss: 0.67023, Accuracy: 70.50% | Test loss: 0.66784, Test acc: 72.50%
Epoch: 30 | Loss: 0.66964, Accuracy: 70.50% | Test loss: 0.66721, Test acc: 72.50%
Epoch: 40 | Loss: 0.66903, Accuracy: 70.50% | Test loss: 0.66657, Test acc: 72.50%
Epoch: 50 | Loss: 0.66840, Accuracy: 70.62% | Test loss: 0.66590, Test acc: 72.50%
Epoch: 60 | Loss: 0.66775, Accuracy: 71.00% | Test loss: 0.66522, Test acc: 73.00%
Epoch: 70 | Loss: 0.66708, Accuracy: 71.12% | Test loss: 0.66451, Test acc: 73.00%
Epoch: 80 | Loss: 0.66639, Accuracy: 71.38% | Test loss: 0.66377, Test acc: 73.50%
Epoch: 90 | Loss: 0.66567, Accuracy: 71.50% | Test loss: 0.66302, Test acc: 74.00%
Epoch: 100 | Loss: 0.66493, Accuracy: 71.75% | Test loss: 0.66225, Test acc: 74.00%
Epoch: 110 | Loss: 0.66417, Accuracy: 72.12% | Test loss: 0.66146, Test acc: 74.00%
Epoch: 120 | Loss: 0.66338, Accuracy: 72.38% | Test loss: 0.66064, Test acc: 74.00%
Epoch: 130 | Loss: 0.66256, Accuracy: 72.62% | Test loss: 0.65979, Test acc: 74.50%
Epoch: 140 | Loss: 0.66172, Accuracy: 72.75% | Test loss: 0.65891, Test acc: 75.50%
Epoch: 150 | Loss: 0.66086, Accuracy: 72.75% | Test loss: 0.65800, Test acc: 75.50%
Epoch: 160 | Loss: 0.65996, Accuracy: 73.00% | Test loss: 0.65706, Test acc: 75.50%
Epoch: 170 | Loss: 0.65904, Accuracy: 73.12% | Test loss: 0.65609, Test acc: 75.50%
Epoch: 180 | Loss: 0.65809, Accuracy: 73.25% | Test loss: 0.65509, Test acc: 75.50%
Epoch: 190 | Loss: 0.65711, Accuracy: 73.38% | Test loss: 0.65405, Test acc: 75.50%
Epoch: 200 | Loss: 0.65610, Accuracy: 73.62% | Test loss: 0.65300, Test acc: 76.00%
Epoch: 210 | Loss: 0.65505, Accuracy: 73.88% | Test loss: 0.65191, Test acc: 76.50%
Epoch: 220 | Loss: 0.65398, Accuracy: 74.00% | Test loss: 0.65079, Test acc: 76.50%
Epoch: 230 | Loss: 0.65286, Accuracy: 74.25% | Test loss: 0.64963, Test acc: 76.50%
Epoch: 240 | Loss: 0.65170, Accuracy: 74.25% | Test loss: 0.64844, Test acc: 76.50%
...
Epoch: 460 | Loss: 0.61325, Accuracy: 78.88% | Test loss: 0.60937, Test acc: 80.00%
Epoch: 470 | Loss: 0.61072, Accuracy: 79.00% | Test loss: 0.60683, Test acc: 80.00%
Epoch: 480 | Loss: 0.60810, Accuracy: 79.62% | Test loss: 0.60423, Test acc: 80.00%
Epoch: 490 | Loss: 0.60540, Accuracy: 80.00% | Test loss: 0.60157, Test acc: 80.00%
CirclePredictionNonLinear(
  (layer1): Linear(in_features=2, out_features=8, bias=True)
  (layer2): Linear(in_features=8, out_features=5, bias=True)
  (layer3): Linear(in_features=5, out_features=3, bias=True)
  (layer4): Linear(in_features=3, out_features=1, bias=True)
  (relu): ReLU()
)
```

When I call it a fourth time:

```text
Epoch: 0 | Loss: 0.60262, Accuracy: 80.12% | Test loss: 0.59881, Test acc: 80.00%
Epoch: 10 | Loss: 0.59973, Accuracy: 80.38% | Test loss: 0.59598, Test acc: 80.00%
Epoch: 20 | Loss: 0.59673, Accuracy: 80.75% | Test loss: 0.59307, Test acc: 80.00%
Epoch: 30 | Loss: 0.59366, Accuracy: 80.88% | Test loss: 0.59006, Test acc: 81.00%
Epoch: 40 | Loss: 0.59051, Accuracy: 81.00% | Test loss: 0.58695, Test acc: 81.00%
Epoch: 50 | Loss: 0.58726, Accuracy: 81.25% | Test loss: 0.58374, Test acc: 80.50%
Epoch: 60 | Loss: 0.58390, Accuracy: 81.00% | Test loss: 0.58047, Test acc: 80.50%
Epoch: 70 | Loss: 0.58043, Accuracy: 81.25% | Test loss: 0.57710, Test acc: 81.00%
Epoch: 80 | Loss: 0.57684, Accuracy: 81.62% | Test loss: 0.57362, Test acc: 81.00%
Epoch: 90 | Loss: 0.57312, Accuracy: 81.62% | Test loss: 0.57002, Test acc: 81.50%
Epoch: 100 | Loss: 0.56927, Accuracy: 81.75% | Test loss: 0.56630, Test acc: 82.50%
Epoch: 110 | Loss: 0.56530, Accuracy: 82.38% | Test loss: 0.56247, Test acc: 83.50%
Epoch: 120 | Loss: 0.56117, Accuracy: 82.88% | Test loss: 0.55849, Test acc: 83.50%
Epoch: 130 | Loss: 0.55691, Accuracy: 83.25% | Test loss: 0.55437, Test acc: 83.50%
Epoch: 140 | Loss: 0.55255, Accuracy: 83.12% | Test loss: 0.55018, Test acc: 83.50%
Epoch: 150 | Loss: 0.54809, Accuracy: 83.62% | Test loss: 0.54587, Test acc: 83.50%
Epoch: 160 | Loss: 0.54350, Accuracy: 83.88% | Test loss: 0.54145, Test acc: 83.50%
Epoch: 170 | Loss: 0.53880, Accuracy: 84.00% | Test loss: 0.53693, Test acc: 84.00%
Epoch: 180 | Loss: 0.53392, Accuracy: 84.25% | Test loss: 0.53226, Test acc: 84.00%
Epoch: 190 | Loss: 0.52891, Accuracy: 84.38% | Test loss: 0.52745, Test acc: 84.00%
Epoch: 200 | Loss: 0.52379, Accuracy: 84.50% | Test loss: 0.52258, Test acc: 84.00%
Epoch: 210 | Loss: 0.51856, Accuracy: 85.00% | Test loss: 0.51765, Test acc: 84.00%
Epoch: 220 | Loss: 0.51325, Accuracy: 85.38% | Test loss: 0.51263, Test acc: 84.50%
Epoch: 230 | Loss: 0.50789, Accuracy: 85.75% | Test loss: 0.50757, Test acc: 84.50%
Epoch: 240 | Loss: 0.50241, Accuracy: 86.12% | Test loss: 0.50248, Test acc: 84.50%
...
Epoch: 460 | Loss: 0.36920, Accuracy: 91.00% | Test loss: 0.38700, Test acc: 89.00%
Epoch: 470 | Loss: 0.36351, Accuracy: 91.25% | Test loss: 0.38228, Test acc: 89.00%
Epoch: 480 | Loss: 0.35778, Accuracy: 91.38% | Test loss: 0.37786, Test acc: 89.00%
Epoch: 490 | Loss: 0.35200, Accuracy: 91.62% | Test loss: 0.37374, Test acc: 89.00%
CirclePredictionNonLinear(
  (layer1): Linear(in_features=2, out_features=8, bias=True)
  (layer2): Linear(in_features=8, out_features=5, bias=True)
  (layer3): Linear(in_features=5, out_features=3, bias=True)
  (layer4): Linear(in_features=3, out_features=1, bias=True)
  (relu): ReLU()
)
```

And the fifth call:

```text
Epoch: 0 | Loss: 0.34634, Accuracy: 91.62% | Test loss: 0.36953, Test acc: 90.00%
Epoch: 10 | Loss: 0.34066, Accuracy: 91.88% | Test loss: 0.36541, Test acc: 90.00%
Epoch: 20 | Loss: 0.33518, Accuracy: 92.00% | Test loss: 0.36125, Test acc: 90.00%
Epoch: 30 | Loss: 0.32981, Accuracy: 92.12% | Test loss: 0.35709, Test acc: 90.00%
Epoch: 40 | Loss: 0.32450, Accuracy: 92.12% | Test loss: 0.35301, Test acc: 90.00%
Epoch: 50 | Loss: 0.31925, Accuracy: 92.25% | Test loss: 0.34907, Test acc: 90.00%
Epoch: 60 | Loss: 0.31406, Accuracy: 92.25% | Test loss: 0.34522, Test acc: 90.00%
Epoch: 70 | Loss: 0.30882, Accuracy: 92.38% | Test loss: 0.34156, Test acc: 90.00%
Epoch: 80 | Loss: 0.30361, Accuracy: 92.75% | Test loss: 0.33790, Test acc: 90.00%
Epoch: 90 | Loss: 0.29847, Accuracy: 92.88% | Test loss: 0.33419, Test acc: 90.00%
Epoch: 100 | Loss: 0.29340, Accuracy: 93.12% | Test loss: 0.33047, Test acc: 90.00%
Epoch: 110 | Loss: 0.28836, Accuracy: 93.12% | Test loss: 0.32668, Test acc: 90.00%
Epoch: 120 | Loss: 0.28344, Accuracy: 93.12% | Test loss: 0.32291, Test acc: 90.00%
Epoch: 130 | Loss: 0.27847, Accuracy: 93.50% | Test loss: 0.31906, Test acc: 90.00%
Epoch: 140 | Loss: 0.27358, Accuracy: 93.50% | Test loss: 0.31508, Test acc: 90.00%
Epoch: 150 | Loss: 0.26866, Accuracy: 93.50% | Test loss: 0.31101, Test acc: 90.50%
Epoch: 160 | Loss: 0.26372, Accuracy: 93.62% | Test loss: 0.30680, Test acc: 91.00%
Epoch: 170 | Loss: 0.25888, Accuracy: 93.88% | Test loss: 0.30235, Test acc: 91.00%
Epoch: 180 | Loss: 0.25390, Accuracy: 94.00% | Test loss: 0.29788, Test acc: 91.00%
Epoch: 190 | Loss: 0.24888, Accuracy: 94.00% | Test loss: 0.29310, Test acc: 91.00%
Epoch: 200 | Loss: 0.24392, Accuracy: 94.12% | Test loss: 0.28803, Test acc: 91.00%
Epoch: 210 | Loss: 0.23902, Accuracy: 94.25% | Test loss: 0.28296, Test acc: 91.00%
Epoch: 220 | Loss: 0.23402, Accuracy: 94.50% | Test loss: 0.27763, Test acc: 91.00%
Epoch: 230 | Loss: 0.22910, Accuracy: 94.75% | Test loss: 0.27222, Test acc: 91.00%
Epoch: 240 | Loss: 0.22411, Accuracy: 94.88% | Test loss: 0.26646, Test acc: 91.50%
...
Epoch: 460 | Loss: 0.11658, Accuracy: 99.62% | Test loss: 0.13017, Test acc: 100.00%
Epoch: 470 | Loss: 0.11262, Accuracy: 99.88% | Test loss: 0.12518, Test acc: 100.00%
Epoch: 480 | Loss: 0.10882, Accuracy: 99.88% | Test loss: 0.12047, Test acc: 100.00%
Epoch: 490 | Loss: 0.10512, Accuracy: 99.88% | Test loss: 0.11594, Test acc: 100.00%
CirclePredictionNonLinear(
  (layer1): Linear(in_features=2, out_features=8, bias=True)
  (layer2): Linear(in_features=8, out_features=5, bias=True)
  (layer3): Linear(in_features=5, out_features=3, bias=True)
  (layer4): Linear(in_features=3, out_features=1, bias=True)
  (relu): ReLU()
)
```

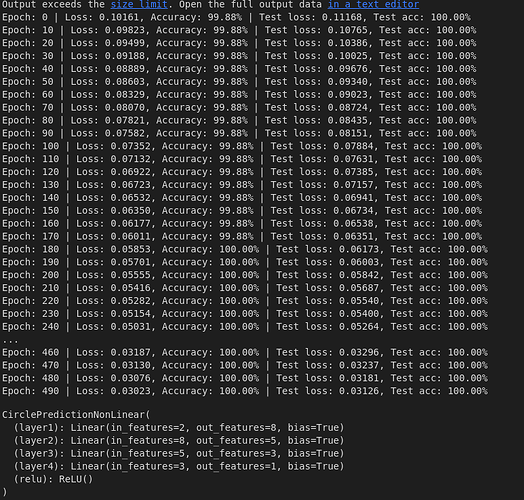

Now that it has reached 100% test accuracy, let's call it once more:

```text
Epoch: 0 | Loss: 0.10161, Accuracy: 99.88% | Test loss: 0.11168, Test acc: 100.00%
Epoch: 10 | Loss: 0.09823, Accuracy: 99.88% | Test loss: 0.10765, Test acc: 100.00%
Epoch: 20 | Loss: 0.09499, Accuracy: 99.88% | Test loss: 0.10386, Test acc: 100.00%
Epoch: 30 | Loss: 0.09188, Accuracy: 99.88% | Test loss: 0.10025, Test acc: 100.00%
Epoch: 40 | Loss: 0.08889, Accuracy: 99.88% | Test loss: 0.09676, Test acc: 100.00%
Epoch: 50 | Loss: 0.08603, Accuracy: 99.88% | Test loss: 0.09340, Test acc: 100.00%
Epoch: 60 | Loss: 0.08329, Accuracy: 99.88% | Test loss: 0.09023, Test acc: 100.00%
Epoch: 70 | Loss: 0.08070, Accuracy: 99.88% | Test loss: 0.08724, Test acc: 100.00%
Epoch: 80 | Loss: 0.07821, Accuracy: 99.88% | Test loss: 0.08435, Test acc: 100.00%
Epoch: 90 | Loss: 0.07582, Accuracy: 99.88% | Test loss: 0.08151, Test acc: 100.00%
Epoch: 100 | Loss: 0.07352, Accuracy: 99.88% | Test loss: 0.07884, Test acc: 100.00%
Epoch: 110 | Loss: 0.07132, Accuracy: 99.88% | Test loss: 0.07631, Test acc: 100.00%
Epoch: 120 | Loss: 0.06922, Accuracy: 99.88% | Test loss: 0.07385, Test acc: 100.00%
Epoch: 130 | Loss: 0.06723, Accuracy: 99.88% | Test loss: 0.07157, Test acc: 100.00%
Epoch: 140 | Loss: 0.06532, Accuracy: 99.88% | Test loss: 0.06941, Test acc: 100.00%
Epoch: 150 | Loss: 0.06350, Accuracy: 99.88% | Test loss: 0.06734, Test acc: 100.00%
Epoch: 160 | Loss: 0.06177, Accuracy: 99.88% | Test loss: 0.06538, Test acc: 100.00%
Epoch: 170 | Loss: 0.06011, Accuracy: 99.88% | Test loss: 0.06351, Test acc: 100.00%
Epoch: 180 | Loss: 0.05853, Accuracy: 100.00% | Test loss: 0.06173, Test acc: 100.00%
Epoch: 190 | Loss: 0.05701, Accuracy: 100.00% | Test loss: 0.06003, Test acc: 100.00%
Epoch: 200 | Loss: 0.05555, Accuracy: 100.00% | Test loss: 0.05842, Test acc: 100.00%
Epoch: 210 | Loss: 0.05416, Accuracy: 100.00% | Test loss: 0.05687, Test acc: 100.00%
Epoch: 220 | Loss: 0.05282, Accuracy: 100.00% | Test loss: 0.05540, Test acc: 100.00%
Epoch: 230 | Loss: 0.05154, Accuracy: 100.00% | Test loss: 0.05400, Test acc: 100.00%
Epoch: 240 | Loss: 0.05031, Accuracy: 100.00% | Test loss: 0.05264, Test acc: 100.00%
...
Epoch: 460 | Loss: 0.03187, Accuracy: 100.00% | Test loss: 0.03296, Test acc: 100.00%
Epoch: 470 | Loss: 0.03130, Accuracy: 100.00% | Test loss: 0.03237, Test acc: 100.00%
Epoch: 480 | Loss: 0.03076, Accuracy: 100.00% | Test loss: 0.03181, Test acc: 100.00%
Epoch: 490 | Loss: 0.03023, Accuracy: 100.00% | Test loss: 0.03126, Test acc: 100.00%
CirclePredictionNonLinear(
  (layer1): Linear(in_features=2, out_features=8, bias=True)
  (layer2): Linear(in_features=8, out_features=5, bias=True)
  (layer3): Linear(in_features=5, out_features=3, bias=True)
  (layer4): Linear(in_features=3, out_features=1, bias=True)
  (relu): ReLU()
)
```

You can see that the accuracy at epoch 0 keeps rising from one call to the next, and in the last call the test accuracy is already 100% at epoch 0. This can't simply be because the data is too easy: on the very first call, the accuracy was barely in the 50s.
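One likely explanation, assuming `train_model` updates `model_seq_non_linear` in place through `optim_seq` (the usual pattern for a PyTorch training loop, although the post doesn't show the function), is that each call resumes from the weights the previous call left behind, so epoch 0 of every call is really epoch 500, 1000, and so on of one long run. The toy sketch below illustrates this; the data, the small `nn.Sequential` model and the `run` helper are all hypothetical stand-ins, not the post's code. It also shows one way to restart from scratch: snapshot the initial `state_dict()`, restore it with `load_state_dict()`, and build a fresh optimizer (optimizers such as Adam or SGD with momentum keep their own per-parameter state).

```python
import copy
import torch
from torch import nn

torch.manual_seed(0)

# Toy stand-in data and model (hypothetical, not the post's circle dataset)
X = torch.randn(64, 2)
y = (X.pow(2).sum(dim=1) < 1).float().unsqueeze(1)
model = nn.Sequential(nn.Linear(2, 8), nn.ReLU(), nn.Linear(8, 1))
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = nn.BCEWithLogitsLoss()

def run(model, optimizer, epochs=50):
    # Same loop on every call; returns the loss measured at the call's first epoch
    first_loss = None
    for _ in range(epochs):
        loss = loss_fn(model(X), y)
        if first_loss is None:
            first_loss = loss.item()
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()  # mutates the model's parameters in place
    return first_loss

# Snapshot the untrained weights (deepcopy, since state_dict() shares storage)
initial_state = copy.deepcopy(model.state_dict())

first_call = run(model, optimizer)
second_call = run(model, optimizer)  # starts from the weights the first call left

# Restart: restore the original weights and build a fresh optimizer
model.load_state_dict(initial_state)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
restarted = run(model, optimizer)

print(first_call, second_call, restarted)
```

The restarted run begins with exactly the same loss as the first call, while the second call begins wherever the first one stopped. Re-instantiating the model and its optimizer before each experiment would have the same effect as restoring the snapshot.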