I want to copy the gradients of the loss with respect to the weights for different data samples using PyTorch. In the code below, I iterate over the data loader one sample at a time (batch size = 1) and collect the gradients of the first fully connected layer (fc1). The gradients should differ from sample to sample. The print() call shows the correct gradients, which do differ across samples, but when I store them in a list, every entry holds the same gradient. Any suggestions would be much appreciated. Thanks in advance!

```python
grad_list = []

for data in test_loader:
    inputs, labels = data[0], data[1]
    inputs = torch.autograd.Variable(inputs)
    labels = torch.autograd.Variable(labels)

    # zero the parameter gradients
    optimizer.zero_grad()

    # forward + backward
    output = target_model(inputs)
    loss = criterion(output, labels)
    loss.backward()

    grad_list.append(target_model.fc1.weight.grad.data)
    print(target_model.fc1.weight.grad.data)
```

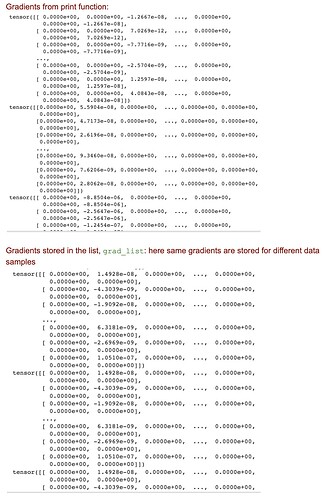

Gradients from the print() and list, grad_list, are shown below:
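A minimal sketch of one likely cause, assuming the list ends up holding references to a single gradient tensor that `zero_grad()` and `backward()` overwrite in place. `TinyNet`, the random `samples`, and the explicit `set_to_none=False` (which reproduces the in-place zeroing that was the default before PyTorch 2.0) are hypothetical stand-ins, not the original model or data. The sketch stores both the raw `.grad.data` (as in the question) and a `.detach().clone()` snapshot for each sample.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical stand-in for target_model, with an fc1 layer."""

    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(4, 3)
        self.fc2 = nn.Linear(3, 2)

    def forward(self, x):
        # tanh keeps fc1's gradient nonzero for every sample
        return self.fc2(torch.tanh(self.fc1(x)))


torch.manual_seed(0)
target_model = TinyNet()
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(target_model.parameters(), lr=0.1)

# Hypothetical data: three (input, label) pairs, one sample per step.
samples = [(torch.randn(1, 4), torch.tensor([i % 2])) for i in range(3)]

aliased_list = []  # like grad_list in the question: all share one storage
copied_list = []   # independent snapshots, one per sample

for inputs, labels in samples:
    # Zero .grad in place (the default before PyTorch 2.0), so the
    # same gradient tensor is reused and overwritten on every iteration.
    optimizer.zero_grad(set_to_none=False)
    loss = criterion(target_model(inputs), labels)
    loss.backward()
    aliased_list.append(target_model.fc1.weight.grad.data)
    # clone() copies the current values into new storage
    copied_list.append(target_model.fc1.weight.grad.detach().clone())

# Every aliased entry now shows the last sample's gradient,
# while the cloned entries keep each sample's own gradient.
print(all(torch.equal(g, aliased_list[-1]) for g in aliased_list))
```

Under this assumption, the analogous change in the original loop would be appending `target_model.fc1.weight.grad.detach().clone()` instead of `target_model.fc1.weight.grad.data`, so that later calls to `zero_grad()` and `backward()` cannot modify the stored copies.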