Good day!

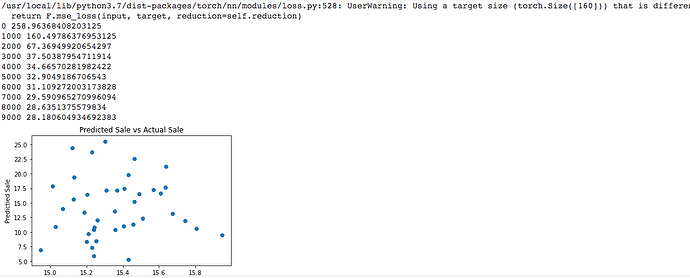

I'm new to PyTorch and am currently learning it from a tutorial. The tutorial uses the Kaggle advertising dataset here: Advertising Dataset | Kaggle. The dataset has 4 columns, and the last column is the one to predict. A plain linear regression on this data would give an R^2 of around 80 to 90%. In the tutorial, the loss drops roughly exponentially (124, 32, 8, 6, 4, 3, 2, 1, 0), and the teacher ended up with an R^2 score of 95%. However, when I copied the code, the loss stayed much higher (160, 67, 37, 34, 32, 31, 29, 28, 28), and I ended up with an R^2 of -0.015. What could explain this? The code I used is shown below:

import pandas as pd

from sklearn import preprocessing

from sklearn.model_selection import train_test_split

import torch

import torch.optim as optim

import matplotlib.pyplot as plt

#Getting Data

advertising_data = pd.read_csv('advertising.csv')

#Scaling Data

advertising_data[['TV']] = preprocessing.scale(advertising_data[['TV']])

advertising_data[['Radio']] = preprocessing.scale(advertising_data[['Radio']])

advertising_data[['Newspaper']] = preprocessing.scale(advertising_data[['Newspaper']])

#Shuffling Data

advertising_data = advertising_data.sample(frac=1)

#Setting Features and Target

X = advertising_data.drop('Sales', axis=1)

Y = advertising_data[['Sales']]

#splitting Data

x_train, x_test, y_train, y_test = train_test_split(X, Y, test_size=0.2, random_state=0)

#Creating the Tensors

x_train_tensor = torch.tensor(x_train.values, dtype=torch.float)

x_test_tensor = torch.tensor(x_test.values, dtype=torch.float)

y_train_tensor = torch.tensor(y_train.values, dtype=torch.float)

y_test_tensor = torch.tensor(y_test.values, dtype=torch.float)

#Defining the Parameters

inp = 3

out = 1

hid = 100

loss_fn = torch.nn.MSELoss()

learning_rate = 0.0001

#Defining Model and Optimizer

model = torch.nn.Sequential(torch.nn.Linear(inp, hid),

                            torch.nn.ReLU(),

                            torch.nn.Linear(hid, out))

optimizer = optim.Adam(model.parameters(), lr=learning_rate)

#Training with 10000 epochs

for iter in range(10000):

    y_pred = model(x_train_tensor)

    loss = loss_fn(y_pred, y_train_tensor)

    if iter % 1000 == 0:

        print(iter, loss.item())

    optimizer.zero_grad()

    loss.backward()

    optimizer.step()

#Creating the predicted value and putting tensor into a numpy array

y_pred_tensor = model(x_test_tensor)

y_pred = y_pred_tensor.detach().numpy()

#Plotting the Prediction vs the Actual

plt.scatter(y_test.values, y_pred)

plt.xlabel('Actual Sale')

plt.ylabel('Predicted Sale')

plt.title('Predicted Sale vs Actual Sale')

plt.show()
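For context on the R^2 numbers quoted above, here is a minimal sketch of the score, assuming the standard coefficient-of-determination definition (1 minus the residual sum of squares over the total sum of squares). The helper name `r2` and the sample values are illustrative only; they are not from the tutorial or the dataset.

```python
def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    # Residual sum of squares: error of the model's predictions
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: error of always predicting the mean
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Illustrative values only (hypothetical, not the advertising data)
actual = [1.0, 2.0, 3.0]
print(r2(actual, [1.0, 2.0, 3.0]))  # perfect predictions
print(r2(actual, [2.0, 2.0, 2.0]))  # always predicting the mean
print(r2(actual, [3.0, 2.0, 1.0]))  # worse than predicting the mean
```

Under this definition, a score near zero or slightly negative (such as -0.015) means the predictions are about as good as always predicting the mean of the target.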

I end up with the following outputs: