Training set input size: torch.Size([19400, 1, 6, 100])

Training set labels size: torch.Size([19400, 5])

Test set input size: torch.Size([4851, 1, 6, 100])

Test set labels size: torch.Size([4851, 5])

Batch Size: 264
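
For anyone who wants to reproduce the setup, a loader over tensors of the shapes listed above might be built roughly as follows. This is only a sketch: the random tensors stand in for the real data, the labels are assumed to be one-hot encoded, and the names `train_x`, `train_y`, and `train_loader` are placeholders that do not come from the original code.

```python
import torch
import torch.nn.functional as F
from torch.utils.data import DataLoader, TensorDataset

# Random stand-ins with the same shapes as the real training data (assumption).
train_x = torch.randn(19400, 1, 6, 100)
# One-hot labels over 5 classes, matching the [19400, 5] label shape (assumption).
train_y = F.one_hot(torch.randint(0, 5, (19400,)), num_classes=5)

train_loader = DataLoader(TensorDataset(train_x, train_y), batch_size=264, shuffle=True)

# Pull one batch to confirm the shapes the network will receive.
observations, labels = next(iter(train_loader))
print(observations.shape)  # torch.Size([264, 1, 6, 100])
print(labels.shape)        # torch.Size([264, 5])
```

With 19400 samples and a batch size of 264, the last batch is smaller than the others (128 samples), which matters for any code that assumes a fixed batch dimension.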

I am using a data loader to feed this data to a neural network with the architecture below:

import torch
import torch.nn as nn
import torch.nn.functional as F

class ConvUIL(nn.Module):
    input_size = [6, 100]
    output_size = 5
    input_channels = 1
    channels_conv1 = 16
    channels_conv2 = 48
    kernel_conv1 = [1, 13]
    kernel_conv2 = [1, 13]
    pool_conv1 = [1, 2]
    pool_conv2 = [1, 2]
    channels_conv3 = 96
    channels_conv4 = 128
    channels_conv5 = 264
    kernel_conv3 = [1, 11]
    kernel_conv4 = [1, 3]
    kernel_conv5 = [1, 3]
    fcl1_size = 50

    def __init__(self):
        super(ConvUIL, self).__init__()
        # Define the convolutional layers
        self.conv1 = nn.Conv2d(self.input_channels, self.channels_conv1, self.kernel_conv1)
        self.conv2 = nn.Conv2d(self.channels_conv1, self.channels_conv2, self.kernel_conv2)
        self.conv3 = nn.Conv2d(self.channels_conv2, self.channels_conv3, self.kernel_conv3)
        self.conv4 = nn.Conv2d(self.channels_conv3, self.channels_conv4, self.kernel_conv4)
        self.conv5 = nn.Conv2d(self.channels_conv4, self.channels_conv5, self.kernel_conv5)
        # Convolutional output size (stride = 1): 264 channels x 6 rows x 2 columns
        self.conv_out_size = int(264*6*2)
        # Define the fully connected layers
        self.fcl1 = nn.Linear(self.conv_out_size, self.fcl1_size)
        self.fcl2 = nn.Linear(self.fcl1_size, self.output_size)

    def forward(self, x):
        # Apply convolution 1 and pooling
        x = self.conv1(x)
        x = F.relu(x)
        x = F.max_pool2d(x, self.pool_conv1)
        # Apply convolution 2 and pooling
        x = self.conv2(x)
        x = F.relu(x)
        x = F.max_pool2d(x, self.pool_conv2)
        # Apply convolution 3
        x = self.conv3(x)
        x = F.relu(x)
        # Apply convolution 4
        x = self.conv4(x)
        x = F.relu(x)
        # Apply convolution 5
        x = self.conv5(x)
        x = F.relu(x)
        # Flatten x to one dimension per sample as input for the fully connected layers
        x = x.view(-1, self.conv_out_size)
        # Fully connected layers
        x = self.fcl1(x)
        x = F.relu(x)
        x = self.fcl2(x)
        return F.log_softmax(x, dim=1)
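
The hard-coded `264*6*2` can be checked by walking the input width through each layer. The sketch below does that in plain Python, assuming stride 1 and no padding for the convolutions (the `nn.Conv2d` defaults) and a pooling stride equal to the pooling kernel (the `F.max_pool2d` default). The helper names `conv_out` and `pool_out` are hypothetical.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, kernel):
    """Output length of max pooling whose stride equals its kernel size."""
    return (size - kernel) // kernel + 1

height, width = 6, 100            # input_size
# Every kernel and pooling window has height 1, so the height stays at 6.
width = conv_out(width, 13)       # conv1: 100 -> 88
width = pool_out(width, 2)        # pool1: 88 -> 44
width = conv_out(width, 13)       # conv2: 44 -> 32
width = pool_out(width, 2)        # pool2: 32 -> 16
width = conv_out(width, 11)       # conv3: 16 -> 6
width = conv_out(width, 3)        # conv4: 6 -> 4
width = conv_out(width, 3)        # conv5: 4 -> 2

conv_out_size = 264 * height * width
print(conv_out_size)              # 3168, i.e. 264 * 6 * 2
```

So the flattened size fed to `fcl1` is consistent with the layer definitions, and a shape mismatch in `x.view` is unlikely to come from this value.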

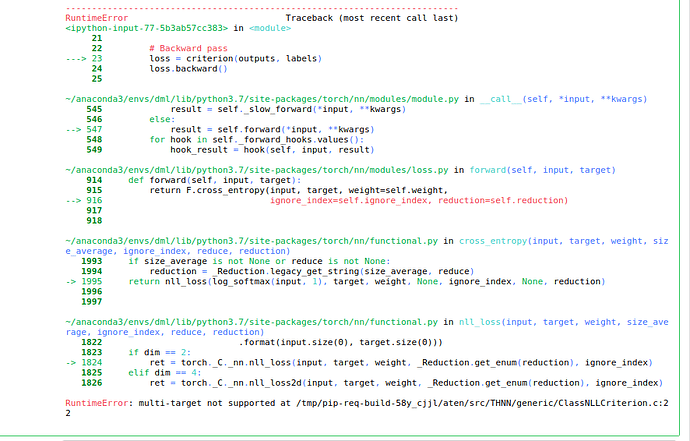

import torch.optim as optim

convUIL = ConvUIL()
criterion = nn.NLLLoss()
optimizer = optim.Adam(convUIL.parameters(), lr=0.01)

epochs = 20
total = wind_test_y.size(0)  # number of test samples

for epoch in range(epochs):
    # Train the model
    convUIL.train()
    for i, (observations, labels) in enumerate(train_dataset):
        # Forward pass
        optimizer.zero_grad()
        outputs = convUIL(observations)
        print(outputs)
        print(labels)

        # Backward pass
        # NLLLoss expects class indices of shape [batch]; the labels here are
        # [batch, 5], so this assumes they are one-hot and converts them.
        loss = criterion(outputs, labels.argmax(dim=1))
        loss.backward()

        # Optimize
        optimizer.step()

    # Test the model on the validation data
    correct = 0
    convUIL.eval()
    with torch.no_grad():
        for observations, labels in test_dataset:
            # Forward pass
            outputs = convUIL(observations)
            _, predicted = torch.max(outputs, 1)
            # Accumulate over batches instead of overwriting the count
            correct += (predicted == labels.argmax(dim=1)).sum().item()

    accuracy = correct / total
    print('Epoch [%2d/%2d], Accuracy: %.4f' % (epoch + 1, epochs, accuracy))
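
A note on the label format: `nn.NLLLoss` takes integer class indices of shape `[batch]` as its target, whereas the labels listed at the top have shape `[batch, 5]`. Assuming those labels are one-hot encoded (the post does not state this explicitly), the conversion and a per-batch correct count might look like the sketch below. The tensors are random stand-ins, and `count_correct` is a hypothetical helper.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

def count_correct(log_probs, onehot_labels):
    """Number of rows where the predicted class matches the one-hot label."""
    predicted = log_probs.argmax(dim=1)
    targets = onehot_labels.argmax(dim=1)
    return (predicted == targets).sum().item()

# Random stand-ins for one batch of network outputs and labels (assumption).
logits = torch.randn(8, 5)
log_probs = F.log_softmax(logits, dim=1)
onehot_labels = F.one_hot(torch.randint(0, 5, (8,)), num_classes=5)

# NLLLoss needs integer class indices, so convert the one-hot rows first.
targets = onehot_labels.argmax(dim=1)
loss = nn.NLLLoss()(log_probs, targets)

correct = count_correct(log_probs, onehot_labels)
print(loss.item(), correct / onehot_labels.size(0))
```

Summing `count_correct` over all test batches and dividing by the number of test samples gives the epoch accuracy as a Python float, which also avoids integer division on tensors.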