I am facing some issues with training a network with multiple outputs. It is an image classification problem: every image belongs to one of ten subclasses from two classes. Thus the labels tensor has size [60, 2, 10] (here 60 is the batch size).

For this model I am implementing the LeNet architecture with two output tensors and backpropagating the combined loss.
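A minimal sketch of the label shapes involved, assuming each of the two length-10 rows in a label is one-hot (exactly one 1 per row). `nn.CrossEntropyLoss` with integer targets expects one class index per sample, i.e. a tensor of shape [60] with dtype `torch.long`, whereas `labels[:,0,:].long()` keeps shape [60, 10]. Taking `argmax(dim=1)` over each row recovers the index. The random labels and logits below are made-up stand-ins for a real batch.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
batch_size, num_rows, num_classes = 60, 2, 10

# Made-up batch: one class index per row, then one-hot encoded -> [60, 2, 10].
idx = torch.randint(0, num_classes, (batch_size, num_rows))
labels = F.one_hot(idx, num_classes).float()

# CrossEntropyLoss with integer targets wants shape [60] (dtype long),
# so recover each row's class index with argmax over the last dimension.
target1 = labels[:, 0, :].argmax(dim=1)  # shape [60], dtype torch.int64
target2 = labels[:, 1, :].argmax(dim=1)

# Random logits standing in for the two network outputs, shape [60, 10] each.
out1 = torch.randn(batch_size, num_classes)
out2 = torch.randn(batch_size, num_classes)

loss_fn = nn.CrossEntropyLoss()
loss = loss_fn(out1, target1) + loss_fn(out2, target2)
print(labels.shape, target1.shape, target1.dtype)
```

Since PyTorch 1.10, `nn.CrossEntropyLoss` also accepts floating-point class-probability targets with the same shape as the input, so `labels[:, 0, :].float()` is another option there. If instead only one of the two rows is non-zero for each image (20 subclasses in total), `argmax` on the all-zero row would silently return 0, and flattening the labels to [60, 20] with a single 20-way output may fit the data better.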

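Here is my model:

```python
import torch
import torch.nn as nn
import torch.optim as optim

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        # Convolutional feature extractor: 1x32x32 input -> 16x5x5 feature maps
        self.cnn_model = nn.Sequential(
            nn.Conv2d(1, 6, 5),
            nn.ReLU(),
            nn.AvgPool2d(2, stride=2),
            nn.Conv2d(6, 16, 5),
            nn.ReLU(),
            nn.AvgPool2d(2, stride=2))
        # Fully connected classifier: 400 (= 16*5*5) features -> 10 scores
        self.fc_model = nn.Sequential(
            nn.Linear(400, 120),
            nn.LeakyReLU(),
            nn.Linear(120, 84),
            nn.LeakyReLU(),
            nn.Linear(84, 10))

    def forward(self, x):
        # First output
        x1 = self.cnn_model(x)
        x1 = x1.view(x1.size(0), -1)  # flatten to [batch, 400]
        x1 = self.fc_model(x1)
        # Second output: passes x through the same cnn_model and fc_model again
        x2 = self.cnn_model(x)
        x2 = x2.view(x2.size(0), -1)
        x2 = self.fc_model(x2)
        return x1, x2

net = LeNet().to(device)

loss_fn = nn.CrossEntropyLoss()
opt = optim.Adam(net.parameters())
```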
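In the `forward` above, `x1` and `x2` are computed by passing the same input through the same `cnn_model` and `fc_model`, so the two outputs always hold the same values and both losses end up training one shared classifier. A common alternative, sketched here as a hypothetical `TwoHeadLeNet`, shares the convolutional trunk but gives each output its own fully connected head. It assumes 1x32x32 inputs, which is what the 400 = 16*5*5 input size of the first `nn.Linear` implies.

```python
import torch
import torch.nn as nn

class TwoHeadLeNet(nn.Module):
    """Hypothetical variant: one shared LeNet trunk, two separate classifier heads."""

    def __init__(self, num_classes=10):
        super().__init__()
        # Shared feature extractor (same layers as the LeNet above).
        self.cnn_model = nn.Sequential(
            nn.Conv2d(1, 6, 5), nn.ReLU(), nn.AvgPool2d(2, stride=2),
            nn.Conv2d(6, 16, 5), nn.ReLU(), nn.AvgPool2d(2, stride=2),
        )
        # Each head has its own weights, so the two outputs can differ.
        self.head1 = self._make_head(num_classes)
        self.head2 = self._make_head(num_classes)

    @staticmethod
    def _make_head(num_classes):
        return nn.Sequential(
            nn.Linear(400, 120), nn.LeakyReLU(),
            nn.Linear(120, 84), nn.LeakyReLU(),
            nn.Linear(84, num_classes),
        )

    def forward(self, x):
        features = self.cnn_model(x).flatten(1)  # [batch, 16*5*5] = [batch, 400]
        return self.head1(features), self.head2(features)

torch.manual_seed(0)
net = TwoHeadLeNet()
x = torch.randn(60, 1, 32, 32)  # random stand-in batch of 60 grayscale 32x32 images
out1, out2 = net(x)
print(out1.shape, out2.shape)
```

Sharing the trunk keeps the parameter count close to a single LeNet while letting each head specialize; two fully separate networks would also work but double the convolutional weights.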

This is my training loop:

```python
%%time
import matplotlib.pyplot as plt

loss_arr = []
loss_epoch_arr = []
epochs = 25

for epoch in range(epochs):
    for i, data in enumerate(train_loader, 0):
        inputs, labels = data
        inputs, labels = inputs.to(device), labels.to(device)
        opt.zero_grad()
        out1, out2 = net(inputs)
        # labels has shape [60, 2, 10]; each output gets one [60, 10] slice
        loss1 = loss_fn(out1, labels[:, 0, :].long())
        loss2 = loss_fn(out2, labels[:, 1, :].long())
        loss = loss1 + loss2  # combined loss of both outputs
        loss.backward()
        opt.step()
        loss_arr.append(loss.item())
    loss_epoch_arr.append(loss.item())
    # evaluation(), train_loader, testloader and trainloader are defined elsewhere (not shown)
    print("Epoch:%d/%d,Test_Acc: %0.2f,Train_Acc: %0.2f" % (epoch, epochs, evaluation(testloader), evaluation(trainloader)))

plt.plot(loss_epoch_arr)
plt.show()
```

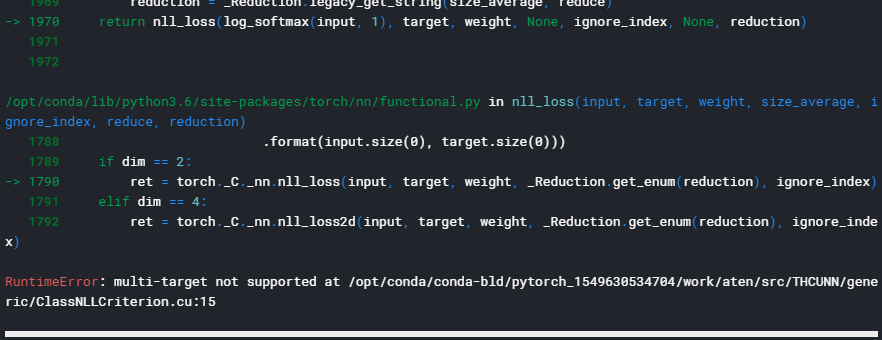

But I am getting the following error:

Thanks in advance. If you have any suggestions, please provide some code, as I am a beginner.
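For the training loop, here is a self-contained sketch of one way to wire it up, assuming each [10]-length label row is one-hot. It uses synthetic random data in place of the real dataset, a smaller stand-in `TwoHeadNet` (shared LeNet-style trunk, one `nn.Linear` per head), and a hypothetical `evaluation_two_heads` helper that reports one accuracy per head, since the post's own `evaluation` function is not shown.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

class TwoHeadNet(nn.Module):
    """Hypothetical stand-in: shared LeNet-style trunk, two 10-way heads."""
    def __init__(self):
        super().__init__()
        self.trunk = nn.Sequential(
            nn.Conv2d(1, 6, 5), nn.ReLU(), nn.AvgPool2d(2, stride=2),
            nn.Conv2d(6, 16, 5), nn.ReLU(), nn.AvgPool2d(2, stride=2),
            nn.Flatten(),  # [batch, 400]
        )
        self.head1 = nn.Linear(400, 10)
        self.head2 = nn.Linear(400, 10)

    def forward(self, x):
        f = self.trunk(x)
        return self.head1(f), self.head2(f)

def evaluation_two_heads(dataloader, model):
    """Hypothetical helper: accuracy (%) of each head, for one-hot labels of shape [N, 2, 10]."""
    model.eval()
    correct1 = correct2 = total = 0
    with torch.no_grad():
        for inputs, labels in dataloader:
            inputs, labels = inputs.to(device), labels.to(device)
            out1, out2 = model(inputs)
            correct1 += (out1.argmax(dim=1) == labels[:, 0, :].argmax(dim=1)).sum().item()
            correct2 += (out2.argmax(dim=1) == labels[:, 1, :].argmax(dim=1)).sum().item()
            total += labels.size(0)
    model.train()
    return 100 * correct1 / total, 100 * correct2 / total

# Synthetic data standing in for the real train_loader: 240 random 32x32 images.
idx = torch.randint(0, 10, (240, 2))
labels_all = F.one_hot(idx, 10).float()  # [240, 2, 10]
train_loader = DataLoader(TensorDataset(torch.randn(240, 1, 32, 32), labels_all),
                          batch_size=60, shuffle=True)

net = TwoHeadNet().to(device)
loss_fn = nn.CrossEntropyLoss()
opt = optim.Adam(net.parameters())

loss_epoch_arr = []
epochs = 3
for epoch in range(epochs):
    for inputs, labels in train_loader:
        inputs, labels = inputs.to(device), labels.to(device)
        opt.zero_grad()
        out1, out2 = net(inputs)
        # Convert each one-hot row to class indices of shape [batch] for CrossEntropyLoss.
        loss1 = loss_fn(out1, labels[:, 0, :].argmax(dim=1))
        loss2 = loss_fn(out2, labels[:, 1, :].argmax(dim=1))
        loss = loss1 + loss2
        loss.backward()
        opt.step()
    loss_epoch_arr.append(loss.item())  # loss of the last batch in this epoch
    acc1, acc2 = evaluation_two_heads(train_loader, net)
    print("Epoch: %d/%d, Train_Acc head1: %0.2f, head2: %0.2f" % (epoch + 1, epochs, acc1, acc2))
```

On random data the accuracies stay near chance; the point is only that the shapes line up. With a real dataset, swap in the actual loaders and a test loader for the second `evaluation_two_heads` call.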