Thank you very much for your answer, Thomas V.

The article [1] describes several ways to deal with this problem, among them:

U-ones, where the values of Uncertain (2) are converted to ones (1).

U-zeros, where the values of Uncertain (2) are converted to zeros (0).

U-Multiclass, where the values of Uncertain (2) are treated as a class of their own.
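To make the three policies concrete, here is a minimal sketch of how I understand them, applied to a single label vector. The names `UNCERTAIN`, `u_ones` and `u_zeros` are hypothetical helpers I made up for illustration, and the label vector is just an example; this is not code from the article.

```python
import torch

# Example label vector for one image: 14 observations, 2 marks "uncertain".
labels = torch.tensor([0., 0., 1., 1., 0., 2., 0., 2., 0., 0., 0., 0., 0., 1.])

UNCERTAIN = 2.0  # assumed encoding of the uncertain label, as used in this post


def u_ones(y: torch.Tensor) -> torch.Tensor:
    # U-ones: every uncertain entry becomes 1.
    return torch.where(y == UNCERTAIN, torch.ones_like(y), y)


def u_zeros(y: torch.Tensor) -> torch.Tensor:
    # U-zeros: every uncertain entry becomes 0.
    return torch.where(y == UNCERTAIN, torch.zeros_like(y), y)


labels_u_ones = u_ones(labels)
labels_u_zeros = u_zeros(labels)
# U-Multiclass: keep 0, 1, 2 as three class indices (int64 for later indexing).
labels_u_multi = labels.long()
```

With U-ones and U-zeros the target stays a binary vector of length 14, while U-Multiclass keeps three possible values per observation.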

For U-Multiclass, I build the target with the following code (one-hot encoding):

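```python
import torch

# 14 labels, one per observation; 2 marks "uncertain"
labels = torch.tensor([0., 0., 1., 1., 0., 2., 0., 2., 0., 0., 0., 0., 0., 1.])
labels = labels.type(torch.int64)  # scatter_ needs int64 indices
labels = labels.unsqueeze(0)       # add a batch dimension: shape (1, 14)
target = torch.zeros(labels.size(0), 14).scatter_(1, labels, 1.)  # my one-hot attempt
```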

After training, the results are good for U-zeros and U-ones, but not for U-Multiclass. (Note that the test set does not contain any Uncertain (2) labels.)

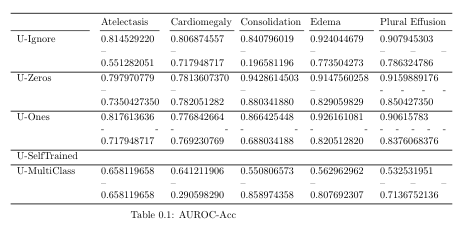

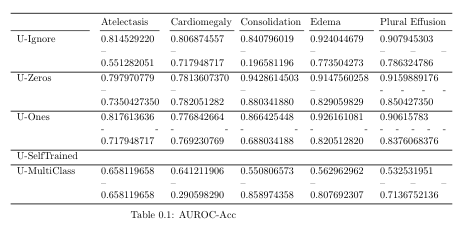

The figures above show the results obtained for 5 of the 14 classes.

If we look at the One Hot Encoding tensor,

labels = [0., 0., 1., 1., 0., 2., 0., 2., 0., 0., 0., 0., 0., 1.]

One_Hot_encoding_labels = [1., 1., 1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.]

It only records that three different label values (0, 1, 2) occur somewhere in the vector; it no longer says which of the 14 pathologies has which label. So is the one-hot encoding done correctly?
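For comparison, here is a sketch of what I think a per-pathology encoding might look like, where each of the 14 entries gets its own 3-way one-hot vector. This is only my assumption about what U-Multiclass needs, not something I have confirmed; it uses `torch.nn.functional.one_hot`, and the variable names are mine.

```python
import torch
import torch.nn.functional as F

labels = torch.tensor([0., 0., 1., 1., 0., 2., 0., 2., 0., 0., 0., 0., 0., 1.])

# What my scatter_ call produces: ones at columns 0, 1 and 2 of a (1, 14) tensor.
# The position of each label inside the vector is lost.
target = torch.zeros(1, 14).scatter_(1, labels.long().unsqueeze(0), 1.)

# Per-pathology alternative: one row per pathology, one column per class (0, 1, 2).
# one_hot expects int64 class indices.
per_pathology = F.one_hot(labels.long(), num_classes=3)  # shape (14, 3)

# The original labels can be recovered from this encoding.
recovered = per_pathology.argmax(dim=1)

print(per_pathology.shape)
print(per_pathology[5])  # row for the sixth pathology, whose label is 2
```

In this layout each row sums to 1 and `recovered` equals the original label vector, which is not possible with the `(1, 14)` tensor from `scatter_`.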

[1] Jeremy Irvin, Pranav Rajpurkar, Michael Ko, Yifan Yu, Silviana Ciurea-Ilcus, Chris Chute, Henrik Marklund, Behzad Haghgoo, Robyn Ball, Katie Shpanskaya, Jayne Seekins, David A. Mong, Safwan S. Halabi, Jesse K. Sandberg, Ricky Jones, David B. Larson, Curtis P. Langlotz, Bhavik N. Patel, Matthew P. Lungren, and Andrew Y. Ng. CheXpert: A Large Chest Radiograph Dataset with Uncertainty Labels and Expert Comparison. arXiv, 2019.