This is my code:

import torch

import torchvision

from torchvision import transforms, datasets

import torch.nn as nn

import torch.nn.functional as f

import torch.optim as optim

import os

import cv2

import numpy as np

from tqdm import tqdm

rebuild_data = True

class cats_and_dogs():

image_size = 50

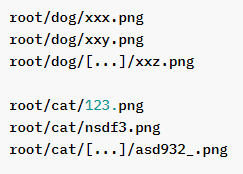

cats = “PetImages/Cat”

dogs = “PetImages/Dog”

labels = {cats: 0, dogs: 1}

training_data = []

catcount = 0

dogcount = 0

def make_training_data(self):

for label in self.labels:

print(label)

for f in tqdm(os.listdir(label)):

try:

path = os.path.join(label, f)

img = cv2.imread(path, cv2.IMREAD_GRAYSCALE)

img = cv2.resize(img, (self.IMG_SIZE, self.IMG_SIZE))

self.training_data.append([np.array(img), np.eye(2)[self.labels[label]]])

if label == self.cats:

self.catcount += 1

elif label == self.dogs:

self.dogcount += 1

except Exception as e:

pass

np.random.shuffle(self.training_data)

np.save("training_data.npy", self.training_data)

print("cats: ", self.catcount)

print("dogs: ", self.dogcount)

if rebuild_data == True:

Dogs_and_Cats = cats_and_dogs()

Dogs_and_Cats.make_training_data()

And this is the error:

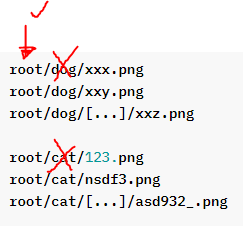

```
PetImages/Cat
Traceback (most recent call last):
  File "/Users/edenbrown/sample.ws45/new.py", line 51, in <module>
    Dogs_and_Cats.make_training_data()
  File "/Users/edenbrown/sample.ws45/new.py", line 27, in make_training_data
    for f in tqdm(os.listdir(label)):
FileNotFoundError: [Errno 2] No such file or directory: 'PetImages/Cat'
```
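The `FileNotFoundError` comes from `os.listdir(label)`. `"PetImages/Cat"` is a relative path, so Python looks for it starting from the current working directory (the folder the script was launched from), which is not necessarily `/Users/edenbrown/sample.ws45`, the folder that contains `new.py`. A minimal sketch, assuming the `PetImages` folder sits next to `new.py`; `resolve_data_dir` and `missing_dirs` are hypothetical helper names, not part of any library:

```python
from pathlib import Path
from typing import List, Optional


def resolve_data_dir(relative: str, base: Optional[Path] = None) -> Path:
    """Resolve `relative` against `base` instead of the current working directory.

    If `base` is None, the folder containing this script is used
    (assumption: the dataset folder lives next to the script).
    """
    if base is None:
        base = Path(__file__).resolve().parent
    return (Path(base) / relative).resolve()


def missing_dirs(dirs: List[Path]) -> List[Path]:
    """Return the entries of `dirs` that are not existing directories."""
    return [d for d in dirs if not d.is_dir()]
```

With these helpers defined above the class in `new.py`, the attributes could become `cats = str(resolve_data_dir("PetImages/Cat"))` and `dogs = str(resolve_data_dir("PetImages/Dog"))`; `os.listdir` then receives an absolute path no matter where the script is started from. Printing `os.getcwd()` and `missing_dirs([...])` before the loop is a quick way to confirm which directory is actually being searched.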