A simple example will show how my NN works. Usually the NN takes a step, then we give it a label and do backprop. In my case, the NN takes 10 steps (for example), and after that I count how many points it earned. If the NN earned minus 5 points, I need to reduce the gradient at all 10 steps, and if it earned plus 5 points, I need to increase the gradient. How do I remember the gradients and do backprop correctly?

Correct me if I am wrong: are you referring to batch size, i.e. calculating the loss for 10 inputs and then doing backprop?

If so, you can use the batch size. The loss is calculated over the whole batch (say, 10 samples), then the gradients are computed and backpropagated.
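As a rough sketch of the batch approach, assuming PyTorch: the model, the feature size of 4, and the random data below are hypothetical placeholders, not taken from the question. `CrossEntropyLoss` averages over the batch by default, so one `backward()` call covers all 10 samples.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy model: 4 input features, 3 output neurons (classes).
model = nn.Linear(4, 3)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
criterion = nn.CrossEntropyLoss()  # default reduction: mean over the batch

inputs = torch.randn(10, 4)          # placeholder batch of 10 samples
labels = torch.randint(0, 3, (10,))  # placeholder label for each sample

optimizer.zero_grad()
logits = model(inputs)               # shape (10, 3), raw scores (no softmax needed)
loss = criterion(logits, labels)     # one scalar for the whole batch
loss.backward()                      # gradients accumulated from all 10 samples
optimizer.step()
```

Note that this needs a label for every sample, which is what makes it different from scoring the network once after 10 steps.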

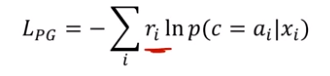

You understood the batch size correctly: it is the number of steps. I have 3 neurons in the NN output, and I used to use CrossEntropyLoss. In this situation, where the NN scored minus 5 points, I need to reduce the weight for the neuron that showed the highest value and increase it for the other two. For example, I decrease one neuron by 1 and increase the other two by 0.5 each. I can't implement this; I don't understand how to adjust the weights manually.

I need to apply such a formula after the softmax. How can I do that?
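One common way to get this behaviour without editing weights by hand is a policy-gradient (REINFORCE-style) loss: keep the log-probability of each chosen output for all 10 steps, then scale their sum by the score. This is a swapped-in technique, not something confirmed in the thread, and the network, state size, and random states below are hypothetical placeholders. A minimal sketch, assuming PyTorch:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical policy network: 4 state features, 3 output neurons (actions).
policy = nn.Linear(4, 3)
optimizer = torch.optim.SGD(policy.parameters(), lr=0.01)

log_probs = []
for step in range(10):
    state = torch.randn(4)  # placeholder for the real input at this step
    logits = policy(state)
    # Categorical(logits=...) applies the softmax internally.
    dist = torch.distributions.Categorical(logits=logits)
    action = dist.sample()
    # Storing the log-probability keeps the autograd graph for this step alive.
    log_probs.append(dist.log_prob(action))

reward = -5.0  # placeholder: points counted after the 10 steps

# Negative reward -> minimizing the loss lowers the probability of the chosen
# actions; positive reward -> it raises them.
loss = -reward * torch.stack(log_probs).sum()

optimizer.zero_grad()
loss.backward()  # one backward pass through all 10 stored steps
optimizer.step()
```

Because the softmax outputs sum to 1, pushing down the probability of the chosen neuron automatically pushes the other two up, so the "decrease one, increase the others" adjustment falls out of the gradient rather than being coded manually.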