I don’t understand why I get negative values for the training and validation loss.

Can someone please explain why, if it is apparent from the code?

These are the models:

class EncoderRNN(nn.Module):
    def __init__(self, enbedding_size, hidden_size):
        super(EncoderRNN, self).__init__()
        self.hidden_size = hidden_size
        self.lstm = nn.LSTM(enbedding_size, hidden_size,
                            batch_first=True,
                            dropout=dropout,
                            num_layers=LSTM_LAYERS,
                            bidirectional=False)

    def forward(self, embeds):
        output, (hidden, cell_state) = self.lstm(embeds)
        return hidden, cell_state

class DecoderRNN(nn.Module):
    def __init__(self, input_size, embedding_size, hidden_size, output_size):
        super(DecoderRNN, self).__init__()
        self.hidden_size = hidden_size
        self.embedding = nn.Embedding(input_size, embedding_size)
        self.lstm = nn.LSTM(embedding_size, hidden_size,
                            batch_first=False,
                            dropout=dropout,
                            num_layers=LSTM_LAYERS,
                            bidirectional=False)
        self.out = nn.Linear(hidden_size, output_size)

    def forward(self, inp, hidden, cell_state):
        inp = inp.unsqueeze(0)
        embs = self.embedding(inp)
        # output = F.relu(output)
        output, (hidden, cell_state) = self.lstm(embs, (hidden, cell_state))
        output = self.out(output)
        predictions = output.squeeze(0)
        return predictions, hidden, cell_state

class Seq2Seq(nn.Module):
    def __init__(self, Encoder_LSTM, Decoder_LSTM, len_vocab):
        super(Seq2Seq, self).__init__()
        self.Encoder_LSTM = Encoder_LSTM
        self.Decoder_LSTM = Decoder_LSTM
        self.len_vocab = len_vocab

    def forward(self, source, target, tfr=0.5):
        batch_size = source.shape[0]
        target_len = target.shape[1]
        target_vocab_size = self.len_vocab
        outputs = torch.zeros(target_len, batch_size, target_vocab_size).to(device)
        hidden_state_encoder, cell_state_encoder = self.Encoder_LSTM(source)
        x = target[torch.arange(target.size(0)), 0]
        for i in range(1, target_len):
            output, hidden_state_decoder, cell_state_decoder = self.Decoder_LSTM(x, hidden_state_encoder,
                                                                                 cell_state_encoder)
            outputs[i] = output
            best_guess = output.argmax(1)  # 0th dimension is batch size, 1st dimension is word embedding
            # Either pass the next word correctly from the dataset or use the earlier predicted word
            x = target[torch.arange(target.size(0)), i] if random.random() < tfr else best_guess
        return outputs
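
A note on a possible cause, offered as a sketch rather than a confirmed diagnosis: the decoder above returns the raw output of `self.out` (an `nn.Linear`), while the loss used in the training loop is `nn.NLLLoss`, which expects log-probabilities. With default settings, NLL loss is just the mean of the negated input value at each target index, so any positive raw score at the target position pushes the loss below zero. The plain-Python snippet below mimics that computation; `nll_loss`, `log_softmax`, and the numbers are hypothetical stand-ins for illustration, not the PyTorch implementations.

```python
import math

def nll_loss(rows, targets):
    """Mean of -rows[i][targets[i]]: the quantity NLL loss computes with default settings."""
    return sum(-row[t] for row, t in zip(rows, targets)) / len(targets)

def log_softmax(row):
    """Numerically stable log-softmax over one row of scores."""
    m = max(row)
    log_sum = m + math.log(sum(math.exp(v - m) for v in row))
    return [v - log_sum for v in row]

# Hypothetical raw scores for 2 tokens over a 3-word vocabulary.
raw_scores = [[4.0, 1.0, -2.0], [0.5, 3.0, 0.0]]
targets = [0, 1]

# Feeding raw scores: the target entries are positive, so the loss is negative.
loss_on_raw = nll_loss(raw_scores, targets)

# Feeding log-probabilities: every entry is <= 0, so the loss cannot be negative.
loss_on_log_probs = nll_loss([log_softmax(r) for r in raw_scores], targets)

print(loss_on_raw)        # -3.5
print(loss_on_log_probs)  # a small non-negative number
```

If this is indeed what is happening, the usual options in PyTorch would be to apply `F.log_softmax(predictions, dim=1)` to the decoder output before it reaches `nn.NLLLoss`, or to switch to `nn.CrossEntropyLoss`, which takes raw scores directly. Neither has been tested against the code in this post.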

This is the training and validation loop:

encoder = EncoderRNN(EMBEDDING_DIM, HIDDEN_DIM).to(device)
decoder = DecoderRNN(len(word_to_ix), EMBEDDING_DIM, HIDDEN_DIM, len(word_to_ix)).to(device)
seq2seq = Seq2Seq(encoder, decoder, len(word_to_ix))

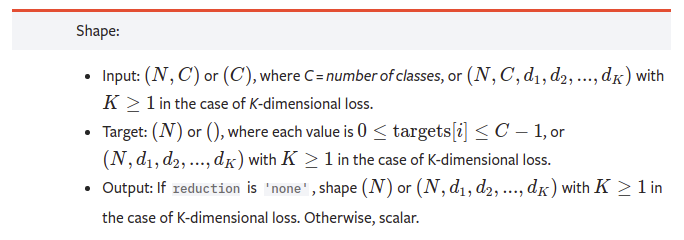

loss_function = nn.NLLLoss()
optimizer = optim.AdamW(seq2seq.parameters(), lr=learning_rate, eps=adamw_eps)

loss_values, validation_loss_values, validation_accuracy, val_f1_scores = [], [], [], []

for epoch in trange(epochs, desc="Epoch"):
    seq2seq.train()
    total_loss = 0
    train_pred, train_true, train_token_ids = [], [], []

    for step, batch in enumerate(train_loader):
        token_idx = batch[0][0].tolist()
        doc_tokens = []
        for doc in token_idx:
            doc_tokens.append(get_elem_from_index(doc, ix_to_word))
        embs = []
        for doc in doc_tokens:
            embs.append(embedding_model(doc))
        docs = torch.FloatTensor(embs).to(device)
        output = batch[1].to(device)

        seq2seq.zero_grad()
        pred_output = seq2seq(docs, output)
        pred_output = pred_output.permute((1, 2, 0))  # (batchsize, num of classes, num of tokens)
        loss = loss_function(pred_output, output)
        loss.backward()
        total_loss += loss.item()
        optimizer.step()

    avg_train_loss = total_loss / len(train_loader)
    print("Average train loss: {}".format(avg_train_loss))
    loss_values.append(avg_train_loss)

    seq2seq.eval()
    val_loss, val_accuracy = 0, 0
    val_rouge2gram = 0

    for batch in valid_loader:
        val_token_idx = batch[0][0].tolist()
        val_doc_tokens = []
        for doc in val_token_idx:
            val_doc_tokens.append(get_elem_from_index(doc, ix_to_word))
        val_embs = []
        for doc in val_doc_tokens:
            val_embs.append(embedding_model(doc))
        val_docs = torch.FloatTensor(val_embs).to(device)
        val_output = batch[1].to(device)

        with torch.no_grad():
            pred_output = seq2seq(val_docs, val_output)
            val_pred_output = pred_output.permute((1, 2, 0))  # (batchsize, num of classes, num of tokens)
            val_loss = loss_function(val_pred_output, val_output).item()

    average_val_loss = val_loss / len(valid_loader)
    validation_loss_values.append(average_val_loss)
    print("Validation loss: {}".format(average_val_loss))
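
A side observation on the validation numbers, separate from the sign of the loss: the training loop accumulates with `total_loss += loss.item()`, but the validation loop assigns `val_loss = ...item()`, so only the last batch survives before the division by `len(valid_loader)`. The toy example below shows the difference between the two patterns; the per-batch values are made up and simply stand in for `loss_function(...).item()`.

```python
# Hypothetical per-batch validation losses (stand-ins for loss_function(...).item()).
batch_losses = [1.0, 0.75, 0.5, 0.25]

# Pattern in the validation loop above: plain assignment keeps only the last batch.
val_loss = 0
for batch_loss in batch_losses:
    val_loss = batch_loss
overwritten_average = val_loss / len(batch_losses)

# Accumulating pattern, matching how total_loss is handled in the training loop.
val_loss = 0
for batch_loss in batch_losses:
    val_loss += batch_loss
accumulated_average = val_loss / len(batch_losses)

print(overwritten_average)  # 0.0625 (last batch divided by the number of batches)
print(accumulated_average)  # 0.625  (true mean over batches)
```

If the reported validation loss is meant to be an average over all batches, `val_loss += ...` would presumably be the intended form.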

Thank you very much!