First, I'm new to PyTorch and deep learning. I want to create a simple nonlinear regression model, but it apparently isn't converging. I tried changing some hyperparameters without success. This is the code; I guess I'm doing something obviously wrong.

    import torch

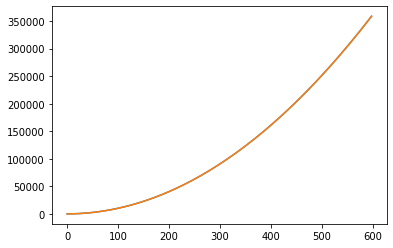

    x1 = torch.arange(1,600,1, dtype=torch.float32).view(-1,1)
    y1 = x1*x1

    model = torch.nn.Sequential(
        torch.nn.Linear(1,500),
        torch.nn.ReLU(),
        torch.nn.Linear(500,1)
    )

    criterion = torch.nn.MSELoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=0.001)

    for i in range(10000):
        y_pred = model(x1)
        loss = criterion(y_pred, y1)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        if i%100 == 0:
            print(loss)

    print(model(torch.tensor([6], dtype=torch.float32)))
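One thing that may be worth checking, offered as a sketch and not a confirmed diagnosis: the inputs above run from 1 to 599 and the targets reach roughly 359,000, so plain SGD with `lr=0.001` can take steps large enough for the loss to blow up. The variant below keeps the same model, loss, optimizer, and learning rate, and only standardizes `x1` and `y1` before training. The names `x_mean`, `x_std`, `y_mean`, `y_std`, `x_n`, `y_n`, `initial_loss`, `final_loss`, and `pred`, the fixed seed, and the shorter 2000-step loop are assumptions added for illustration.

```python
import torch

torch.manual_seed(0)  # assumption: fixed seed so repeated runs are comparable

x1 = torch.arange(1, 600, 1, dtype=torch.float32).view(-1, 1)
y1 = x1 * x1

# Assumption: standardize inputs and targets to zero mean and unit variance.
x_mean, x_std = x1.mean(), x1.std()
y_mean, y_std = y1.mean(), y1.std()
x_n = (x1 - x_mean) / x_std
y_n = (y1 - y_mean) / y_std

# Same architecture, loss, optimizer, and learning rate as in the code above.
model = torch.nn.Sequential(
    torch.nn.Linear(1, 500),
    torch.nn.ReLU(),
    torch.nn.Linear(500, 1),
)
criterion = torch.nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.001)

initial_loss = None
final_loss = None
for i in range(2000):  # assumption: fewer steps than the original, to keep the sketch quick
    y_pred = model(x_n)
    loss = criterion(y_pred, y_n)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    if initial_loss is None:
        initial_loss = loss.item()
    final_loss = loss.item()
    if i % 100 == 0:
        print(i, loss.item())

# Predict for x = 6: scale the input, then map the output back to original units.
with torch.no_grad():
    x_query = (torch.tensor([[6.0]]) - x_mean) / x_std
    pred = model(x_query) * y_std + y_mean
print(pred)
```

If the loss stays finite and decreases here while the original run does not, the scale of the data is a likely culprit. The fit to `x*x` may still be rough after this few steps, so more iterations or a different optimizer could be the next thing to try.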