Well… I have 2 global problems. Maybe I have other problems too, but I haven't found them, and they're not SO important (except optimization).

I tried to train models for basic upscaling (2x/4x) and for removing JPG defects.

(**All code details are at the bottom.**)

Problem 1:

After I trained model and test it, output looks normal in general, BUT it have artifacts on edges of image.

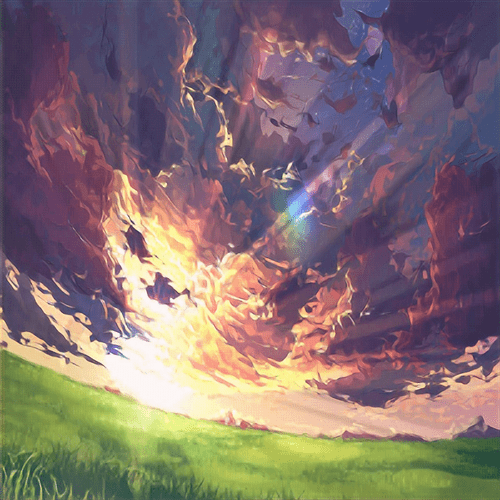

Example (input/output/target - from tests):

This artfacts small: ~ 5 - 20 px, and I could leave this, but if I don’t upscale whole image (I cant do this with big img like full hd to 4k), I upscale parts of image. So, in this situation this small artifact is more important.

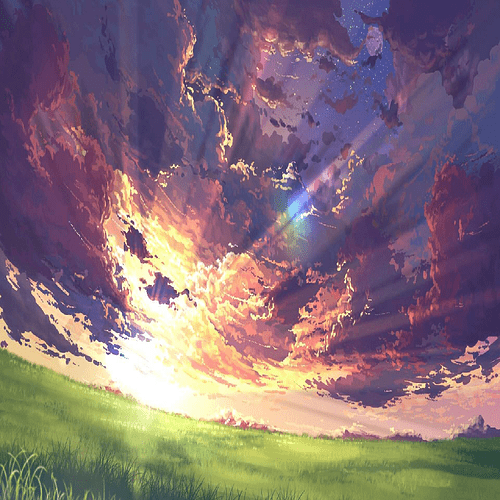

Example:
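For reference, upscaling in parts is often done with overlapping tiles: each tile is read with a small extra margin, and that margin is cropped off the upscaled result before stitching, so the band near each tile border is discarded. Below is a minimal sketch of only the coordinate bookkeeping along one axis. The helper name `tile_ranges` and the numbers (800 px tiles, 20 px margin, picked to cover the ~5-20 px artifact band) are assumptions for illustration and are not part of the code in this post.

```python
def tile_ranges(length, tile, margin):
    """Split [0, length) into tiles of at most `tile` px, each padded by `margin`.

    Returns tuples (read_start, read_end, keep_start, keep_end):
    read_* is the padded slice that would be fed to the model,
    keep_* is the region this tile is responsible for once the margin is cropped.
    """
    ranges = []
    for keep_start in range(0, length, tile):
        keep_end = min(keep_start + tile, length)
        read_start = max(keep_start - margin, 0)   # no padding past the image border
        read_end = min(keep_end + margin, length)
        ranges.append((read_start, read_end, keep_start, keep_end))
    return ranges


# Hypothetical numbers: a 1920 px wide image, 800 px tiles, 20 px margin.
for r in tile_ranges(1920, 800, 20):
    print(r)
```

With an upscale factor `s`, the upscaled tile would be cropped starting at `(keep_start - read_start) * s` and keeping `(keep_end - keep_start) * s` pixels. This only helps at the inner tile borders; the outer border of the whole image still has no real neighbours.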

Problem 2:

I have artifacts looks like gauss noise. I think that it is because my dataset is not best, I made it by myself, and it has not many images with some colors (so artifacts occur on images which have seldom colors). But may be it haw artifacts by another thing, therefore mentioned this.

Now I have my dataset (100 000 images) + another dataset (350 000 images), but I don’t use them all always, because it is too long

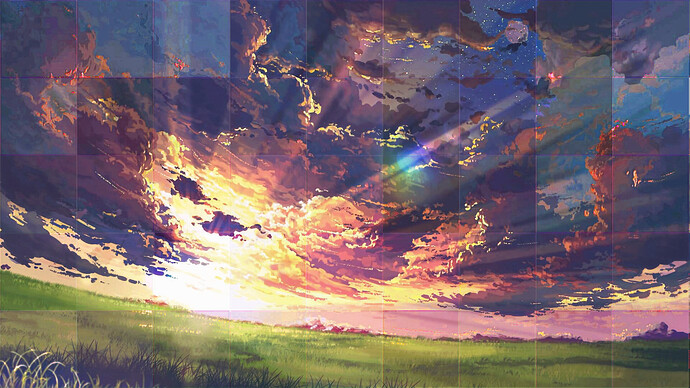

Example (model output/target with gauss noise) - it's after 100 000 images with 5 epochs:

target is bigger beacuse I resize inputs for the model, so that give max 800x800 image (other OOM error)

My question for both problems: Can I fix it and how? I just need to have more training, or something else? Or I have mistakes on layers, or preparing my dataset? I can’t check them all by myself because the processing will take 30 days on my computer with my code. XD

IMPORTANT: If you saw such a discussion with the same question and decision, you don’t have to explain it to me, just give the link to that, and I’ll check it.

And if you see some mistakes with optimization (I know - they are), please indicate this if it’s not difficult for you. It’s very important for me, because… Come on, 30 DAYS…

My code

I always set “ngf” to 64.

Model (example for 4x).

The model's layers always look like this:

    self.g0 = nn.Sequential(
        #nn.Dropout2d(p=0.2),
        nn.Conv2d(3, ngf * 4, 3, stride=1, padding=1, bias=False),
        nn.BatchNorm2d(ngf * 4),
        nn.ReLU(True),
    )
    self.g1 = nn.Sequential(
        nn.Conv2d(ngf * 4, ngf * 4, 3, stride=1, padding=1, bias=False),
        nn.BatchNorm2d(ngf * 4),
        nn.ReLU(True),
        nn.Conv2d(ngf * 4, ngf * 4, 3, stride=1, padding=1, bias=False),
        nn.BatchNorm2d(ngf * 4),
        nn.ReLU(True)
    )
    # first 2x upsampling
    self.main1 = nn.Sequential(
        nn.ConvTranspose2d(ngf * 4, ngf * 4, 4, stride=2, padding=1, bias=False),
        nn.BatchNorm2d(ngf * 4),
        nn.ReLU(True),
    )
    self.g2 = nn.Sequential(
        nn.Conv2d(ngf * 4, ngf * 4, 3, stride=1, padding=1, bias=False),
        nn.BatchNorm2d(ngf * 4),
        nn.ReLU(True),
        nn.Conv2d(ngf * 4, ngf * 4, 3, stride=1, padding=1, bias=False),
        nn.BatchNorm2d(ngf * 4),
        nn.ReLU(True),
    )
    # second 2x upsampling, then projection back to 3 channels
    self.main2 = nn.Sequential(
        nn.ConvTranspose2d(ngf * 4, ngf, 4, stride=2, padding=1, bias=False),
        nn.BatchNorm2d(ngf),
        nn.ReLU(True),
        nn.Conv2d(ngf, 3, 3, stride=1, padding=1, bias=False),
        nn.Tanh()
    )

The forward function looks like this:

    def forward(self, X):
        X = self.g0(X)
        X = self.g1(X) + X   # residual connection
        X = self.main1(X)
        X = self.g2(X) + X   # residual connection
        X = self.main2(X)
        return X
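To make the 4x factor of this model explicit, here is a small sketch of the spatial-size arithmetic using the standard output-size formulas for `nn.Conv2d` and `nn.ConvTranspose2d` (with `dilation=1` and `output_padding=0`, the defaults used above). The helper names are hypothetical and only illustrate the arithmetic.

```python
def conv_out(size, kernel, stride, padding):
    """Output size of nn.Conv2d along one axis (dilation=1)."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_transpose_out(size, kernel, stride, padding):
    """Output size of nn.ConvTranspose2d along one axis (dilation=1, output_padding=0)."""
    return (size - 1) * stride - 2 * padding + kernel


def model_out(size):
    size = conv_out(size, 3, 1, 1)            # g0 / g1: 3x3 convs keep the size
    size = conv_transpose_out(size, 4, 2, 1)  # main1: doubles the size
    size = conv_out(size, 3, 1, 1)            # g2: keeps the size
    size = conv_transpose_out(size, 4, 2, 1)  # main2: doubles the size again
    return conv_out(size, 3, 1, 1)            # final 3x3 conv keeps the size


print(model_out(200))  # 800
```

Every one of these layers uses `padding=1`, which in PyTorch means zero padding by default, so the pixels near the border are computed partly from zeros instead of real image content. That may be related to the edge artifacts from Problem 1, but it is only a guess.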

And the training step:

    def training_step(self, batch, batch_idx):
        x, _ = batch
        x = x.view(self.hparams['batch_size'], x.size()[-3], x.size()[-2], x.size()[-1]).to(self.device)
        #x = F.interpolate(x, size=(int(y[0]), int(y[1])), mode='bicubic', align_corners=False)
        # input: the image downscaled by the factor self.increase
        g = self.generator(F.interpolate(x, size=(x.size()[-2] // self.increase, x.size()[-1] // self.increase), mode='bicubic', align_corners=False))
        #########################____GRADS____############################
        self.generator.zero_grad()
        # target: the image resized to a multiple of self.increase so it matches the output size
        g_real_loss = criterion(g, F.interpolate(x, size=(x.size()[-2] // self.increase * self.increase, x.size()[-1] // self.increase * self.increase), mode='bicubic', align_corners=False))
        g_real_loss.backward(retain_graph=True)
        self.opt_g.step()
        ###################################################################

Example for “remove defects”:

Model's layers:

    self.ngf = ngf
    self.g0 = nn.Sequential(
        #nn.Dropout2d(p=0.2),
        nn.Conv2d(3, ngf*4, 3, stride=1, padding=1, bias=False),
        nn.BatchNorm2d(ngf*4),
        nn.ReLU(inplace=False),
    )
    self.g1 = nn.Sequential(
        nn.Conv2d(ngf * 4, ngf * 4, 3, stride=1, padding=1, bias=False),
        nn.BatchNorm2d(ngf * 4),
        nn.ReLU(inplace=False),
        nn.Conv2d(ngf * 4, ngf *4, 3, stride=1, padding=1, bias=False),
        nn.BatchNorm2d(ngf * 4),
        nn.ReLU(inplace=False)
    )
    self.main1 = nn.Sequential(
        nn.Conv2d(ngf * 4, ngf * 4, 3, stride=1, padding=1, bias=False),
        nn.BatchNorm2d(ngf * 4),
        nn.ReLU(inplace=False),
        # state size. (ngf*8) x 4 x 4
    )
    self.g2 = nn.Sequential(
        nn.Conv2d(ngf * 4, ngf * 4, 3, stride=1, padding=1, bias=False),
        nn.BatchNorm2d(ngf * 4),
        nn.ReLU(inplace=False),
        nn.Conv2d(ngf * 4, ngf * 4, 3, stride=1, padding=1, bias=False),
        nn.BatchNorm2d(ngf * 4),
        nn.ReLU(inplace=False),
    )
    self.main2 = nn.Sequential(
        nn.Conv2d(ngf * 4, ngf*4, 3, stride=1, padding=1, bias=False),
        nn.BatchNorm2d(ngf*4),
        nn.ReLU(inplace=False),
        nn.Conv2d(ngf*4, 3, 3, stride=1, padding=1, bias=False),
        nn.Tanh()
    )

And the training step. I have 3 versions of this step: one where I just train on the whole resized image, one where I cut the image and train on the parts, and one where I cut the image, run the parts through forward, but compute the loss with the criterion over all the parts together against the whole target image (I cut it twice: the first cut is to avoid the OOM error, the second is to train by parts). I chose the last one, because it doesn't need many resources in forward.

I commented out the parts left over from the old versions of the step.

    def training_step_crop(self, batch, batch_idx):
        x, y = batch
        # first cut: split the image into big_res x big_res blocks to avoid OOM
        big_res = max(round(x.size()[-2] / self.hparams['max_input'][0] + 0.5), round(x.size()[-1] / self.hparams['max_input'][1] + 0.5))
        for r0 in range(big_res):
            for r1 in range(big_res):
                big_tmp_x = torch.chunk(x,big_res,dim=-2)[r0]
                big_tmp_x = torch.chunk(big_tmp_x,big_res,dim=-1)[r1]
                big_tmp_y = torch.chunk(y,big_res,dim=-2)[r0]
                big_tmp_y = torch.chunk(big_tmp_y,big_res,dim=-1)[r1]
                # second cut: split each block into res x res crops for forward
                res = max(round(big_tmp_x.size()[-2] / self.hparams['max_crop'][0] + 0.5), round(big_tmp_x.size()[-1] / self.hparams['max_crop'][1] + 0.5))
                outs = []
                for it0 in range(res):
                    out0 = []
                    for it1 in range(res):
                        tmp_x = torch.chunk(big_tmp_x,res,dim=-2)[it0]
                        tmp_x = torch.chunk(tmp_x,res,dim=-1)[it1]
                        #tmp_y = torch.chunk(y,res,dim=-2)[it0]
                        #tmp_y = torch.chunk(tmp_y,res,dim=-1)[it1]
                        #print('Memory:', get_gpu_memory_map()) - I checked memory...
                        tmp_x = tmp_x.view(1, 3, tmp_x.size()[-2], tmp_x.size()[-1]).to(self.device)
                        #tmp_y = tmp_y.view(1, 3, tmp_y.size()[-2], tmp_y.size()[-1]).to(self.device)
                        tmp_x = self.generator(tmp_x)
                        out0.append(tmp_x.cpu())
                        #########################__GENERATOR__#############################
                        #self.generator.zero_grad()
                        #loss = self.criterion(tmp_x, tmp_y)
                        #loss.backward(retain_graph=True)
                        #self.opt.step()
                        ###################################################################
                        del tmp_x
                        #del tmp_y
                    # stitch one row of crops along the width
                    outs.append(torch.cat(out0, 3))
                    del out0
                # stitch the rows along the height
                outs = torch.cat(outs, 2)#.clone()
                #####################################
                self.generator.zero_grad()
                loss = self.criterion(outs.cpu(),big_tmp_y)
                loss.backward()
                self.opt.step()
                #####################################
                del outs
                torch.cuda.empty_cache()
                #with torch.cuda.device('cuda'):
                #torch.cuda.empty_cache()
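A side note on the chunk counts `big_res` and `res` above: `round(a / b + 0.5)` looks intended as a ceiling, but it does not always behave like one, because Python's `round` rounds exact halves to the nearest even integer. The sketch below compares it with `math.ceil`; the helper names and the 800 px limit are only illustrative assumptions.

```python
import math


def n_chunks_round(size, max_size):
    """Chunk count as computed in the training step above."""
    return round(size / max_size + 0.5)


def n_chunks_ceil(size, max_size):
    """Smallest chunk count such that each chunk is at most max_size."""
    return math.ceil(size / max_size)


# size, count from round(), count from ceil()
for size in (800, 1000, 1600, 2400):
    print(size, n_chunks_round(size, 800), n_chunks_ceil(size, 800))
```

For sizes that are not an exact multiple of the limit the two agree, but for 800 and 2400 the `round` version asks for one chunk more than needed (2 instead of 1, and 4 instead of 3), which means extra forward passes.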

My dataset (~same for both models):

“RandomPilTransforms” is my class that applies the same flips and deformations to a sequence of images (so here it's useless, but still…).

To simulate JPG defects, I save the image as a low-quality JPG and load it again, because I didn't find a function that does this without saving. If you know of such a function, I'll be glad to hear about it.

    import os
    import random

    from PIL import Image
    from torch.utils.data import Dataset
    from torchvision import transforms


    class MyDataset(Dataset):
        def __init__(self, path, input_size, quality=50, max_len=False, save_path=r'data\cash'):
            RPTargs = {
                'perspective': {
                    'deformation': 0.5,
                    'chance': 0.5,
                    'resample': Image.BICUBIC,
                    'fill': 0,
                    'fillcolor': None
                },
                'flip_horizontal': 0.5,
                'flip_vertical': 0.5,
                'rotate_right': 0.5,
                'rotate_left': 0.5
            }
            self.transform = RandomPilTransforms(**RPTargs)
            self.path = path
            self.quality = quality
            self.save_path = save_path
            self.piltotensor = transforms.ToTensor()
            self.input_size = input_size
            # collect every file under `path`
            self.names = []
            for dirpath,_,filenames in os.walk(path):
                for f in filenames:
                    self.names.append(os.path.abspath(os.path.join(dirpath, f)))
            random.seed()
            random.shuffle(self.names)
            print('')
            print('All dataset:', len(self.names))
            self.len = len(self.names)
            if max_len:
                self.len = min(max_len, self.len)
            print('Using dataset:', self.len)
            print('')

        def __getitem__(self, index):
            try:
                y = Image.open(self.names[index]).convert('RGB')
            except Exception:
                # unreadable file: drop it and retry with the file now at this index
                del self.names[index]
                return self.__getitem__(index)
            # shrink images that have more pixels than input_size, keeping the aspect ratio
            if y.size[0] * y.size[1] > self.input_size[0] * self.input_size[1]:
                if y.size[0] > y.size[1]:
                    y = y.resize((self.input_size[0], self.input_size[0] * y.size[1] // y.size[0]))
                else:
                    y = y.resize((self.input_size[1] * y.size[0] // y.size[1], self.input_size[1]))
            y = self.transform([y])[0]
            x = self.noisy(y)
            #y = cv2.convertScaleAbs(np.asarray(y))
            x = self.piltotensor(x)
            y = self.piltotensor(y)
            return x, y

        def noisy(self, image):
            # save as low-quality JPG and reload to get JPG defects
            image.save(self.save_path + r'\cash_img.jpg', quality=self.quality)
            image = Image.open(self.save_path + r'\cash_img.jpg').convert('RGB')
            return image

        def __len__(self):
            return self.len
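Regarding the save-and-reload step in `noisy`: Pillow's `Image.save` accepts a file-like object as well as a path, so the JPG round trip can probably be done in memory with `io.BytesIO`. A minimal sketch, assuming Pillow is installed; the function name `jpeg_roundtrip` is hypothetical.

```python
import io

from PIL import Image


def jpeg_roundtrip(image, quality=50):
    """Return `image` after a JPEG encode/decode cycle done entirely in memory."""
    buffer = io.BytesIO()
    image.save(buffer, format='JPEG', quality=quality)  # encode into the buffer
    buffer.seek(0)                                       # rewind before reading
    return Image.open(buffer).convert('RGB')             # convert() forces the decode


if __name__ == '__main__':
    src = Image.new('RGB', (64, 48), (200, 30, 30))
    out = jpeg_roundtrip(src, quality=10)
    print(out.size, out.mode)
```

Besides skipping the disk write, this would also avoid several DataLoader workers overwriting the same `cash_img.jpg` file, if more than one worker is used.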
