I have two computers, both with PyTorch installed via Conda:

- One with an Intel CPU + 2 Titan X GPUs (call this the Intel computer).

- One with an AMD Threadripper CPU + 2 GTX 1080 Ti GPUs (call this the AMD computer).

I ran the same code with the same model on the same dataset, but the two computers produce different results.

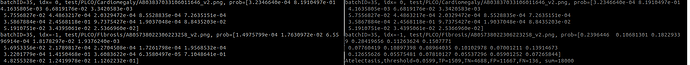

Let say if I run with a batch 512, then only the first haft (256 results) are matching between two computers, while the latter haft are different. Here is the screen shot of the output of the first(idx=0) and the last(idx=-1) samples in a batch:

The results on the left are from the Intel computer (which are correct), and those on the right are from the AMD computer.
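To pin down exactly where the two machines start to diverge within a batch, it can help to dump the outputs from each computer and compare them sample by sample rather than only spot-checking idx=0 and idx=-1. The following is a minimal sketch, assuming each machine's batch output has been converted to nested Python lists (for example with `Tensor.tolist()`) and saved; the helper name `mismatched_indices` and the tolerance values are hypothetical choices, not part of any library:

```python
import math
from typing import List, Sequence


def mismatched_indices(out_a: Sequence[Sequence[float]],
                       out_b: Sequence[Sequence[float]],
                       rel_tol: float = 1e-5,
                       abs_tol: float = 1e-6) -> List[int]:
    """Return the batch indices whose per-sample outputs differ beyond tolerance.

    Hypothetical helper: out_a and out_b are per-sample rows of floats,
    e.g. produced by calling .tolist() on each machine's output tensor.
    """
    if len(out_a) != len(out_b):
        raise ValueError("batch sizes differ")
    bad = []
    for idx, (row_a, row_b) in enumerate(zip(out_a, out_b)):
        # A sample counts as mismatched if its length differs or any value
        # falls outside the relative/absolute tolerance.
        if len(row_a) != len(row_b) or not all(
            math.isclose(x, y, rel_tol=rel_tol, abs_tol=abs_tol)
            for x, y in zip(row_a, row_b)
        ):
            bad.append(idx)
    return bad


if __name__ == "__main__":
    # Synthetic stand-in data (not real results): 512 samples where
    # only the second half has been perturbed.
    intel = [[float(i), float(i) * 0.5] for i in range(512)]
    amd = [row[:] if i < 256 else [row[0] + 1.0, row[1]]
           for i, row in enumerate(intel)]
    bad = mismatched_indices(intel, amd)
    print(bad[0], len(bad))  # prints "256 256"
```

Printing the first mismatching index and the number of mismatches shows whether the divergence begins exactly at index 256, which is what the screenshots suggest.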

Has anyone run into the same problem, and does anyone know how to solve it?

Thanks in advance.