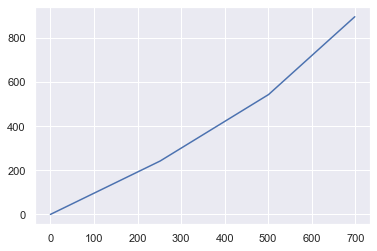

Note that unlike other questions, this is not about any RNN structure. I wish to create a model that has changing gradients, and will look like below. The breakpoints are manually supplied.

The model that I have created is as follows:

class Trend(nn.Module):

"""

Broken Trend model, with breakpoints as defined by user.

"""

def __init__(self, breakpoints):

super().__init__()

self.bpoints = breakpoints[None, :]

self.init_layer = nn.Linear(1,1) # first linear bit

# extract gradient and bias

w = self.init_layer.weight

b = self.init_layer.bias

self.params = [[w,b]] # save it to buffer

if len(breakpoints>0):

# create deltas which is how the gradient will change

deltas = torch.randn(len(breakpoints)) / len(breakpoints) # initialisation

self.deltas = nn.Parameter(deltas) # make it a parameter

for d, x1 in zip(self.deltas, breakpoints):

y1 = w *x1 + b # find the endpoint of line segment (x1, y1)

w = w + d # add on the delta to gradient

b = y1 - w * x1 # find new bias of line segment

self.params.append([w,b]) # add to buffer

# create buffer

self.wb = torch.zeros(len(self.params), len(self.params[0]))

def __copy2array(self):

"""

Saves parameters into wb

"""

for i in range(self.wb.shape[0]):

for j in range(self.wb.shape[1]):

self.wb[i,j] = self.params[i][j]

def forward(self, x):

# get the line segment area (x_sec) for each x

x_sec = x >= self.bpoints

x_sec = x_sec.sum(1)

self.__copy2array() # copy across parameters into matrix

# get final prediction y = mx +b for relevant section

return x*self.wb[x_sec][:,:1] + self.wb[x_sec][:,1:]

However, once I attempt to train it I get the error RuntimeError: Trying to backward through the graph a second time, but the buffers have already been freed. Specify retain_graph=True when calling backward the first time.

I obtained the above plot by doing:

time = torch.arange(700).float()[:,None]

y_pred = model(time)

plt.plot(time, y_pred.detach().numpy())

plt.show()

So we know the forward pass is working as expected. However the backward pass is not quite working. Was wondering what I need to change to get it working.

If you’re wondering why __copy2array is being used, when I tried to use torch.Tensor(self.params) it destroyed the gradients in those parameters. Thanks in advance.