Hi,

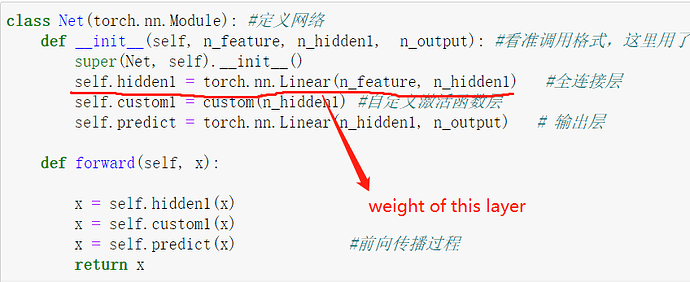

The linear layer's weight is actually a matrix (n_feature x n_hidden1, stored by PyTorch as n_hidden1 x n_feature), not a vector. Below I show how to stop training of W[0, 0].

```python
model.hidden1.weight[0, 0].requires_grad = False
```

Likewise, you can set requires_grad to False for other weights if you do not want to learn them.

EDIT: Frank pointed out a mistake in this solution: requires_grad can only be set on a whole tensor, not on an individual element.
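Because requires_grad is a per-tensor flag, the finest thing it can freeze is an entire parameter. The sketch below assumes a hypothetical `Net` whose `hidden1` layer maps `n_feature` inputs to `n_hidden1` units (sizes picked arbitrarily); it shows the stored weight shape and freezes the whole `hidden1.weight` matrix, not a single element.

```python
import torch
import torch.nn as nn

# Hypothetical sizes and model, mirroring the names used in this thread.
n_feature, n_hidden1 = 4, 3

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.hidden1 = nn.Linear(n_feature, n_hidden1)
        self.out = nn.Linear(n_hidden1, 1)

    def forward(self, x):
        return self.out(torch.relu(self.hidden1(x)))

torch.manual_seed(0)
model = Net()

# nn.Linear stores its weight as (out_features, in_features).
print(model.hidden1.weight.shape)  # torch.Size([3, 4])

# Allowed: the whole weight is a leaf tensor, so its flag can be changed.
model.hidden1.weight.requires_grad_(False)

opt = torch.optim.SGD(model.parameters(), lr=0.1)
before = model.hidden1.weight.clone()
model(torch.randn(8, n_feature)).sum().backward()
opt.step()  # SGD skips parameters whose .grad is None

# The entire matrix is unchanged: it is all-or-nothing per tensor.
print(torch.equal(before, model.hidden1.weight))  # True
```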

Thanks

Hi Pranavan (and Jin)!

No, this won’t do what you want. requires_grad applies to entire tensors and not individual elements. And to prevent unexpected errors, pytorch won’t let you do this.

Probably the easiest way to freeze part of a tensor is to store the value of the element you want frozen, let the optimizer modify the entire tensor, and then restore the original value of the element you want frozen.

Thus:

```python
>>> import torch
>>> torch.__version__
'1.9.0'
>>>
>>> _ = torch.manual_seed (2021)
>>>
>>> lin = torch.nn.Linear (2, 3)
>>> lin.weight[0, 0].requires_grad = False
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
RuntimeError: you can only change requires_grad flags of leaf variables. If you want to use a computed variable in a subgraph that doesn't require differentiation use var_no_grad = var.detach().
>>>
>>> opt = torch.optim.SGD (lin.parameters(), lr = 0.1)
>>>
>>> lin.weight
Parameter containing:
tensor([[-0.5226,  0.0189],
        [ 0.3430,  0.3053],
        [ 0.0997, -0.4734]], requires_grad=True)
>>> w00 = lin.weight[0, 0].item()
>>> lin (torch.ones (2)).sum().backward()
>>> opt.step()
>>> lin.weight
Parameter containing:
tensor([[-6.2264e-01, -8.1068e-02],
        [ 2.4303e-01,  2.0531e-01],
        [-3.3811e-04, -5.7337e-01]], requires_grad=True)
>>> with torch.no_grad():
...     lin.weight[0, 0] = w00
...
>>> lin.weight
Parameter containing:
tensor([[-5.2264e-01, -8.1068e-02],
        [ 2.4303e-01,  2.0531e-01],
        [-3.3811e-04, -5.7337e-01]], requires_grad=True)
```
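The same store-and-restore idea extends to many elements and to a full training loop. A minimal sketch, assuming a boolean mask named `frozen` (a hypothetical name, with an arbitrary choice of frozen entries) and restoring those entries after every `opt.step()`:

```python
import torch

torch.manual_seed(2021)
lin = torch.nn.Linear(2, 3)
# Momentum is included to show that the restore step keeps the frozen
# values fixed regardless of any optimizer state.
opt = torch.optim.SGD(lin.parameters(), lr=0.1, momentum=0.9)

# Hypothetical choice of frozen elements: W[0, 0] and W[2, 1].
frozen = torch.zeros_like(lin.weight, dtype=torch.bool)
frozen[0, 0] = True
frozen[2, 1] = True

initial = lin.weight.detach().clone()  # kept only for the check at the end
frozen_values = initial[frozen]        # boolean indexing returns a copy

for _ in range(5):
    opt.zero_grad()
    lin(torch.randn(4, 2)).pow(2).mean().backward()
    opt.step()
    # Overwrite whatever the optimizer did to the frozen elements.
    with torch.no_grad():
        lin.weight[frozen] = frozen_values

print(torch.equal(lin.weight.detach()[frozen], initial[frozen]))    # True
print(torch.equal(lin.weight.detach()[~frozen], initial[~frozen]))  # False
```

Because the frozen entries are overwritten after each step, the optimizer's choice of momentum or weight decay does not matter for them; only the remaining entries actually train.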

Best.

K. Frank

The method you provided is very useful and solved the problem. Thank you very much.


Hi Frank,

It was a mistake. Thanks for pointing it out.

But I have a quick question about storing the weights and restoring them after training. Weights w3-w100 still take part in training the whole network, i.e., they influence the training of other weights in the network. That is not what the original question intended.

Ideally, gradients should not flow through weights w3-w100 during training.

Thanks
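A frozen weight that keeps a nonzero value still enters the forward pass, so it necessarily shapes the gradients of other parameters; neither requires_grad nor store-and-restore can change that. If the intent is that those connections take no part at all (an assumption about the question, not something confirmed in this thread), one option is to multiply the weight by a fixed 0/1 mask inside `forward`. `MaskedLinear` and the mask pattern below are hypothetical illustrations.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class MaskedLinear(nn.Linear):
    """nn.Linear whose weight is multiplied elementwise by a fixed 0/1 mask."""

    def __init__(self, in_features, out_features, mask):
        super().__init__(in_features, out_features)
        # A buffer follows .to(device) but is not a trainable parameter.
        self.register_buffer("mask", mask.to(self.weight.dtype))

    def forward(self, x):
        # Masked-out entries contribute nothing to the output, so no gradient
        # flows through them to the input or to upstream parameters.
        return F.linear(x, self.weight * self.mask, self.bias)

torch.manual_seed(0)
mask = torch.ones(3, 4)
mask[0, 2:] = 0.0  # hypothetical: cut the connections W[0, 2] and W[0, 3]

layer = MaskedLinear(4, 3, mask)
x = torch.randn(5, 4, requires_grad=True)
layer(x).sum().backward()

# Gradients of the masked weight entries are exactly zero.
print(bool((layer.weight.grad[0, 2:] == 0).all()))  # True
```

PyTorch's `torch.nn.utils.prune.custom_from_mask` applies a similar fixed mask to an existing module's parameter, which may be more convenient than a custom layer.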