When I use `num_workers > 0`, training randomly becomes extremely slow at the end of some iterations.

Here’s part of my code:

```python
import time

import torch

trainPhase_end_time = time.time()  # initialize so the first print has a reference point

for epoch in range(1, num_epochs):
    for i, (x_train, y_train) in enumerate(train_batch):  # y_train stands for label
        trainPhase_start_time = time.time()
        # Gap between the end of the previous step and the arrival of this batch
        # (mostly time spent waiting for the DataLoader)
        print('trainPhaseEnd-NextTrainPhaseStart:', i, ' ', trainPhase_start_time - trainPhase_end_time)

        x_train = x_train.type(torch.FloatTensor)
        y_train = y_train.type(torch.FloatTensor)
        x_train = x_train.to(device)
        y_train = y_train.to(device)

        predict_train = net(x_train)
        loss = criterion(predict_train, y_train)

        optimizer.zero_grad()
        loss.backward()  # backward
        optimizer.step()

        trainPhase_end_time = time.time()
        # Time spent on transfer + forward + backward + optimizer step for this batch
        print('trainPhaseStart-trainPhaseEnd:(single batch)', trainPhase_end_time - trainPhase_start_time)
```

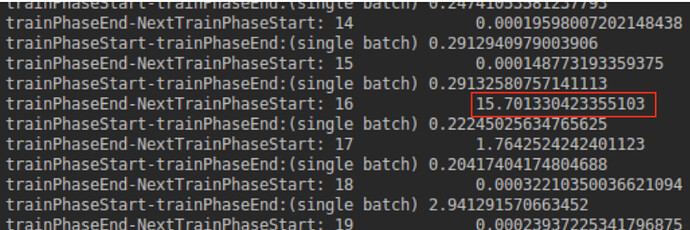

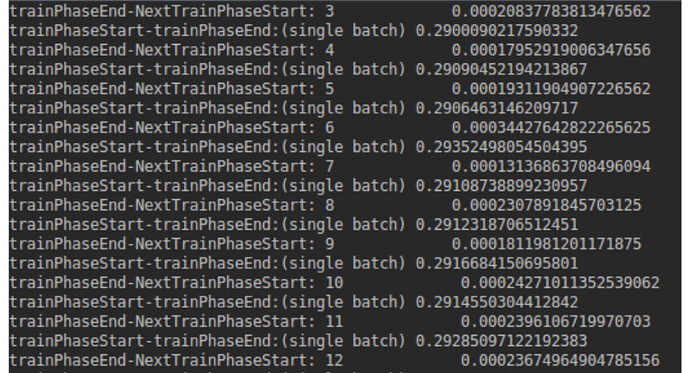
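One caveat when reading these numbers: CUDA kernels are launched asynchronously, so `time.time()` around `loss.backward()` and `optimizer.step()` can return before the GPU has finished, and the leftover work then shows up in whichever call blocks next. A minimal sketch for separating the two phases, assuming the loop body is wrapped in a function (`train_step` and `time_loop` are hypothetical names, not part of PyTorch), times the blocking `next()` on the loader and the step separately, with an optional `sync` hook such as `torch.cuda.synchronize`:

```python
import time
from typing import Callable, Iterable, List, Tuple


def time_loop(batches: Iterable, step: Callable[[object], None],
              sync: Callable[[], None] = lambda: None) -> List[Tuple[float, float]]:
    """Return one (wait_s, compute_s) pair per batch.

    wait_s:    time blocked in next(iterator), i.e. waiting for data.
    compute_s: time spent in step(batch), measured after calling sync().
    """
    timings = []
    it = iter(batches)
    while True:
        t0 = time.perf_counter()
        try:
            batch = next(it)  # blocks here if no batch is ready yet
        except StopIteration:
            break
        t1 = time.perf_counter()
        step(batch)
        sync()  # e.g. torch.cuda.synchronize, so queued GPU work is counted here
        t2 = time.perf_counter()
        timings.append((t1 - t0, t2 - t1))
    return timings
```

For example, `time_loop(train_batch, train_step, sync=torch.cuda.synchronize)` would give per-batch `(wait, compute)` pairs: large `wait` values point at data loading, large `compute` values at the model. The default `sync` is a no-op, so the helper also works on CPU or with any plain iterable.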

Every 16 iterations (I use `num_workers=16`), data loading may get stuck:

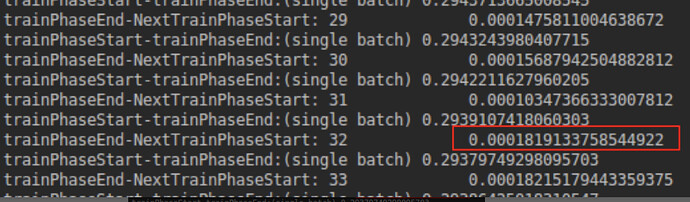
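A stall every `num_workers` iterations is consistent with a data-loading bottleneck, though the post does not confirm this. With the default sampler, `DataLoader` hands batch indices to workers in round-robin order and returns batches in order, so if one worker needs longer to build a batch than the main loop needs to consume `num_workers` batches, the loop drains every ready batch and then waits for the next round. The toy model below only illustrates that pattern under simplifying assumptions (all workers start at t=0, constant load and compute times, the prefetch limit never blocks a worker); `simulate_waits` is a hypothetical helper, not part of PyTorch:

```python
def simulate_waits(num_batches, num_workers, load_time, compute_time):
    """Return how long the training loop waits before each batch (toy model).

    Assumptions: batch i is built by worker i % num_workers, each worker needs
    load_time per batch, batches are consumed in order, and the prefetch
    limit never stops a worker from starting its next batch.
    """
    waits = []
    now = 0.0  # time at which the training loop asks for the next batch
    for i in range(num_batches):
        # Worker i % num_workers has already built i // num_workers batches.
        ready_at = (i // num_workers + 1) * load_time
        wait = max(0.0, ready_at - now)
        waits.append(wait)
        now += wait + compute_time  # wait for the batch, then train on it
    return waits
```

With 4 workers, 2 s to load a batch and 0.25 s of compute per batch, only batches 0 and 4 wait: `[i for i, w in enumerate(simulate_waits(8, 4, 2.0, 0.25)) if w > 0]` gives `[0, 4]`. Once compute per batch is at least `load_time / num_workers`, only the very first batch waits in this model.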

After roughly 100 iterations, the stalls start to occur irregularly.