@apaszke Hi! Sorry to reopen this thread. I ran into a problem when using the method above to load a GPU-trained model in CPU mode. The code fragment is:

    import torch

    encoder = torch.load('encoder.pt', map_location=lambda storage, loc: storage)
    decoder = torch.load('decoder.pt', map_location=lambda storage, loc: storage)

    encoder.cpu()
    decoder.cpu()

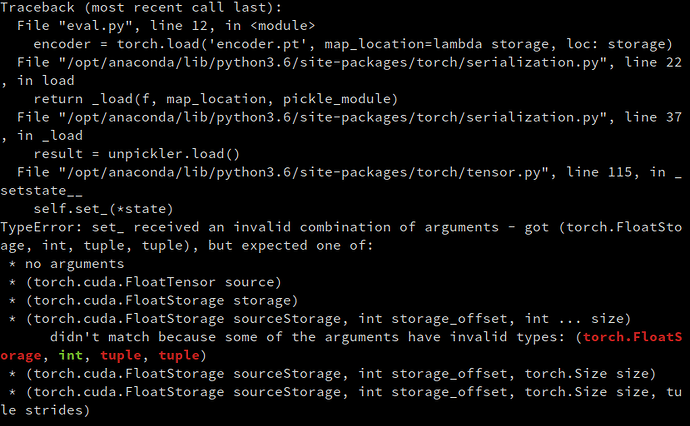

And the error I met was:

The full code can be viewed at seq2seq-translation/eval.py

How can I correctly load a GPU-trained model on a CPU-only machine (no GPUs available)? Thank you for your great work!
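
For reference, here is a minimal sketch of one workaround that might apply: saving only the `state_dict` and loading it with `map_location` set to the CPU, instead of pickling the whole module. It assumes the model class can be rebuilt on the CPU machine. `build_model`, `save_weights`, `load_weights_on_cpu`, and the file name `toy_encoder.pt` are hypothetical stand-ins, not names from eval.py, and I have not confirmed that this resolves the error shown above.

```python
import os
import tempfile

import torch
import torch.nn as nn


def build_model():
    # Hypothetical stand-in for the real encoder/decoder class.
    return nn.Linear(4, 2)


def save_weights(model, path):
    # Save only the parameters (state_dict), not the pickled module object.
    torch.save(model.state_dict(), path)


def load_weights_on_cpu(path):
    # Rebuild the architecture on CPU, then map every saved tensor to CPU.
    model = build_model()
    state = torch.load(path, map_location=torch.device("cpu"))
    model.load_state_dict(state)
    model.eval()
    return model


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "toy_encoder.pt")  # hypothetical file name
    original = build_model()
    save_weights(original, path)
    restored = load_weights_on_cpu(path)
```

On a real GPU-trained checkpoint, the saving step would run on the training machine and only `load_weights_on_cpu` would run on the CPU-only one.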