I am trying to deploy a model in the browser to make predictions client-side. After exporting the model to ONNX, running it in Python works fine.

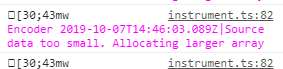

The problem is that when I run it in the browser, I always get the same prediction, along with the following warning:

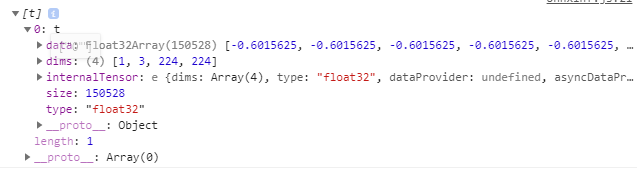

This is the input that causes the warning:

As we can see, the dims match the input shape used for the model export (1, 3, 224, 224). (It is a fine-tuned ResNet.)

Specifically, I have a bounding box that I crop using the JavaScript canvas API (create a new canvas, resize to 224×224, and call `getImageData` on the new canvas). Saving the image from the UI looks fine, so I don't understand why it doesn't work as expected: the prediction gives exactly the same values regardless of the input photo (this does not happen in Python).
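For reference, the step between `getImageData` and a (1, 3, 224, 224) input can be sketched as below. This is only a minimal illustration, not the exact code from my app: the function name `rgbaToNchw` is made up, and the default mean/std values assume the model was trained with the usual ImageNet normalization, which may not match the actual training pipeline. The point it shows is that canvas pixels arrive as interleaved RGBA bytes in the 0–255 range, while the exported model expects planar float channels.

```javascript
// Convert RGBA pixel data (as returned by getImageData) into a
// Float32Array laid out as NCHW with shape [1, 3, height, width].
// Assumption: the model expects values scaled to [0, 1] and then
// normalized per channel; the defaults are the common ImageNet
// statistics and should be changed to match the training code.
function rgbaToNchw(
  rgba,
  width,
  height,
  mean = [0.485, 0.456, 0.406],
  std = [0.229, 0.224, 0.225]
) {
  const planeSize = width * height;
  const out = new Float32Array(3 * planeSize);
  for (let i = 0; i < planeSize; i++) {
    for (let c = 0; c < 3; c++) {
      // Source is interleaved RGBA (4 bytes per pixel); alpha is dropped.
      const value = rgba[i * 4 + c] / 255;
      // Destination is planar: all R values, then all G, then all B.
      out[c * planeSize + i] = (value - mean[c]) / std[c];
    }
  }
  return out;
}
```

In the browser this would be called as `rgbaToNchw(ctx.getImageData(0, 0, 224, 224).data, 224, 224)`, where `ctx` is the 2D context of the resized canvas, and the result would then be wrapped in the runtime's tensor type with dims `[1, 3, 224, 224]`.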

Thank you for your attention. I am sure many of you will also face requests to deploy models on mobile or in the browser.