I am training an LSTM model and am currently performing a random search.

Initially, after each random search I was emptying the cache (`torch.cuda.empty_cache()`); however, I was getting an OOM error after some number of random searches (usually around 3).

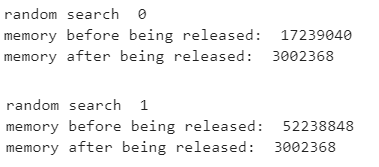

Then I read that in order for the memory to be freed I need to `del` the variable first. However, even after doing that I continued to have the same issue. I am tracking the allocated GPU memory (`torch.cuda.memory_allocated()`) and I can see that the memory is freed after each random search. However, when a new random search starts, the allocated memory is a bit higher than it was for the previous random search. I don't think this is caused by a variable that is not being deleted. Is there something that I am missing?
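
The cleanup and measurement described above can be sketched roughly as follows. This is an illustrative sketch, not the actual training code: `run_trial`, `random_search`, `allocated_bytes`, the tiny LSTM, and the hyperparameter choices are all hypothetical stand-ins. It assumes each trial builds its model inside a function so that no reference outlives the trial, and it falls back to the CPU (reporting 0 bytes) when CUDA is not available.

```python
import gc
import random

import torch
import torch.nn as nn


def allocated_bytes() -> int:
    """Bytes held by live tensors on the GPU (0 when CUDA is unavailable)."""
    return torch.cuda.memory_allocated() if torch.cuda.is_available() else 0


def run_trial(hidden_size: int, device: torch.device) -> float:
    """Hypothetical trial: one optimizer step of a tiny LSTM on random data."""
    model = nn.LSTM(input_size=8, hidden_size=hidden_size, batch_first=True).to(device)
    optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
    x = torch.randn(4, 10, 8, device=device)  # (batch, sequence, features)
    output, _ = model(x)
    loss = output.pow(2).mean()
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    # Return a Python float rather than the tensor: a stored loss tensor
    # would stay on the GPU for as long as the results list holds it.
    return loss.item()


def random_search(num_trials: int = 3, seed: int = 0) -> list:
    rng = random.Random(seed)
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    log = []
    for trial in range(num_trials):
        before = allocated_bytes()
        hidden_size = rng.choice([16, 32, 64])
        loss = run_trial(hidden_size, device)
        # The model and optimizer were locals of run_trial, so no explicit
        # `del` is needed here; collect any reference cycles that remain.
        gc.collect()
        if torch.cuda.is_available():
            # Returns cached, unused blocks to the driver. This lowers
            # memory_reserved(), not memory_allocated().
            torch.cuda.empty_cache()
        log.append({
            "trial": trial,
            "hidden_size": hidden_size,
            "loss": loss,
            "before": before,
            "after": allocated_bytes(),
        })
    return log


if __name__ == "__main__":
    for entry in random_search():
        print(entry)
```

If the `before` value keeps growing from one trial to the next in a log like this, something created during a trial is still referenced when the next one starts. Comparing `torch.cuda.memory_allocated()` with `torch.cuda.memory_reserved()` may also help distinguish live tensors from memory that is merely cached by the allocator.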