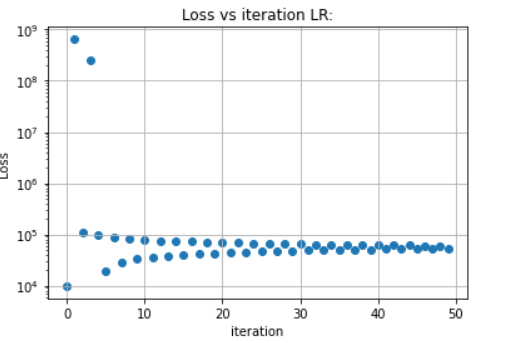

Hi! I wrote a network and I want to check it. I train on one batch to see whether it overfits, and I plot the loss during training. Is this the right approach?

    import time
    import numpy as np
    import torch

    model.to(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.00001)
    Loss = np.array([])
    start = time.time()  # start the timer before the first epoch

    for epoc in range(50):
        print("***********", epoc)
        for iteration, (x, r) in enumerate(Train_Loader):
            optimizer.zero_grad()
            y = Block(x, PHI).to(device)
            output, out = model(r.to(device), y, PHI)
            loss = my_loss(output.to(device), y, 1, out.to(device))
            # store a plain float, not the tensor with its autograd graph
            Loss = np.append(Loss, loss.item())
            loss.backward()
            optimizer.step()

        # time taken by this epoch
        end = time.time()
        period = end - start
        print('period', period)
        start = time.time()

The final loss is around 100000, which I think is wrong.
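For comparison, here is a minimal sketch of the single-batch overfit check as I understand it. The model, data, loss and learning rate below are hypothetical stand-ins, not my real network, `Block`, `PHI` or `my_loss`. The idea is to fetch one batch from the loader once and train on that same batch over and over; if the network and loss are wired correctly, the loss should drop towards zero.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical stand-ins for the real dataset and network
dataset = TensorDataset(torch.randn(64, 8), torch.randn(64, 1))
loader = DataLoader(dataset, batch_size=16, shuffle=True)
model = nn.Sequential(nn.Linear(8, 32), nn.ReLU(), nn.Linear(32, 1))

criterion = nn.MSELoss()  # stand-in loss
optimizer = torch.optim.SGD(model.parameters(), lr=0.05)  # assumed learning rate

# Take ONE batch and reuse it for every step
x, target = next(iter(loader))

losses = []
for step in range(500):
    optimizer.zero_grad()
    loss = criterion(model(x), target)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())  # plain float, safe to plot later

print(f"first loss {losses[0]:.4f}, last loss {losses[-1]:.4f}")
```

Unlike my loop above, this does not iterate over the whole loader, so the plotted curve reflects only that one batch.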