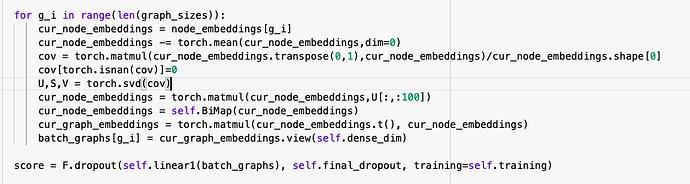

I was trying to do PCA with PyTorch to reduce dimensionality.

I am getting the following error:

`RuntimeError: svd_cuda: the updating process of SBDSDC did not converge (error: 17)`

This happens even if I move my tensor to the CPU with `.cpu()`.

Also, when I remove NaNs from the input to `torch.svd()`, the error goes away, but the loss (`CrossEntropyLoss`) becomes NaN.
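Rather than zeroing NaNs out right before the SVD, it can be more informative to fail loudly so you can find where they first appear. A minimal sketch, assuming the input is a floating-point tensor; `assert_finite` is a hypothetical helper, not a PyTorch API. It uses `torch.linalg.svd`, which the PyTorch docs recommend over the deprecated `torch.svd` (note that it returns `Vh` rather than `V`).

```python
import torch


def assert_finite(name: str, t: torch.Tensor) -> torch.Tensor:
    """Raise if `t` contains NaN or +/-Inf; otherwise return it unchanged."""
    if not torch.isfinite(t).all():
        n_nan = int(torch.isnan(t).sum())
        n_inf = int(torch.isinf(t).sum())
        raise ValueError(
            f"{name}: {n_nan} NaN and {n_inf} Inf values (shape {tuple(t.shape)})"
        )
    return t


# Usage sketch: check right before the SVD call instead of zeroing NaNs.
x = torch.randn(30, 250)
x = assert_finite("svd input", x)
U, S, Vh = torch.linalg.svd(x, full_matrices=False)
```

Calling `assert_finite` at a few points in the forward pass (GNN output, SVD input, SVD output) narrows down which step introduces the first non-finite value.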

I would recommend fixing the NaN loss first.

Do your model outputs or targets contain any NaNs, and are you expecting them during training?

No, neither my model outputs nor my targets contain any NaNs, and I am not expecting them during training. I also tried gradient clipping, but that made no difference.
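Since clipping did not help, one way to locate the source is to list which parameters receive non-finite gradients after `backward()`, and to enable `torch.autograd.set_detect_anomaly(True)`, which makes autograd raise an error (with a traceback of the corresponding forward op) when a backward computation produces NaN. A minimal sketch on a toy model; `nonfinite_grads` is a hypothetical helper, and the `nn.Linear` model stands in for the real network.

```python
import torch
import torch.nn as nn


def nonfinite_grads(model: nn.Module) -> list[str]:
    """Names of parameters whose .grad contains NaN or Inf (skips params without grads)."""
    return [
        name
        for name, p in model.named_parameters()
        if p.grad is not None and not torch.isfinite(p.grad).all()
    ]


model = nn.Linear(250, 10)          # toy stand-in for the real model
x = torch.randn(30, 250)
target = torch.randint(0, 10, (30,))

# Anomaly mode slows training down; enable it only while debugging.
with torch.autograd.set_detect_anomaly(True):
    loss = nn.functional.cross_entropy(model(x), target)
    loss.backward()

print(nonfinite_grads(model))  # [] here, since this toy input is finite
```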

Where do the NaNs in the input to `svd` come from, if they are in neither the output nor the target?

How is `node_embeddings` calculated?

`node_embeddings` is the output of a graph neural network, with shape (n x f), where n is the number of nodes in a particular graph and f is the number of features. n is around 30 and f is around 250, and I want to reduce f to 100.
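One thing worth checking with these shapes, if the PCA is computed per graph: the ~30 nodes act as samples, and a centered 30 x 250 matrix has rank at most 29, so it has at most 29 meaningful principal directions. Any components beyond that are not determined by the data, and the `torch.linalg.svd` documentation notes that gradients through `U`/`Vh` are only finite when singular values are distinct, becoming unstable as they approach each other or zero. This is a plausible, unconfirmed source of the NaNs. A minimal sketch of PCA via SVD; `pca_reduce` is a hypothetical helper that refuses to return undetermined components.

```python
import torch


def pca_reduce(x: torch.Tensor, k: int) -> torch.Tensor:
    """Project the rows of x (n x f) onto the top-k principal directions -> (n x k)."""
    n, f = x.shape
    # After centering, rank(x) <= min(n - 1, f); directions beyond that are
    # not determined by the data, so refuse to return them.
    max_k = min(n - 1, f)
    if k > max_k:
        raise ValueError(f"k={k} exceeds the maximum meaningful rank {max_k} for shape {(n, f)}")
    xc = x - x.mean(dim=0, keepdim=True)                  # center each feature
    _, _, vh = torch.linalg.svd(xc, full_matrices=False)  # vh: (min(n, f), f)
    return xc @ vh[:k].T                                  # (n, k)


x = torch.randn(30, 250)          # ~30 nodes, ~250 features, as in the post
print(pca_reduce(x, 20).shape)    # torch.Size([30, 20])
```

With these shapes, `pca_reduce(x, 100)` raises instead of silently producing 100 columns.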

The experiment runs successfully if I don't use SVD. That is, if I pass all features directly through the subsequent fully connected layers, no errors occur and no NaNs are encountered. The issue only appears when I reduce f using PCA and then continue processing.

I might have misunderstood the issue, but in your first post you said that you tried to "remove the NaNs in the input to torch.svd()".

Based on your description now, it seems that you are suddenly getting NaNs in the model output when you apply SVD?

I only "suspected" there might be NaNs, so I zeroed them out just to be safe.

But then I found that I am suddenly getting NaNs in the model output when I apply SVD.
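To test whether the SVD backward pass is the culprit, one option (a swapped-in workaround, not a fix confirmed in this thread) is to compute the PCA basis under `torch.no_grad()`. Gradients still flow into the embeddings through the centering and the matrix product, but never through the SVD's own backward. A minimal sketch; `pca_project_detached` is a hypothetical helper.

```python
import torch


def pca_project_detached(x: torch.Tensor, k: int) -> torch.Tensor:
    """PCA projection where the basis is treated as a constant by autograd."""
    xc = x - x.mean(dim=0, keepdim=True)
    with torch.no_grad():
        # No autograd graph is recorded here, so backward never differentiates the SVD.
        _, _, vh = torch.linalg.svd(xc, full_matrices=False)
    return xc @ vh[:k].T  # gradient reaches x only through xc


x = torch.randn(30, 250, requires_grad=True)
y = pca_project_detached(x, 20)
y.sum().backward()
print(torch.isfinite(x.grad).all())  # tensor(True)
```

The trade-off is that the gradient ignores how the principal directions themselves change with the embeddings. If the NaNs disappear with this version, that points at the SVD gradient rather than the GNN.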