I'm training a simple bidirectional LSTM model that takes a sequence of words as input and predicts a target variable for every word. The target variable is a float in [0, 1], the criterion is MSE loss, and the optimizer is Adam. The key code snippets are below (I omit the model definition, since it is a standard `nn.Module` and less relevant).

```python
import torch
import torch.nn as nn

optimizer = torch.optim.Adam(model.parameters(), lr=1e-3, weight_decay=0)

def compute_loss(output, target):
    # Flatten so the per-word predictions and targets line up as 1-D tensors.
    output = output.flatten()
    target = target.flatten()
    loss = nn.MSELoss()(output, target)
    return loss

for epoch in range(10):
    for (input, target, n_samples) in data_loader.yield_batch(batch_size=128):
        model.zero_grad()
        # Use zero initial hidden states in the model forward pass.
        output, hidden = model(input, hidden=None)
        loss = compute_loss(output, target)
        loss.backward()
        optimizer.step()
        print("training loss: {}".format(loss.item()))
```

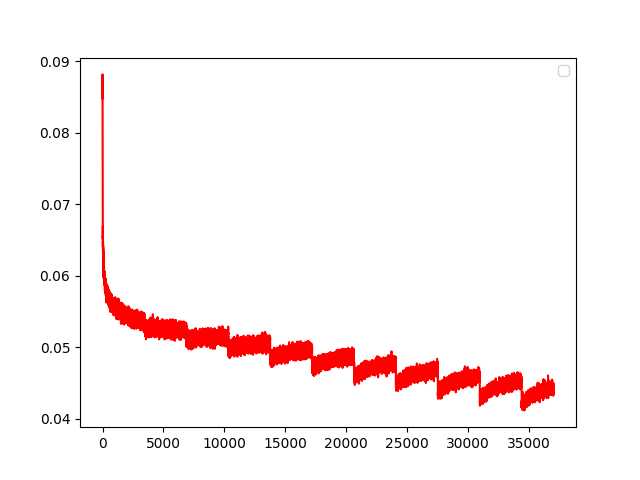

The training loss is visualized as following:

There is clear periodic behavior. I checked and found that the huge oscillation appears at the end of each epoch. Am I calling the Adam optimizer in the wrong way? Or does the PyTorch implementation of Adam do some automatic tuning of the learning rate, etc.?
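To pin down exactly where in an epoch the spikes fall, a small helper along the following lines could be applied to the logged per-step losses. This is only a sketch: `spike_positions`, `steps_per_epoch`, and `factor` are hypothetical names that are not part of the training code above, and the loss values here are synthetic, not the ones from the plot.

```python
from statistics import median

def spike_positions(losses, steps_per_epoch, factor=2.0):
    """Return (epoch, step_in_epoch) for every loss above factor * median loss.

    `losses` is a flat list of per-step training losses, in the order logged.
    `steps_per_epoch` and `factor` are illustrative parameters (assumptions).
    """
    threshold = factor * median(losses)
    return [
        divmod(i, steps_per_epoch)  # (epoch index, step index within epoch)
        for i, loss in enumerate(losses)
        if loss > threshold
    ]

# Synthetic data: 3 epochs of 5 steps, with a jump on the last step of each epoch.
losses = [
    0.10, 0.09, 0.08, 0.08, 0.30,
    0.08, 0.07, 0.07, 0.06, 0.28,
    0.06, 0.06, 0.05, 0.05, 0.25,
]
print(spike_positions(losses, steps_per_epoch=5))
```

If the reported steps always turn out to be the last one in each epoch, it may be worth comparing `n_samples` for that batch against the nominal batch size of 128, since a smaller final batch gives a noisier loss estimate and would be one candidate explanation.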