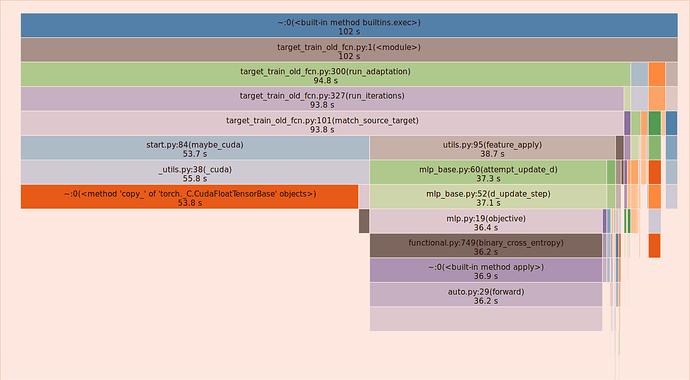

I experience same thing with a single GPU setup. Passing pin_memory=True to DataLoader does not seem to improve performance in any way. From cProfile it seems like torch._C.CudaFloatTensorBase._copy() consumes one third of all batch processing time, which is a lot! Both with and without pinned_memory(). Thank you!

So majority of time was spent moving variables to GPU and doing forward pass (this is GPU), backward pass took surprisingly little time.

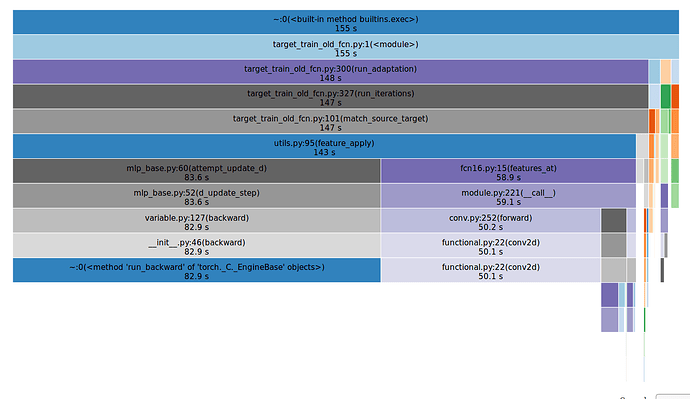

UPDATE: It turns out that if one passes CUDA_LAUNCH_BLOCKING=1 when running a script, profiling results are much more meaningful. Here, for example, majority of time is spent in backwards_run and forward, which makes sense.