Hello there,

I have a simple multiclass deep learning model trained with CrossEntropyLoss. With a total of 229 classes and 8k samples, I split the data into 70% training, 20% validation and 10% test. During training and validation I calculate precision and recall on the fly to compute Dice and other metrics…
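For reference, the Dice coefficient of a single class equals its F1 score, 2PR/(P+R). This is a minimal sketch that assumes scikit-learn and uses made-up toy labels (not from my dataset). It shows that applying the formula per class matches `f1_score`. Applying it to already weighted precision and recall does not, in general, reproduce the weighted F1. The helper `dice_from_pr` is hypothetical, written just for illustration.

```python
import numpy as np
import sklearn.metrics as skl


def dice_from_pr(precision, recall):
    """Hypothetical helper: Dice / F1 as the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy labels for illustration only
y_true = np.array([0, 0, 0, 1])
y_pred = np.array([0, 1, 1, 1])

# Per-class values (average=None returns one score per class)
p_cls = skl.precision_score(y_true, y_pred, average=None, zero_division=0)
r_cls = skl.recall_score(y_true, y_pred, average=None, zero_division=0)
dice_cls = [dice_from_pr(p, r) for p, r in zip(p_cls, r_cls)]
f1_cls = skl.f1_score(y_true, y_pred, average=None, zero_division=0)

# Support-weighted averages
p_w = skl.precision_score(y_true, y_pred, average='weighted', zero_division=0)
r_w = skl.recall_score(y_true, y_pred, average='weighted', zero_division=0)
f1_w = skl.f1_score(y_true, y_pred, average='weighted', zero_division=0)

print("per-class Dice:", np.round(dice_cls, 4))
print("per-class F1:  ", np.round(f1_cls, 4))
print("Dice from weighted P/R:", round(dice_from_pr(p_w, r_w), 4))
print("weighted F1:", round(f1_w, 4))
```

In this toy case the weighted precision is 5/6 and the weighted recall is 0.5. Their harmonic mean gives 0.625, while the weighted F1 is 0.5. So it matters whether Dice is computed before or after averaging.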

The precision looks weird, although the losses look good. Why is the precision decreasing after several epochs when the network is not overfitting yet, as measured by the validation loss? I calculate precision and recall as follows:

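```python
import sklearn.metrics as skl

# target: ground-truth class indices; data: predicted class indices
# (e.g. output.argmax(dim=1) if the model returns raw logits)
precision = skl.precision_score(target, data, average='weighted', zero_division=1)

recall = skl.recall_score(target, data, average='weighted', zero_division=1)
```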
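One detail worth checking with `zero_division=1` is how scikit-learn treats classes the model never predicts. It assigns those classes a precision of 1, and with `average='weighted'` they still count with their full support. This is a minimal sketch using made-up toy labels (not my actual data); the helper `weighted_precision` is hypothetical. It shows that weighted precision can drop while the predictions actually get better.

```python
import numpy as np
import sklearn.metrics as skl

# Toy ground truth for illustration only
y_true = np.array([0, 0, 1, 1, 2, 2])

# Hypothetical "early" predictions: the model collapses onto class 0
y_pred_early = np.array([0, 0, 0, 0, 0, 0])
# Hypothetical "later" predictions: every class is predicted, more are correct
y_pred_later = np.array([0, 0, 1, 2, 1, 2])


def weighted_precision(y_pred, zero_division):
    """Hypothetical helper: support-weighted precision against y_true."""
    return skl.precision_score(y_true, y_pred, average='weighted',
                               zero_division=zero_division)


for name, y_pred in [("early", y_pred_early), ("later", y_pred_later)]:
    acc = skl.accuracy_score(y_true, y_pred)
    p1 = weighted_precision(y_pred, zero_division=1)
    p0 = weighted_precision(y_pred, zero_division=0)
    print(f"{name}: accuracy={acc:.3f}  "
          f"precision(zero_division=1)={p1:.3f}  "
          f"precision(zero_division=0)={p0:.3f}")
```

In this toy case, weighted precision with `zero_division=1` falls from 7/9 (about 0.78) to 2/3 (about 0.67), even though accuracy doubles from 1/3 to 2/3. With `zero_division=0` it rises from 1/9 to 2/3 instead. Passing `average=None` returns the per-class values, which makes this kind of effect easier to spot with many classes.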

Here's the full code if anyone wants it: Google Colab

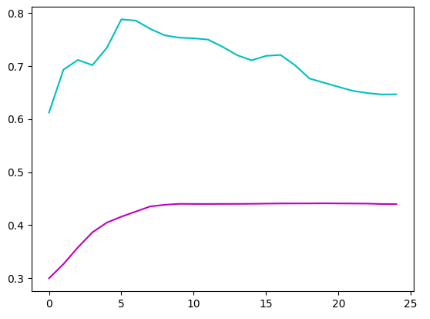

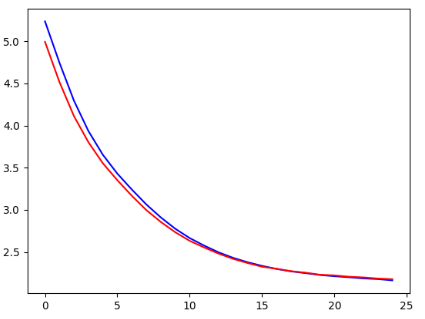

Below are my training loss (blue), validation loss (red), training precision (cyan) and training recall (purple) over 25 epochs: