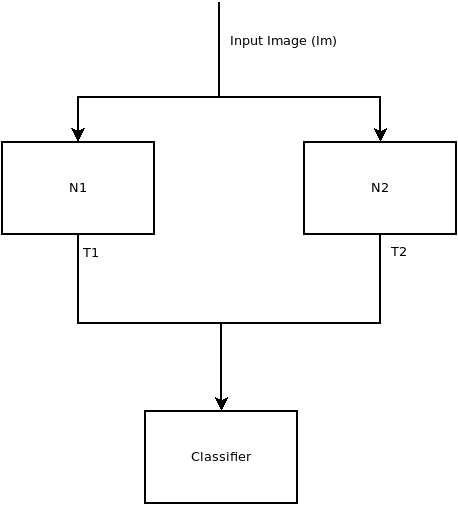

I have a question about how to implement the test phase of the system shown in the image.

I have trained both N1 and N2 to transform an input image (Im) with respect to Im’s class. If Im belongs to class 1 (C1), I update N1; if it belongs to class 2 (C2), I update N2. At the same time, I update the classifier to recognize the transformation that is inherent to each class.
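A minimal PyTorch sketch of the label-routed training step described above, assuming labels are encoded as 0 (C1) and 1 (C2). The tiny `make_transformer` networks, the 3x8x8 input size and the SGD settings are hypothetical placeholders, not the architecture from the diagram.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Hypothetical stand-ins for N1, N2 and the classifier (real architectures unknown).
def make_transformer():
    return nn.Sequential(nn.Conv2d(3, 3, kernel_size=3, padding=1), nn.Tanh())

n1, n2 = make_transformer(), make_transformer()
classifier = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 2))  # assumes 3x8x8 inputs
params = list(n1.parameters()) + list(n2.parameters()) + list(classifier.parameters())
optimizer = torch.optim.SGD(params, lr=0.01)

def train_step(images, labels):
    """One label-routed step: C1 samples go through N1, C2 samples through N2."""
    optimizer.zero_grad()
    transformed = torch.empty_like(images)
    mask1 = labels == 0  # C1 -> N1
    mask2 = labels == 1  # C2 -> N2
    if mask1.any():
        transformed[mask1] = n1(images[mask1])
    if mask2.any():
        transformed[mask2] = n2(images[mask2])
    # The classifier only ever sees N1 outputs labelled C1 and N2 outputs labelled C2.
    loss = F.cross_entropy(classifier(transformed), labels)
    loss.backward()
    optimizer.step()
    return loss.item()
```

Note that a batch containing only C1 samples never calls N2, so N2 receives no gradient from it; the routing itself depends on the label, which is what makes the test phase different.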

Now that the system is trained, I want to predict new incoming samples. Since I do not have a label that tells me which network (N1 or N2) should transform Im, I need to feed it to both, so I will have two transformed images, T1 and T2. My classifier therefore produces two predictions at test time, but I need just one.

In theory, if the input image belongs to class 2, the classifier should classify T1 with low confidence and T2 with higher confidence, and vice versa. My problem is that I don’t know how to implement this idea; I have tried many things.

The output of my classifier is a vector of two values for each image. Therefore I have a pair of vectors, (C1_t1, C2_t1) and (C1_t2, C2_t2), giving the probabilities that each transformed image T1, T2 belongs to class C1 or C2. I need a way to get from those values to the final class.
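One possible decision rule, sketched under the assumption that during training the classifier only ever saw N1 outputs labelled C1 and N2 outputs labelled C2: score each class by how confidently its own network's output is recognized as that class, i.e. compare C1_t1 with C2_t2 and pick the larger. The function names are hypothetical, and this is a heuristic rather than a guaranteed fix.

```python
import torch

def predict_from_both_paths(probs_t1, probs_t2):
    """Heuristic rule: pick the class whose own path gives the most confident vote.

    probs_t1, probs_t2: (batch, 2) softmax outputs (C1_t, C2_t) for
    T1 = N1(Im) and T2 = N2(Im). Returns 0 for C1, 1 for C2 (ties go to C1).
    """
    score_c1 = probs_t1[:, 0]  # C1_t1: how C1-like the N1 output looks
    score_c2 = probs_t2[:, 1]  # C2_t2: how C2-like the N2 output looks
    return (score_c2 > score_c1).long()

@torch.no_grad()
def predict(images, n1, n2, classifier):
    """Label-free inference: every image goes through both N1 and N2."""
    probs_t1 = classifier(n1(images)).softmax(dim=1)
    probs_t2 = classifier(n2(images)).softmax(dim=1)
    return predict_from_both_paths(probs_t1, probs_t2)
```

Other combinations of the four values are possible (for example also rewarding agreement between the two paths); which one works best would have to be checked empirically.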

That’s a tricky question, as your training and testing routines are different: during training, your model architecture changed based on the target.

How did you implement the validation step, or did you just train the model?

One approach would be to use a small hold-out set to train another classifier on top of your current model, which takes the 4 predictions as input and outputs only the two classes.
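The hold-out idea could look roughly like this: freeze the trained N1, N2 and classifier, collect the four probabilities (C1_t1, C2_t1, C1_t2, C2_t2) for each hold-out image, and fit a small logistic-regression head (a single `nn.Linear(4, 2)`) on them. The helper names and hyperparameters are illustrative assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

def four_features(images, n1, n2, classifier):
    """Frozen base model: returns (C1_t1, C2_t1, C1_t2, C2_t2) per sample."""
    with torch.no_grad():
        p1 = classifier(n1(images)).softmax(dim=1)
        p2 = classifier(n2(images)).softmax(dim=1)
    return torch.cat([p1, p2], dim=1)  # shape (batch, 4)

def fit_meta_classifier(features, labels, epochs=300, lr=0.5):
    """Fit a logistic-regression head on hold-out features.

    Labels are only used as training targets here, never to choose N1 or N2.
    """
    meta = nn.Linear(4, 2)
    opt = torch.optim.SGD(meta.parameters(), lr=lr)
    for _ in range(epochs):
        opt.zero_grad()
        loss = F.cross_entropy(meta(features), labels)
        loss.backward()
        opt.step()
    return meta
```

At test time the prediction would then be `meta(four_features(images, n1, n2, classifier)).argmax(dim=1)`, which needs no label.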

I did not implement a validation step because I ran into the same question. I couldn’t use the GT label during validation: if you do that, you know which network (N1 or N2) the input should be fed through, and that’s not how the model is supposed to be evaluated. Therefore, I have just trained the model.
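A validation loop does not have to use the GT labels for routing; they can be used purely for scoring, as long as the prediction itself goes through a label-free rule (for example, feeding every image through both N1 and N2). A minimal sketch, where `predict_fn` is a hypothetical placeholder for whatever test-time rule is chosen:

```python
import torch

@torch.no_grad()
def validate(batches, predict_fn):
    """Accuracy where GT labels are used only for scoring, never for routing.

    batches: iterable of (images, labels) pairs, e.g. a DataLoader.
    predict_fn: label-free function mapping images -> class indices.
    """
    correct, total = 0, 0
    for images, labels in batches:
        preds = predict_fn(images)  # the labels are not passed in here
        correct += (preds == labels).sum().item()
        total += labels.numel()
    return correct / max(total, 1)
```

Because `predict_fn` never sees the labels, this score reflects the same setup as the test phase.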

> One approach would be to use a small hold-out set to train another classifier on top of your current model to use the 4 predictions and output only the two classes.

What do you mean by that? If I trained a classifier on top of both N1 and N2 to predict the label of the input, would I still be leveraging the fact that N1 and N2 transform the input differently depending on its class? I want to output only one class. If the input belongs to C1, the classifier is used to seeing T1-like images and not T2-like ones, since during training a C1 input was never fed into N2. But it actually seems that my classifier did not learn class features of the image, only the transformation. I don’t know if my reasoning is correct.
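One way to test the suspicion that the classifier learned the transformation rather than class features is to route labelled samples through the network they were never paired with during training and see which label the classifier follows. This is a suggested diagnostic under stated assumptions, not something from the thread: the function name is hypothetical, and labels are assumed to be 0 for C1 and 1 for C2.

```python
import torch

@torch.no_grad()
def path_vs_class_agreement(images, labels, n1, n2, classifier):
    """Route each image through the *opposite* network (C1 -> N2, C2 -> N1).

    Returns (follows_path, follows_class): the fraction of predictions that
    match the network used vs. the fraction that match the true class.
    """
    wrong_path = 1 - labels  # index of the network NOT used for this class in training
    use_n1 = (wrong_path == 0).view(-1, *([1] * (images.dim() - 1)))  # broadcastable mask
    outputs = torch.where(use_n1, n1(images), n2(images))
    preds = classifier(outputs).argmax(dim=1)
    follows_path = (preds == wrong_path).float().mean().item()
    follows_class = (preds == labels).float().mean().item()
    return follows_path, follows_class
```

In the two-class case the two fractions sum to 1; a `follows_path` close to 1 would support the concern that the classifier mostly recognizes N1-style versus N2-style outputs.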

That’s what I’m concerned about.

You are using the targets to switch between different transformations as well as different model paths, which would not be possible in your validation and test use cases.