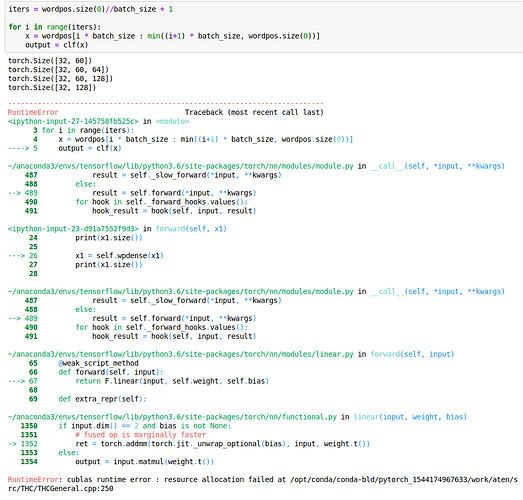

I’m learning how to use LSTMs/GRUs in PyTorch, and I always get the same error: `RuntimeError: cublas runtime error : resource allocation failed…`

I’m not sure what’s wrong with my code, as I’ve tried to stick to what I learned from the PyTorch documentation.

The error seems to occur at the `nn.Linear` layer, after the input has passed through the embedding layer and the GRU layer and x1 has been reduced to its last time step (`x1[:, -1, :]`).

Can anyone help me out with this?

```python
# Preprocess data
# ...
# ...

import torch
import torch.nn as nn
import torch.optim as optim

device = 'cuda:0' if torch.cuda.is_available() else 'cpu'

epochs = 10
batch_size = 32
num_emb = 64
num_layers = 1
num_hidden = 128


class Classifier(nn.Module):
    def __init__(self):
        super(Classifier, self).__init__()
        # vocab_size is not defined in this snippet; it has to come from
        # the preprocessing code elided above.
        self.wpemb = nn.Embedding(vocab_size, num_emb)
        self.wpgru = nn.GRU(num_emb, num_hidden, num_layers, batch_first=True)
        self.wpdense = nn.Linear(num_hidden, vocab_size)

    def forward(self, x1):
        print(x1.size())              # (batch, seq_len) token indices
        x1 = self.wpemb(x1)
        print(x1.size())              # (batch, seq_len, num_emb)
        x1, hidden1 = self.wpgru(x1)
        print(x1.size())              # (batch, seq_len, num_hidden)
        x1 = x1[:, -1, :]             # keep only the last time step
        print(x1.size())              # (batch, num_hidden)
        x1 = self.wpdense(x1)
        print(x1.size())              # (batch, vocab_size)
        return x1


clf = Classifier()
clf.to(device)
```
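To narrow this down, here is a minimal sketch of how the same forward pass could be checked on the CPU with dummy data. CUDA kernels run asynchronously, so the line where a GPU error is reported is not always the line that caused it, and the CPU usually gives a more readable message. The values `vocab_size = 1000` and `seq_len = 20` are hypothetical placeholders (the real ones come from the preprocessing that isn't shown), and `check_indices` is a helper introduced only for this illustration. It tests one possible cause, token indices outside the embedding table. That is an assumption, not a confirmed diagnosis.

```python
import torch
import torch.nn as nn

# Hypothetical values for illustration only; the real vocab_size and
# sequence length come from the preprocessing step not shown in the post.
vocab_size = 1000
seq_len = 20
batch_size = 32
num_emb = 64
num_layers = 1
num_hidden = 128


class Classifier(nn.Module):
    def __init__(self):
        super().__init__()
        self.wpemb = nn.Embedding(vocab_size, num_emb)
        self.wpgru = nn.GRU(num_emb, num_hidden, num_layers, batch_first=True)
        self.wpdense = nn.Linear(num_hidden, vocab_size)

    def forward(self, x1):
        x1 = self.wpemb(x1)        # (batch, seq_len, num_emb)
        x1, _ = self.wpgru(x1)     # (batch, seq_len, num_hidden)
        x1 = x1[:, -1, :]          # (batch, num_hidden), last time step
        return self.wpdense(x1)    # (batch, vocab_size)


def check_indices(batch, vocab_size):
    """Return True if every token index is a valid row of the embedding table."""
    return bool(batch.min() >= 0 and batch.max() < vocab_size)


clf = Classifier()  # deliberately left on the CPU for debugging

# Dummy batch of token ids (int64), standing in for a real preprocessed batch.
dummy = torch.randint(0, vocab_size, (batch_size, seq_len))

indices_ok = check_indices(dummy, vocab_size)
out = clf(dummy)
print(indices_ok, tuple(out.shape))
```

If the dummy batch goes through on the CPU, the same `check_indices` call can be run on a real batch before it is moved to the GPU. If it returns `False`, the embedding lookup is being asked for a row that doesn't exist.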