Hi

I start 2 processes on 2 GPUs with the following code.

The command is:

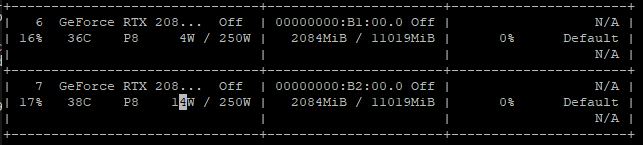

CUDA_VISIBLE_DEVICES=4,5 WORLD_SIZE=2 python -m torch.distributed.launch --nproc_per_node=2 --master_port 44744 train.py

After running the above command, the 2 processes do not exit.

If I comment out the for loop that enumerates loader_eval in the code below, both processes exit successfully.

If I leave that for loop in, the processes never exit.

Is there any way to check where a process is hanging?

Is there anything wrong with my code?

How can I make the processes exit once the code has finished?
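On the question of finding where a process is hanging: one option is Python's standard `faulthandler` module, which can write the Python stack of every thread on a signal or after a timeout. The following is a minimal sketch, not a definitive recipe. The helper names `install_hang_diagnostics` and `dump_stacks_now` are made up for illustration, and it assumes a Unix-like system where `SIGUSR1` exists. It only shows Python-level frames, so a hang inside native code (for example inside NCCL) would appear as the last Python call that entered it.

```python
import faulthandler
import signal
import sys


def install_hang_diagnostics(timeout_s=None, stream=sys.stderr):
    """Arrange for the Python stacks of all threads to be written to `stream`.

    `stream` must be a real file object (it needs a file descriptor).
    """
    # On Unix, `kill -USR1 <pid>` will then dump every thread's stack.
    if hasattr(signal, "SIGUSR1"):
        faulthandler.register(signal.SIGUSR1, file=stream, all_threads=True)
    # Optionally dump the stacks every `timeout_s` seconds without exiting.
    if timeout_s is not None:
        faulthandler.dump_traceback_later(
            timeout_s, repeat=True, file=stream, exit=False
        )


def dump_stacks_now(path):
    """Write the current stacks of all threads to the file at `path`."""
    with open(path, "w") as f:
        faulthandler.dump_traceback(file=f, all_threads=True)
```

Calling `install_hang_diagnostics(timeout_s=60)` near the top of train.py would print the stacks once a minute, so the last dump before the hang shows which line each rank is sitting on.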

    if args.distributed:
        torch.cuda.set_device(args.local_rank)
        torch.distributed.init_process_group(backend='nccl', init_method='env://')
        args.world_size = torch.distributed.get_world_size()
        args.rank = torch.distributed.get_rank()
        logger_trainer_pim.info('----------> world_size == {}, rank=={}, local_rank == {}, gpu index =={}'.format(args.world_size, args.rank, args.local_rank, args.device.index))
        logger_trainer_pim.info('Training in distributed mode with multiple processes, 1 GPU per process. Process %d, total %d.' % (args.rank, args.world_size))
    else:
        logger_trainer_pim.info('Training with a single process on 1 GPU.')

    assert args.rank >= 0

    dataset_eval = create_dataset(
        args.dataset, root=args.data_validate_dir, split=args.val_split, is_training=False, batch_size=args.batch_size)

    loader_eval = create_loader(
        dataset_eval,
        input_size=data_config['input_size'],
        batch_size=args.validation_batch_size_multiplier * args.batch_size,
        is_training=False,
        use_prefetcher=args.prefetcher,
        interpolation=data_config['interpolation'],
        mean=data_config['mean'],
        std=data_config['std'],
        num_workers=args.num_workers,
        distributed=args.distributed,
        crop_pct=data_config['crop_pct'],
        pin_memory=args.pin_mem,
    )

    print('------------------------------------------>')
    print(args)

    for batch_idx, (input, target) in enumerate(loader_eval):
        if batch_idx >= 1:
            break

    print('-------------------------> enumerate loader_eval done.')

    return {"init": 0.888, "final": 1}
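Since the hang only appears when the loop over loader_eval runs, it may help to check what is still alive just before the final `return`. The sketch below uses only the standard library. `report_leftovers` is a hypothetical helper name, and it assumes that any leftover loader workers were started through Python's `multiprocessing` (children launched another way would not be listed). A Python process does not exit while a non-daemon thread is still running, so anything reported here is a candidate for what keeps the process up.

```python
import multiprocessing
import threading


def report_leftovers():
    """Return (non-daemon threads other than the main thread, live child processes)."""
    main = threading.main_thread()
    # Non-daemon threads block interpreter shutdown until they finish.
    threads = [
        t for t in threading.enumerate()
        if t is not main and not t.daemon
    ]
    # Child processes created via multiprocessing that are still alive.
    children = multiprocessing.active_children()
    return threads, children


if __name__ == "__main__":
    threads, children = report_leftovers()
    print("non-daemon threads:", [t.name for t in threads])
    print("live children:", [(c.name, c.pid) for c in children])
```

If both lists come back empty and the process still does not exit, the block is more likely in native code or in the distributed backend than in Python threads or worker processes.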