I have been trying to set the leading columns of the weight matrix entirely to zero. But after training, all the weight matrices I saved look the same. Please help me find a better way to achieve this.

My code:

import torch
import torch.nn as nn
import torchvision
import torchvision.transforms as transforms
import numpy as np

input_size = 784
hidden_size = 500
num_classes = 10
num_epochs = 3
batch_size = 256
learning_rate = 0.01

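# Placeholders for the weight snapshots taken below. The original values were
# lost when the code was pasted; None is assumed here so the script parses.
a = None
b = None
c = None
d = None
e = None
f = None
x = None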

train_dataset = torchvision.datasets.MNIST(root='…/…/data',
                                           train=True,
                                           transform=transforms.ToTensor(),
                                           download=True)

test_dataset = torchvision.datasets.MNIST(root='…/…/data',
                                          train=False,
                                          transform=transforms.ToTensor())

train_loader = torch.utils.data.DataLoader(dataset=train_dataset,
                                           batch_size=batch_size,
                                           shuffle=True)

test_loader = torch.utils.data.DataLoader(dataset=test_dataset,
                                          batch_size=batch_size,
                                          shuffle=False)

class NeuralNet(nn.Module):
    def __init__(self, input_size, hidden_size, num_classes):
        super(NeuralNet, self).__init__()
        self.fc1 = nn.Linear(input_size, hidden_size, bias=False)
        nn.init.normal_(self.fc1.weight)
        self.relu = nn.ReLU()
        self.fc2 = nn.Linear(hidden_size, num_classes, bias=False)

    def forward(self, x):
        out = self.fc1(x)
        out = self.relu(out)
        out = self.fc2(out)
        return out

# `device` was not defined in the pasted code; this is the usual definition.
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model = NeuralNet(input_size, hidden_size, num_classes).to(device)

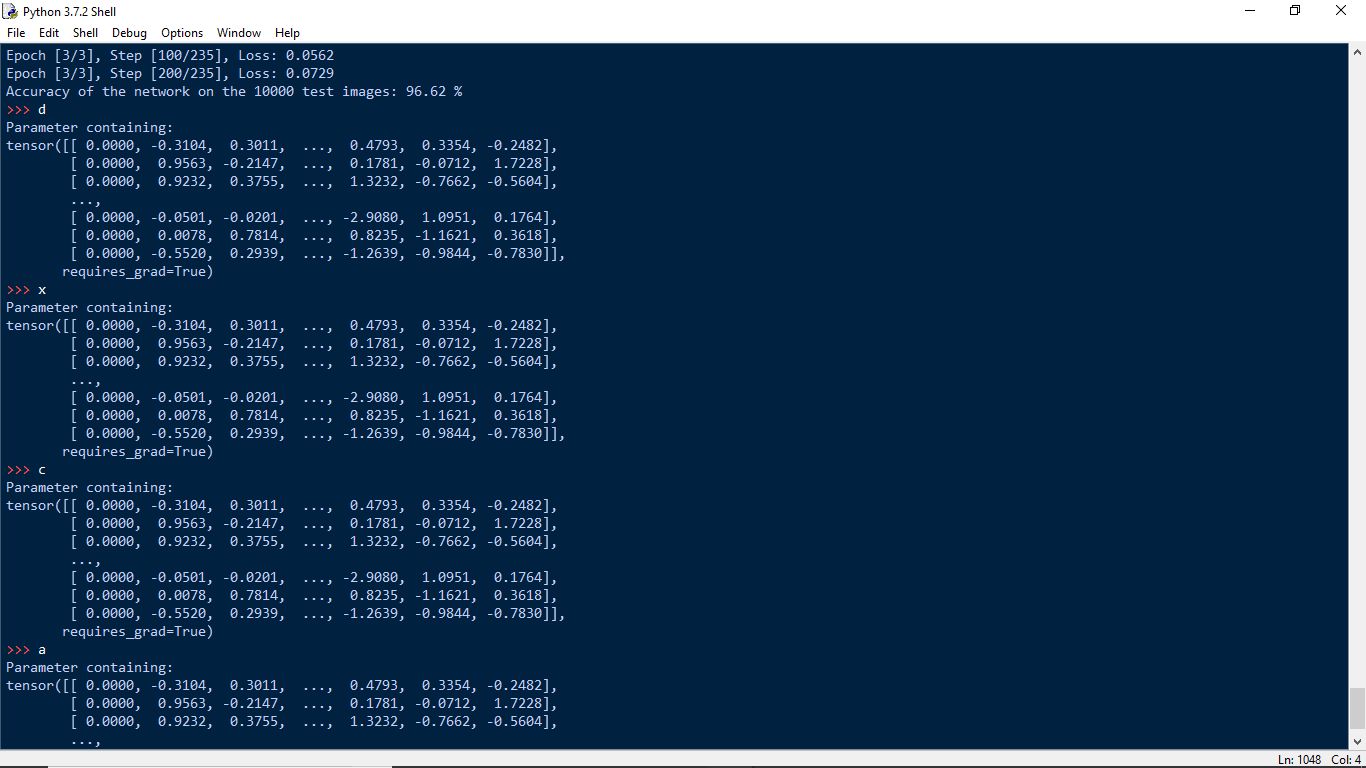

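# clone() stores a copy of the initial weights. A bare `a = model.fc1.weight`
# is only a reference to the live parameter and changes as training proceeds.
a = model.fc1.weight.detach().clone()

criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, weight_decay=0)

total_step = len(train_loader)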

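for epoch in range(num_epochs):
    for i, (images, labels) in enumerate(train_loader):
        images = images.reshape(-1, 28*28).to(device)
        labels = labels.to(device)

        # `epoch == 0 & i == 0` parses as `epoch == (0 & i) == 0`, which is
        # true on every step of epoch 0; `and` gives the intended check.
        if epoch == 0 and i == 0:
            with torch.no_grad():
                model.fc1.weight[:, 0:1] = 0  # zero the first column in place
            d = model.fc1.weight.detach().clone()
            x = model.fc1.weight.detach().clone()

        # Snapshots use clone(); plain references to model.fc1.weight all point
        # at the same tensor, which is why they looked identical after training.
        if epoch == 1:
            b = model.fc1.weight.detach().clone()
        if epoch == 2:
            c = model.fc1.weight.detach().clone()
        # epoch 3 and 4 are never reached while num_epochs = 3
        if epoch == 3:
            e = model.fc1.weight.detach().clone()
        if epoch == 4:
            f = model.fc1.weight.detach().clone()

        outputs = model(images)
        loss = criterion(outputs, labels)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if (i+1) % 100 == 0:
            print('Epoch [{}/{}], Step [{}/{}], Loss: {:.4f}'
                  .format(epoch+1, num_epochs, i+1, total_step, loss.item()))

# .cpu() is needed before .numpy() when the model lives on the GPU
b = model.fc1.weight.detach().cpu().numpy()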

with torch.no_grad():
    correct = 0
    total = 0
    for images, labels in test_loader:
        images = images.reshape(-1, 28*28).to(device)
        labels = labels.to(device)
        outputs = model(images)
        loss = criterion(outputs, labels)
        #b.append(loss.detach().numpy())
        _, predicted = torch.max(outputs.data, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

    print('Accuracy of the network on the 10000 test images: {} %'.format(100 * correct / total))

torch.save(model.state_dict(), 'model.ckpt')
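
For comparison, here is a minimal sketch of one way the zeroed column could be kept at zero through training. It is probably not the only option. The idea is to multiply the weight by a fixed 0/1 mask after every optimizer step, and to store snapshots with `clone()` so they do not all alias the same parameter. The names `layer`, `mask`, `apply_mask` and `snapshots` are made up for this illustration, and the small layer and random data are stand-ins for `fc1` and MNIST.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Small stand-in for fc1; the sizes are illustrative, not the MNIST sizes above.
layer = nn.Linear(8, 4, bias=False)
optimizer = torch.optim.Adam(layer.parameters(), lr=0.01)

# Mask with zeros in the columns to hold at zero (here: column 0), ones elsewhere.
mask = torch.ones_like(layer.weight)
mask[:, 0:1] = 0


def apply_mask():
    # In-place edits of a parameter have to happen outside autograd tracking.
    with torch.no_grad():
        layer.weight.mul_(mask)


apply_mask()  # zero the column once before training starts
snapshots = []
for step in range(3):
    inputs = torch.randn(16, 8)           # random stand-in batch
    targets = torch.randint(0, 4, (16,))  # random stand-in labels
    loss = nn.functional.cross_entropy(layer(inputs), targets)

    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    apply_mask()  # re-zero after every update so the column cannot drift

    # clone() stores a copy; a bare reference would follow the live parameter.
    snapshots.append(layer.weight.detach().clone())

print(snapshots[-1][:, 0])
```

In the script above, the same pattern would mean calling the masking step right after `optimizer.step()` on `model.fc1.weight`, instead of zeroing the column only at the first iteration.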