My profiling code is as follows:

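    import torch
    import torchvision

    # Pretrained ResNeXt-50 on the GPU, in training mode
    net = torchvision.models.resnext50_32x4d(pretrained=True).cuda()
    net.train()

    # Profile one forward pass, recording CUDA kernel times as well
    with torch.autograd.profiler.profile(use_cuda=True) as prof:
        predict = net(input)  # `input` is the input batch, defined elsewhere

    print(prof)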
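As a cross-check that does not depend on the profiler, the forward pass could also be timed directly. This is only a minimal sketch: `time_forward` is a helper name made up for illustration, and it assumes a few warm-up calls are enough to absorb one-time costs such as CUDA context creation and cuDNN setup. The `sync` argument is there because CUDA kernels launch asynchronously, so the clock should only be read after the GPU has finished.

```python
import time


def time_forward(step, sync=None, warmup=3, iters=10):
    """Return the mean wall-clock seconds per call of `step`.

    `step` is any zero-argument callable (hypothetical helper, not a PyTorch API).
    `sync`, if given, is called before reading the clock so that pending
    asynchronous work (e.g. CUDA kernels) is included in the measurement.
    """
    def _sync():
        if sync is not None:
            sync()

    # Warm-up calls are excluded from the measurement.
    for _ in range(warmup):
        step()
    _sync()

    start = time.perf_counter()
    for _ in range(iters):
        step()
    _sync()
    return (time.perf_counter() - start) / iters


# Intended use with the model above (not executed here):
#   avg_s = time_forward(lambda: net(input), sync=torch.cuda.synchronize)
```

If the gap between the two PyTorch versions shrinks under this kind of measurement, that would suggest the difference sits in first-call or profiler overhead rather than in the steady-state kernels.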

The code and results can be found at: https://drive.google.com/open?id=1vyTkqBpQwUvCSnGjjJPdzIzLTEfJF8Sr

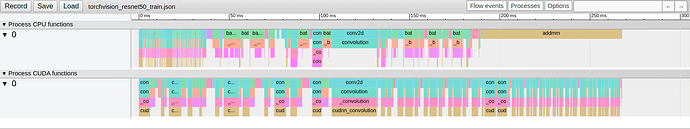

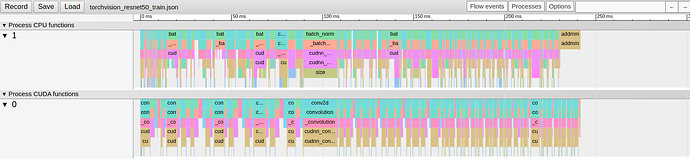

Here are excerpts of the profiler output, first for PyTorch 1.6 and then for PyTorch 1.4 (the environment details are printed after each table):

...

is_leaf 0.00% 1.850us 0.00% 1.850us 1.850us 0.00% 1.024us 1.024us 1 []

is_leaf 0.00% 2.119us 0.00% 2.119us 2.119us 0.00% 2.048us 2.048us 1 []

max_pool2d_with_indices 0.05% 479.919us 0.05% 479.919us 479.919us 0.19% 2.061ms 2.061ms 1 []

--------------------------- --------------- --------------- --------------- --------------- --------------- --------------- --------------- --------------- --------------- -----------------------------------

Self CPU time total: 1.034s

CUDA time total: 1.097s

accuracy 0.687500

pytorch

torch.__version__ = 1.6.0.dev20200516

torch.version.cuda = 10.2

torch.backends.cudnn.version() = 7605

torch.backends.cudnn.benchmark = False

torch.backends.cudnn.enabled = True

torch.backends.cudnn.deterministic = False

torch.cuda.device_count() = 1

torch.cuda.get_device_properties() = _CudaDeviceProperties(name='TITAN X (Pascal)', major=6, minor=1, total_memory=12192MB, multi_processor_count=28)

torch.cuda.memory_allocated() = 0 GB

torch.cuda.memory_reserved() = 8 GB

cudnn_convolution 0.12% 533.067us 0.12% 533.067us 533.067us 0.63% 4.750ms 4.750ms 1 []

add 0.00% 21.091us 0.00% 21.091us 21.091us 0.00% 9.215us 9.215us 1 []

batch_norm 0.07% 324.928us 0.07% 324.928us 324.928us 0.23% 1.723ms 1.723ms 1 []

_batch_norm_impl_index 0.07% 318.668us 0.07% 318.668us 318.668us 0.23% 1.721ms 1.721ms 1 []

--------------------------- --------------- --------------- --------------- --------------- --------------- --------------- --------------- --------------- --------------- -----------------------------------

Self CPU time total: 457.948ms

CUDA time total: 750.666ms

accuracy 0.687500

pytorch

torch.__version__ = 1.4.0

torch.version.cuda = 10.1

torch.backends.cudnn.version() = 7603

torch.backends.cudnn.benchmark = False

torch.backends.cudnn.enabled = True

torch.backends.cudnn.deterministic = False

torch.cuda.device_count() = 1

torch.cuda.get_device_properties() = _CudaDeviceProperties(name='TITAN X (Pascal)', major=6, minor=1, total_memory=12192MB, multi_processor_count=28)

torch.cuda.memory_allocated() = 0 GB

torch.cuda.memory_reserved() = 8 GB

I see similar results on another machine with a GTX 1080 Ti.

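Why is there such a large difference between the two versions? With PyTorch 1.6 the totals are 1.034s (self CPU) and 1.097s (CUDA), versus 457.948ms and 750.666ms with PyTorch 1.4.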
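To put a number on the gap, here is a small sketch that converts the totals reported above to milliseconds and computes the slowdown factors. The helper `to_ms` is a name made up for this example, and it assumes the profiler only prints the `us`, `ms`, and `s` suffixes seen in the output above.

```python
# Conversion factors from each suffix to milliseconds.
UNITS_MS = {"us": 1e-3, "ms": 1.0, "s": 1e3}


def to_ms(text):
    """Convert a profiler time string such as '750.666ms' to milliseconds."""
    # Check the two-letter suffixes before the bare 's'.
    for unit in ("us", "ms", "s"):
        if text.endswith(unit):
            return float(text[: -len(unit)]) * UNITS_MS[unit]
    raise ValueError(f"unrecognized time string: {text!r}")


# Totals copied from the profiler output above.
cpu_ratio = to_ms("1.034s") / to_ms("457.948ms")   # PyTorch 1.6 vs 1.4, self CPU
cuda_ratio = to_ms("1.097s") / to_ms("750.666ms")  # PyTorch 1.6 vs 1.4, CUDA

print(f"Self CPU slowdown: {cpu_ratio:.2f}x")
print(f"CUDA slowdown:     {cuda_ratio:.2f}x")
```

By these totals the 1.6 nightly is roughly 2.26x slower on self CPU time and roughly 1.46x slower on CUDA time for the same forward pass.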