Could you post the installation log so that we could have a look, please?

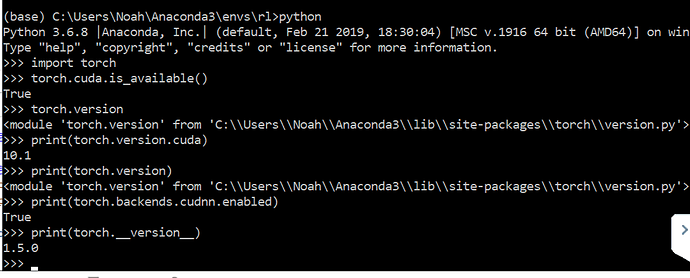

When I installed PyTorch for CUDA 10.2, torch.cuda.is_available() returned False.

In both cases (CUDA 10.1 and 10.2) I get AssertionError: Torch not compiled with CUDA enabled.

Thanks. I meant the installation logs from the binary install (e.g. pip install ... or conda install ...).

Anyway, since torch.version.cuda prints a version, the CUDA packages should be installed.
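The checks mentioned so far can be gathered in one small script. This is only a sketch: `cuda_report` is a hypothetical helper name, and it relies on nothing beyond `torch.version.cuda`, `torch.cuda.is_available()`, `torch.cuda.get_device_name()` and `torch.cuda.get_device_capability()`.

```python
def cuda_report():
    """Collect basic facts about the installed PyTorch build."""
    try:
        import torch
    except ImportError:
        # PyTorch is not installed in this environment
        return {"torch_installed": False}

    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # CUDA version the binary was built with; None for CPU-only builds
        "built_with_cuda": torch.version.cuda,
        # True only if the build has CUDA support AND a usable driver/GPU is found
        "cuda_available": torch.cuda.is_available(),
    }
    if report["cuda_available"]:
        report["device_name"] = torch.cuda.get_device_name(0)
        report["compute_capability"] = torch.cuda.get_device_capability(0)
    return report


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(f"{key}: {value}")
```

If `built_with_cuda` shows a version but `cuda_available` is False, the driver is the more likely suspect; if it shows None, a CPU-only build was installed.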

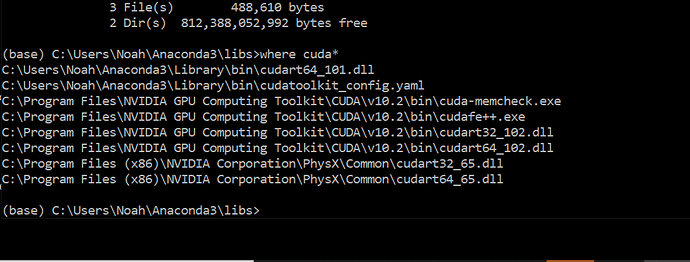

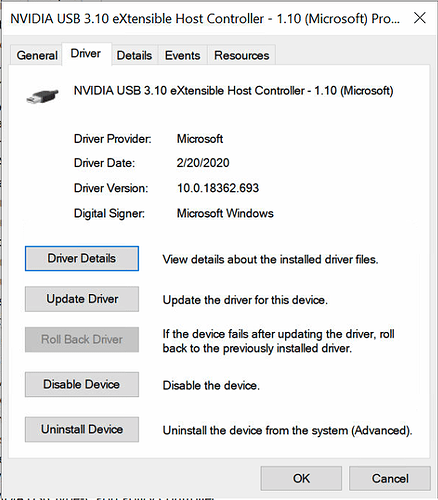

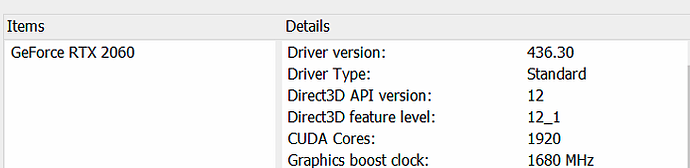

Which NVIDIA driver and GPU are you using?

I used this command: conda install pytorch torchvision cudatoolkit=10.1 -c pytorch

Could you check the driver version in the “NVIDIA Control Panel”, as described here (the second method)?

For CUDA 10.2 you might need to update the driver to >= 440.33, but 10.1 should work.

I’m using the version numbers for Linux as I’m not really familiar with Windows.
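Comparing driver versions as plain strings is error-prone, so here is a minimal sketch of a numeric comparison. The helper names are hypothetical, and the only threshold in the table is the 440.33 figure quoted above for Linux; the minimum on Windows may differ, so treat the table as an assumption to verify against NVIDIA's release notes.

```python
def parse_version(text):
    """Turn '436.30' into (436, 30) so versions compare numerically."""
    return tuple(int(part) for part in text.strip().split("."))


# Assumption: minimum driver per CUDA version, taken from this thread
# (Linux numbers). Verify against NVIDIA's release notes for your platform.
MIN_DRIVER = {"10.2": "440.33"}


def driver_is_new_enough(driver, cuda):
    """Return True if `driver` meets the minimum listed for `cuda`."""
    return parse_version(driver) >= parse_version(MIN_DRIVER[cuda])


print(driver_is_new_enough("436.30", "10.2"))  # False
print(driver_is_new_enough("441.22", "10.2"))  # True
```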

Were you able to use the GPU before and are running into these issues after upgrading or is this your first installation?

This is my first installation. I think I installed CUDA 10.2 first and then installed PyTorch with conda install pytorch torchvision cudatoolkit=10.1 -c pytorch. The problem might be that CUDA 10.2 doesn’t support driver 436.30. I will uninstall CUDA 10.2 and install 10.1. Would that work?

You don’t need to do that. We don’t rely on the CUDA you installed, because the PyTorch binaries come with their own CUDA runtime. You have two options:

- Update your GPU driver

- Install the CUDA 10.1 variant of the package with conda uninstall pytorch, followed by conda install pytorch torchvision cudatoolkit=10.1 -c pytorch.

Hi Pavlos, my GPU's CUDA compute capability is 6.1, but I still face the same problem as you. Any suggestions?

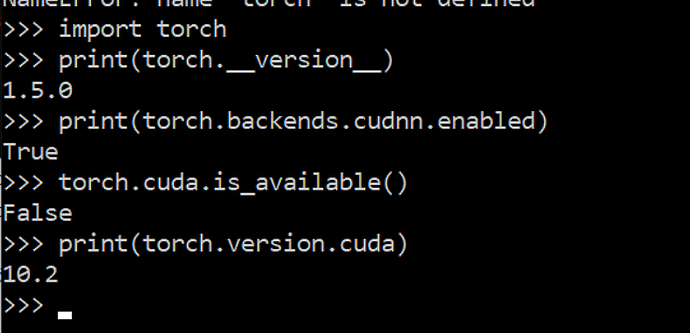

Hi all, I am facing the same problem. I realized that when I install PyTorch with the command conda install pytorch torchvision cudatoolkit=10.2 -c pytorch, conda does not install the CUDA-compiled build of torch. I confirmed it using

>>> torch.has_cuda

False

The installation log for the above install command is below:

environment location: F:\Softwares\anaconda

added / updated specs:

- cudatoolkit=10.2

- pytorch

- torchvision

The following NEW packages will be INSTALLED:

ninja pkgs/main/win-64::ninja-1.9.0-py37h74a9793_0

pytorch pytorch/win-64::pytorch-1.5.0-py3.7_cpu_0

torchvision pytorch/win-64::torchvision-0.6.0-py37_cpu
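The `cpu` token in the build strings above is the giveaway that conda resolved to the CPU-only packages. A small sketch for spotting this in a log line follows; `is_cpu_build` is a hypothetical helper, and the assumption that CPU-only builds always carry a `cpu` token is based only on the strings in this log. The CUDA-style build string in the last call is an illustrative guess, not taken from the log.

```python
def is_cpu_build(spec):
    """Return True if a conda package spec names a CPU-only build.

    Assumption: CPU-only builds carry a `cpu` token in the build string,
    as in `pytorch-1.5.0-py3.7_cpu_0`.
    """
    # 'channel/platform::name-version-build' -> 'name-version-build'
    dist = spec.split("::")[-1]
    # The build string is everything after the last '-'
    build = dist.rsplit("-", 1)[-1]
    # Build strings are '_'-separated tokens, e.g. ['py3.7', 'cpu', '0']
    return "cpu" in build.split("_")


print(is_cpu_build("pytorch/win-64::pytorch-1.5.0-py3.7_cpu_0"))   # True
print(is_cpu_build("pytorch/win-64::torchvision-0.6.0-py37_cpu"))  # True
print(is_cpu_build("pkgs/main/win-64::ninja-1.9.0-py37h74a9793_0"))  # False
# Hypothetical CUDA build string, for illustration only
print(is_cpu_build("pytorch/win-64::pytorch-1.5.0-py3.7_cuda102_cudnn7_1"))  # False
```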

My deviceQuery result shows -

Device 0: "GeForce MX250"

CUDA Driver Version / Runtime Version 10.2 / 10.2

CUDA Capability Major/Minor version number: 6.1

Texture alignment: zu bytes

Concurrent copy and kernel execution: Yes with 5 copy engine(s)

Run time limit on kernels: Yes

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

CUDA Device Driver Mode (TCC or WDDM): WDDM (Windows Display Driver Model)

Device supports Unified Addressing (UVA): Yes

Device supports Compute Preemption: Yes

Supports Cooperative Kernel Launch: No

Supports MultiDevice Co-op Kernel Launch: No

Device PCI Domain ID / Bus ID / location ID: 0 / 2 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 10.2, CUDA Runtime Version = 10.2, NumDevs = 1, Device0 = GeForce MX250

Result = PASS

Driver version - 441.22

The only unusual thing about my setup is that the CUDA toolkit and Anaconda are on different drives.

Update

When I tried the CUDA 10.1 variant (conda install pytorch torchvision cudatoolkit=10.1 -c pytorch), as @peterjc123 suggested, conda still tried to install the non-CUDA version of PyTorch:

The following packages will be downloaded:

package | build

---------------------------|-----------------

cudatoolkit-10.1.243 | h74a9793_0 300.3 MB

------------------------------------------------------------

Total: 300.3 MB

The following NEW packages will be INSTALLED:

ninja pkgs/main/win-64::ninja-1.9.0-py37h74a9793_0

pytorch pytorch/win-64::pytorch-1.5.0-py3.7_cpu_0

torchvision pytorch/win-64::torchvision-0.6.0-py37_cpu

The following packages will be DOWNGRADED:

cudatoolkit 10.2.89-h74a9793_1 --> 10.1.243-h74a9793_0

@ashwitha_d Actually, switching from a CPU build to a CUDA build is a bit tricky. Running conda install -c pytorch cudatoolkit=10.2 pytorch torchvision is not enough. You’ll also need to remove cpuonly by running conda uninstall cpuonly.

@peterjc123 I see! I missed that step of uninstalling cpuonly. But it is a good point to note. Thanks for your reply.
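To check whether `cpuonly` is still present in an environment, the output of `conda list --json` can be inspected. The following is a minimal sketch: `has_cpuonly` is a hypothetical helper, and the sample JSON is a trimmed-down, made-up stand-in for real conda output.

```python
import json


def has_cpuonly(conda_list_json):
    """Return True if the `cpuonly` package appears in `conda list --json` output."""
    packages = json.loads(conda_list_json)
    return any(pkg.get("name") == "cpuonly" for pkg in packages)


# Hypothetical, trimmed-down sample of `conda list --json` output.
sample = json.dumps([
    {"name": "cpuonly", "version": "1.0", "channel": "pytorch"},
    {"name": "pytorch", "version": "1.5.0", "channel": "pytorch"},
])
print(has_cpuonly(sample))  # True
```

On a real machine the JSON could come from `subprocess.run(["conda", "list", "--json"], capture_output=True, text=True).stdout`, assuming conda is on the PATH.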

How can I do the equivalent of conda install pytorch torchvision cudatoolkit=10.1 -c pytorch with pip?

From here (assuming you want the latest stable versions):

pip install torch==1.5.1+cu101 torchvision==0.6.1+cu101 -f https://download.pytorch.org/whl/torch_stable.html
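After installing, the `+cu101` tag from the command above should show up in `torch.__version__`. Here is a small sketch for reading that tag; both helper names are hypothetical. Note that a missing tag is inconclusive, since not every CUDA-enabled wheel carries one, so `torch.cuda.is_available()` remains the real test.

```python
def split_local_version(version):
    """Split '1.5.1+cu101' into ('1.5.1', 'cu101'); the tag is None if absent."""
    public, _, local = version.partition("+")
    return public, (local or None)


def looks_like_cuda_wheel(version):
    """Heuristic: wheels installed with a '+cuXYZ' pin report that tag."""
    _, tag = split_local_version(version)
    return tag is not None and tag.startswith("cu")


print(split_local_version("1.5.1+cu101"))   # ('1.5.1', 'cu101')
print(looks_like_cuda_wheel("1.5.1+cu101"))  # True
print(looks_like_cuda_wheel("1.5.1+cpu"))    # False
```

In practice the string passed in would be `torch.__version__`.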

How can I resolve the following error?

“RuntimeError: CUDA error: no kernel image is available for execution on the device”

My working environment is as follows

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 440.59 Driver Version: 440.59 CUDA Version: 10.2 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

|===============================+======================+======================|

| 0 GeForce GTX TIT… Off | 00000000:01:00.0 On | N/A |

| 27% 43C P8 26W / 250W | 426MiB / 6078MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: GPU Memory |

| GPU PID Type Process name Usage |

|=============================================================================|

| 0 449 G /usr/lib/xorg/Xorg 209MiB |

| 0 1045 G /usr/lib/xorg/Xorg 12MiB |

| 0 20266 G /usr/lib/xorg/Xorg 12MiB |

| 0 31467 C /home/remya/anaconda3/bin/python 177MiB |

+-----------------------------------------------------------------------------+

Ubuntu 16.04

Thanks

Remya

The error can be raised if the CUDA code in your PyTorch installation wasn’t compiled for the compute capability of your device. Which GPU are you using and how did you install PyTorch?
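The compatibility rule behind this error can be sketched in a few lines. The helper names are hypothetical and the arch lists below are made-up examples, not the actual contents of any PyTorch release. The rule encoded is the general CUDA one: binary code for `sm_XY` runs on devices with the same major version and an equal or higher minor version, while PTX for `compute_XY` can be JIT-compiled for any device of equal or higher capability.

```python
def parse_arch(arch):
    """'sm_61' -> ('sm', (6, 1)); 'compute_70' -> ('compute', (7, 0))."""
    kind, _, digits = arch.partition("_")
    # The last digit is the minor version, everything before it is the major
    return kind, (int(digits[:-1]), int(digits[-1]))


def has_kernel_image(capability, arch_list):
    """Return True if a build with `arch_list` can run on a `capability` device."""
    major, minor = capability
    for arch in arch_list:
        kind, (a_major, a_minor) = parse_arch(arch)
        if kind == "sm" and a_major == major and a_minor <= minor:
            return True  # binary code runs within the same major version
        if kind == "compute" and (a_major, a_minor) <= (major, minor):
            return True  # PTX can be JIT-compiled for newer devices
    return False


# Hypothetical example: a capability 3.5 device and a build without sm_35
print(has_kernel_image((3, 5), ["sm_37", "sm_50", "sm_60", "sm_70"]))  # False
print(has_kernel_image((6, 1), ["sm_60", "sm_70"]))                    # True
```

The device side can be read with `torch.cuda.get_device_capability()`. Recent PyTorch releases also expose `torch.cuda.get_arch_list()` for the build side, though it may not exist in older versions.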