Hi everyone,

I want to profile an AI training run.

I use a `with record_function` block labeled “forward_backward” to group the called functions. This works fine on the CPU side: all functions that belong to the forward or backward pass appear underneath that label.
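For readers following along, the setup described above might look roughly like this minimal sketch. The helper name `profile_forward_backward` and its arguments are hypothetical and not taken from the original training script; the profiler calls are the standard `torch.profiler` ones, and GPU activity is only requested when a CUDA device is present.

```python
import torch
from torch.profiler import ProfilerActivity, profile, record_function


def profile_forward_backward(model, inputs, targets, loss_fn):
    """Profile one forward/backward pass under a single named range.

    Hypothetical helper for illustration only.
    """
    activities = [ProfilerActivity.CPU]
    if torch.cuda.is_available():
        # Only request GPU activity when a CUDA device is present.
        activities.append(ProfilerActivity.CUDA)

    with profile(activities=activities) as prof:
        # Everything launched inside this block is grouped under the
        # "forward_backward" label on the CPU timeline.
        with record_function("forward_backward"):
            loss = loss_fn(model(inputs), targets)
            loss.backward()
    return prof
```

The returned object can then be inspected with `prof.key_averages()` or written out with `prof.export_chrome_trace(...)` to view the timeline.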

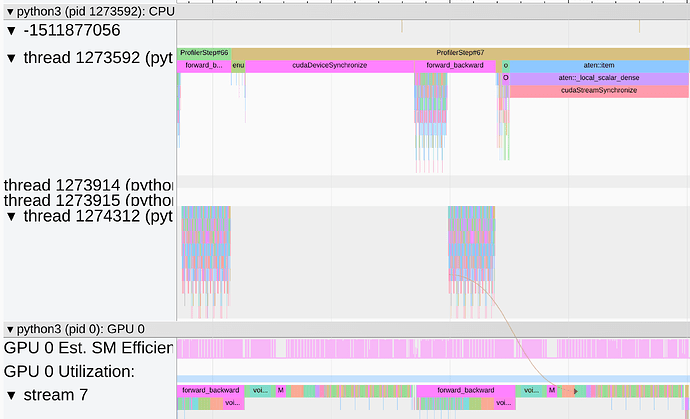

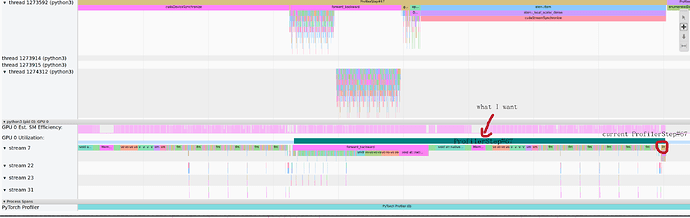

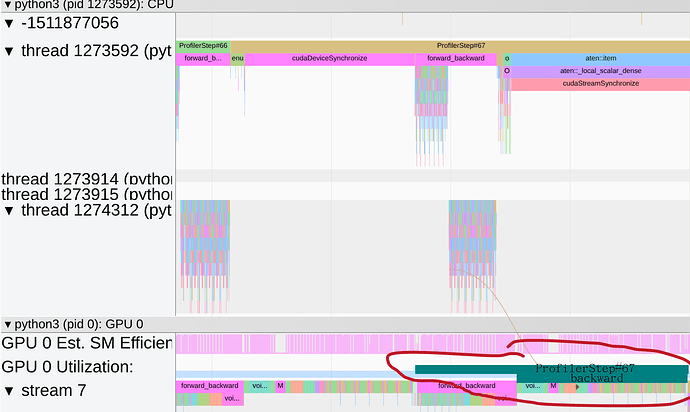

But on the GPU, things are messed up. The forward part correctly appears under the “forward_backward” label, but the whole backward part is separate, outside of the label. The same problem occurs with the profiler step: the “ProfilerStep#67” range on the GPU covers only a small subset of the launched kernels.

Does anyone have an idea how to get all kernels that were launched under “ProfilerStep#67” on the CPU to also appear under that label on the GPU, and likewise for the “forward_backward” label?

Thanks!

I’m not sure if I understand your issue correctly, but keep in mind that CUDA operations are executed asynchronously. I.e., the host code will schedule the kernels inside the marked region, while the actual workload could be executed outside of this block, e.g. if other kernels are already running on the GPU. Is this what you are seeing?

Sorry for the unclear wording, and thanks for the fast answer.

My goal is to measure the total time the GPU took for the backward pass and for a full step. So basically the time between the first and the last kernel call for forward/backward and for the full step.

I want to read just a single duration from the trace. Is there any way to merge all the individual kernel calls in the backward pass into one block (first image)? At the moment, if I read the ProfilerStep#67 time on the GPU, I get a very short time, because only a small part of the GPU computation is attributed to it (second image). Is it possible to have one large block spanning from the first to the last kernel call? If not, is there a simple way to measure the time between the first and last kernel call in the backward pass without synchronizing anything?
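One possible way to get such a single number without adding any synchronization is to post-process the exported Chrome trace. The sketch below is only an illustration under stated assumptions: the function name `gpu_span` is hypothetical, and the category names (`user_annotation`, `cuda_runtime`, `kernel`) and the `correlation` field under `args` are assumed to match the exported trace. They can differ between PyTorch versions, so they are exposed as parameters. The idea is to collect the kernel launches that fall inside the CPU range, match them to GPU kernels by correlation id, and take the time from the first kernel start to the last kernel end.

```python
def gpu_span(events, annotation_name,
             annotation_cat="user_annotation",
             runtime_cat="cuda_runtime",
             kernel_cat="kernel"):
    """Return (first_start, last_end, duration) of the GPU kernels that were
    launched inside the first CPU range named `annotation_name`.

    `events` is a list of Chrome-trace event dicts. Times are in the trace's
    own unit (microseconds in the Chrome trace format). The category names
    and the "correlation" field are assumptions about the trace layout.
    """
    # 1. Locate the CPU-side range (first occurrence of the label).
    cpu_ranges = [
        (e["ts"], e["ts"] + e["dur"])
        for e in events
        if e.get("ph") == "X"
        and e.get("cat") == annotation_cat
        and e.get("name") == annotation_name
    ]
    if not cpu_ranges:
        raise ValueError(f"no CPU range named {annotation_name!r} found")
    start, end = cpu_ranges[0]

    # 2. Collect correlation ids of runtime calls issued inside that range.
    launched = {
        e["args"]["correlation"]
        for e in events
        if e.get("ph") == "X"
        and e.get("cat") == runtime_cat
        and start <= e["ts"] <= end
        and "correlation" in e.get("args", {})
    }

    # 3. Find the GPU kernels that belong to those launches.
    kernels = [
        e for e in events
        if e.get("ph") == "X"
        and e.get("cat") == kernel_cat
        and e.get("args", {}).get("correlation") in launched
    ]
    if not kernels:
        raise ValueError("no matching GPU kernels found")

    # 4. Span from the first kernel start to the last kernel end.
    first_start = min(k["ts"] for k in kernels)
    last_end = max(k["ts"] + k["dur"] for k in kernels)
    return first_start, last_end, last_end - first_start
```

With a trace written by `prof.export_chrome_trace(...)`, the events would typically be loaded with `json.load(f)["traceEvents"]` and passed in together with a label such as "forward_backward". Note that the span includes any idle gaps between kernels, which matches the "first to last kernel" definition above.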

Second image:

First image: