Hey. Thanks for the reply! I did use it, and while I did observe some improvement with fused fake quantization, it's still slower than the model without any quantisation. Shouldn't there be an improvement in the computational time?

(I will share a screenshot of the CPU time with fused fake quantization soon.)

Currently, BackendConfig is not supported by eager mode quantization, and I think it will take a long time for eager mode quantization to support it. I would recommend using FX graph mode quantization instead.

No, we don't expect to see an improvement in training time, since we are adding additional operations (fake quantize ops) to the model. We do expect to see an improvement for the converted model, which is a real quantized model.
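A minimal eager-mode QAT sketch may help show where the extra training cost comes from and which model is expected to be faster. `TinyNet` is a hypothetical toy model, and the backend choice (`fbgemm` if available, else `qnnpack`) is an assumption about the machine; the training loop is only a stand-in.

```python
import torch
import torch.nn as nn
import torch.ao.quantization as tq


class TinyNet(nn.Module):
    """Hypothetical toy model; QuantStub/DeQuantStub mark the quantized region."""

    def __init__(self):
        super().__init__()
        self.quant = tq.QuantStub()
        self.fc = nn.Linear(16, 8)
        self.relu = nn.ReLU()
        self.dequant = tq.DeQuantStub()

    def forward(self, x):
        return self.dequant(self.relu(self.fc(self.quant(x))))


# Assumption: pick whichever quantized backend this machine supports.
engine = "fbgemm" if "fbgemm" in torch.backends.quantized.supported_engines else "qnnpack"
torch.backends.quantized.engine = engine

model = TinyNet().train()
model.qconfig = tq.get_default_qat_qconfig(engine)

# prepare_qat swaps nn.Linear for a QAT Linear and inserts fake quantize modules.
# These run on top of the fp32 ops, which is why each training step is slower.
qat_model = tq.prepare_qat(model)

optimizer = torch.optim.SGD(qat_model.parameters(), lr=0.01)
for _ in range(3):  # stand-in for a real training loop
    x = torch.randn(4, 16)
    loss = qat_model(x).pow(2).mean()
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

# convert replaces the fake-quantized modules with real int8 modules;
# this converted model is the one expected to be faster at inference.
qat_model.eval()
int8_model = tq.convert(qat_model)
out = int8_model(torch.randn(4, 16))
print(type(qat_model.fc), type(int8_model.fc))
```

Timing comparisons would then be made between the fp32 model and `int8_model`, not `qat_model`.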

Okay. So we are supposed to see an improvement in the converted model, during evaluation? I would really appreciate it if you could help me with another question: does the increase in training time happen with other quantisation schemes too?

> Okay. So we are supposed to see an improvement in the converted model, during evaluation?

Yes

> I would really appreciate it if you could help me with another question: does the increase in training time happen with other quantisation schemes too?

Yes, since we are fake quantizing some activation/weight Tensors in addition to running the original fp32 ops during quantization aware training.
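To illustrate what one of those extra ops computes, here is a small sketch using `torch.fake_quantize_per_tensor_affine` next to the same round/clamp/rescale arithmetic written out by hand. The scale, zero point, and input values are arbitrary choices for the example.

```python
import torch

x = torch.tensor([-1.0, 0.3, 0.77, 2.0, 20.0])
scale, zero_point = 0.1, 0
quant_min, quant_max = -128, 127  # int8 range

# Built-in fake quantize: snap to the int8 grid, then map back to fp32.
fq = torch.fake_quantize_per_tensor_affine(x, scale, zero_point, quant_min, quant_max)

# The same computation spelled out. During QAT, work like this is done for each
# fake-quantized weight/activation, on top of the original fp32 forward pass.
manual = (torch.clamp(torch.round(x / scale) + zero_point, quant_min, quant_max) - zero_point) * scale

print(fq)      # still float32, but restricted to multiples of the scale
print(manual)  # 20.0 is clamped to quant_max * scale
```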

I know, thanks, but I want to change the bias type (to qint8).

I have another question: I need to set the scale to 1/2, 1/4, 1/8, …, 2^-n. Where do I need to change that?

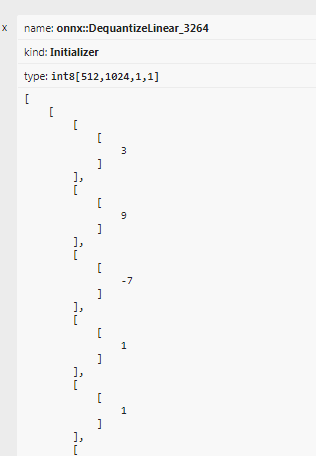

Can I output the quantized values as float32? The data is int, for example:

Okay. Thanks a lot! Really appreciate the help.

Do you have a good idea?

Currently it's only supported in FX graph mode quantization; are you able to use that? See (prototype) FX Graph Mode Post Training Static Quantization (PyTorch Tutorials 1.12.1+cu102 documentation).
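For reference, a minimal FX graph mode post-training static quantization sketch. It assumes the PyTorch 1.13+ API (`get_default_qconfig_mapping`, and `prepare_fx` taking `example_inputs`); the 1.12 tutorial linked above may use different arguments. The toy `nn.Sequential` model and the random calibration data are placeholders.

```python
import torch
import torch.nn as nn
from torch.ao.quantization import get_default_qconfig_mapping
from torch.ao.quantization.quantize_fx import convert_fx, prepare_fx

# Assumption: pick whichever quantized backend this machine supports.
engine = "fbgemm" if "fbgemm" in torch.backends.quantized.supported_engines else "qnnpack"
torch.backends.quantized.engine = engine

# FX mode traces the model symbolically, so no QuantStub/DeQuantStub is needed.
model = nn.Sequential(nn.Linear(16, 8), nn.ReLU()).eval()
example_inputs = (torch.randn(1, 16),)

qconfig_mapping = get_default_qconfig_mapping(engine)
# prepare_fx also has a backend_config parameter; that is where a custom
# BackendConfig would be passed in FX mode.
prepared = prepare_fx(model, qconfig_mapping, example_inputs)

# Calibration: run representative data through the inserted observers.
with torch.no_grad():
    for _ in range(8):
        prepared(torch.randn(4, 16))

quantized = convert_fx(prepared)
out = quantized(torch.randn(4, 16))
print(quantized.code)  # generated forward showing the quantized ops
```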

You would need to write a new observer or fake quantize module and customize `calculate_qparams`: https://github.com/pytorch/pytorch/blob/master/torch/ao/quantization/observer.py#L294
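A minimal sketch of such an observer, assuming it is acceptable to start from `MinMaxObserver` and round its scale up to the nearest power of two. `PowerOfTwoMinMaxObserver` is a hypothetical name, not a PyTorch class. It uses symmetric qint8 so the zero point stays 0 and does not need to be recomputed after the scale changes.

```python
import torch
from torch.ao.quantization.observer import MinMaxObserver


class PowerOfTwoMinMaxObserver(MinMaxObserver):
    """Hypothetical observer: MinMaxObserver whose scale is rounded up to 2**k."""

    def calculate_qparams(self):
        scale, zero_point = super().calculate_qparams()
        # Round log2(scale) up so the observed range is still covered,
        # giving scales such as 1/2, 1/4, 1/8, ..., 2**-n.
        scale = torch.pow(2.0, torch.ceil(torch.log2(scale)))
        return scale, zero_point


# Symmetric qint8 keeps zero_point at 0, so only the scale changes.
obs = PowerOfTwoMinMaxObserver(dtype=torch.qint8, qscheme=torch.per_tensor_symmetric)
obs(torch.tensor([-3.0, 1.0, 2.5]))  # record min/max
scale, zero_point = obs.calculate_qparams()
print(scale, zero_point)
```

Rounding up rather than to the nearest power of two is a design choice: it never shrinks the representable range, at the cost of some resolution. For QAT, such an observer could be passed to a fake quantize module, for example via `FakeQuantize.with_args(observer=PowerOfTwoMinMaxObserver, ...)`.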

Actually, we have a recent intern project that implements the additive power-of-two quantization method; maybe you can take a look at it as well: https://github.com/pytorch/pytorch/tree/master/torch/ao/quantization/experimental

Hi, can LSTM be quantized? In eager mode or FX?

We support eager mode quantization for LSTM through our custom module API, and @andrewor recently added support for FX graph mode quantization as well.

Test for eager mode: pytorch/test_quantized_op.py at master · pytorch/pytorch · GitHub

Test for FX: pytorch/test_quantize_fx.py at master · pytorch/pytorch · GitHub
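The custom module API flow for static quantization is what the eager test above exercises. A different and simpler eager-mode option, not the one described in the reply, is dynamic quantization: `quantize_dynamic` stores LSTM and Linear weights as int8 and quantizes activations on the fly. `TinyLSTM` and its sizes are hypothetical, and the backend choice is an assumption about the machine.

```python
import torch
import torch.nn as nn
from torch.ao.quantization import quantize_dynamic


class TinyLSTM(nn.Module):
    """Hypothetical toy model: one LSTM layer followed by a Linear head."""

    def __init__(self):
        super().__init__()
        self.lstm = nn.LSTM(input_size=8, hidden_size=16)
        self.fc = nn.Linear(16, 4)

    def forward(self, x):
        out, _ = self.lstm(x)
        return self.fc(out)


# Assumption: pick whichever quantized backend this machine supports.
engine = "fbgemm" if "fbgemm" in torch.backends.quantized.supported_engines else "qnnpack"
torch.backends.quantized.engine = engine

model = TinyLSTM().eval()
# Dynamic quantization: swap nn.LSTM and nn.Linear for dynamically quantized versions.
qmodel = quantize_dynamic(model, {nn.LSTM, nn.Linear}, dtype=torch.qint8)

x = torch.randn(5, 2, 8)  # (seq_len, batch, input_size)
y = qmodel(x)
print(qmodel)
```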

Also, please open a new post for a new question instead of replying to an unrelated post, so that other people can find it as well.

OK, thank you. I just have one last question: on the COCO dataset, is the accuracy of QAT good? Or is the accuracy loss large?

We don't have the numbers. I think it will depend on how you do QAT, but typically it should work reasonably well for vision models.